Advances in technology are having a profound impact on astronomy and astrophysics. At one end, we have advanced hardware like adaptive optics, coronagraphs, and spectrometers that allow for more light to be gathered from the cosmos. At the other end, we have improved software and machine learning algorithms that allow the data to be analyzed and mined for valuable nuggets of information.

One area of research where this is proving to be invaluable is in the hunt for exoplanets and the search for life. At the University of Warwick, researchers recently developed an algorithm that was able to confirm the existence of 50 new exoplanets. When applied to archival data, this algorithm was able to sort through a sample of candidates and determine which were actual planets and which were false positives.

The study that describes their findings was recently published in the Monthly Notices of the Royal Astronomical Society. For their study, which was led by David J Armstrong (co-lead of Warwick’s Habitability Global Research Group and a member of the Centre for Exoplanets and Habitability), the team also performed the first large-scale comparison of planet validation techniques that rely on machine learning.

As a subset of artificial intelligence research, machine learning consists of algorithms that automatically improve as they examine more data. They are especially useful in astrophysical research, where the amount of raw data is enormous and growing all the time. In many cases, this entails examining light curves from stars to detect periodic dips, which may be the result of an exoplanet passing in front of them relative to the observer (i.e., transiting).

Alternatively, the dips could be artifacts in the data or the result of interference. Sources include stars orbiting each other in a binary system, interference from a background object, or even slight errors in the camera. These false positives need to be separated from the rest in order to confirm the existence of exoplanets and select targets for follow-up observations.
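To make the idea of a periodic dip concrete, here is a minimal Python sketch of a simple phase-folding search on a synthetic light curve. It is an illustration only, not the Warwick team's algorithm or the Kepler pipeline. The function names, the box-shaped transit, the noise level, and the assumption that the first transit begins at time zero are all hypothetical choices made for this example.

```python
import random


def make_light_curve(period, depth, duration, span=30.0, n_points=2000,
                     noise=0.0005, seed=0):
    """Return (times, flux) for a synthetic star with box-shaped transits.

    Assumption: the first transit starts at t = 0, so the phase is known.
    """
    rng = random.Random(seed)
    times = [span * i / n_points for i in range(n_points)]
    flux = []
    for t in times:
        dip = depth if (t % period) < duration else 0.0
        flux.append(1.0 - dip + rng.gauss(0.0, noise))
    return times, flux


def folded_depth(times, flux, period, duration):
    """Fold at a trial period and compare mean flux outside vs. inside the window."""
    inside, outside = [], []
    for t, f in zip(times, flux):
        (inside if (t % period) < duration else outside).append(f)
    if not inside or not outside:
        return 0.0
    return sum(outside) / len(outside) - sum(inside) / len(inside)


def best_period(times, flux, trial_periods, duration):
    """Pick the trial period whose folded light curve shows the deepest dip."""
    return max(trial_periods, key=lambda p: folded_depth(times, flux, p, duration))


# Hypothetical planet: 3-day orbit, 1% dip lasting 0.12 days, 30 days of data.
times, flux = make_light_curve(period=3.0, depth=0.01, duration=0.12)
trial_periods = [p / 100 for p in range(100, 501)]  # 1.00 to 5.00 days
period = best_period(times, flux, trial_periods, duration=0.12)
depth = folded_depth(times, flux, period, duration=0.12)
print(f"best period: {period:.2f} days, depth: {depth:.4f}")
```

A search like this only flags a repeating dip. It cannot tell a planet from an eclipsing binary or an instrumental glitch, which is why a separate vetting step is needed.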

This is incredibly time-consuming work and requires that countless hours be put in by researchers and volunteer scientists. It’s because of this that researchers from Warwick’s Departments of Physics and Computer Science, in collaboration with scientists from The Alan Turing Institute, created a machine learning algorithm that can perform this very task with large samples of data.

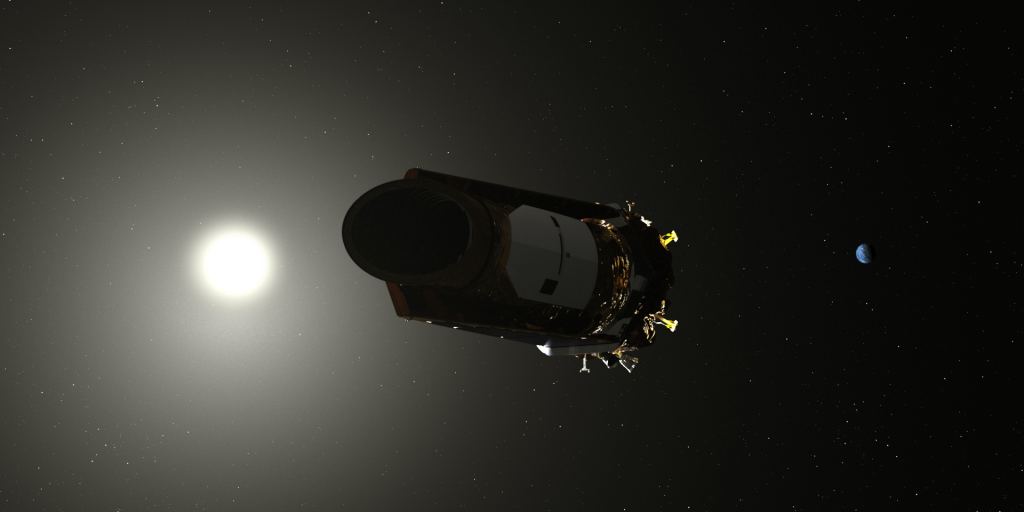

The research team used this algorithm to examine thousands of candidates found by NASA’s now-retired Kepler Space Telescope, which relied on the Transit Method to detect potential exoplanets. Using two data samples obtained by the mission (real planets vs. false positives) as a benchmark, the researchers applied the algorithm to a dataset of still-unconfirmed planets detected by Kepler.

The result was fifty newly confirmed planets, the first to be validated using machine learning. These ranged from Neptune-sized gas giants (or possibly Super-Earths) to mini-Earths, with orbits ranging from as short as a single day to as long as 200 days (about 6.5 months). Now that these planets have been confirmed, astronomers can prioritize them for further observations using still-operational telescopes.

The confirmation of these planets was especially significant because of the sizeable leap it represents. In the past, machine learning techniques have been used to rank candidates, but never to independently determine if a candidate was likely to be a planet, a key step in the planet verification process. As Dr. Armstrong explained:

“The algorithm we have developed lets us take fifty candidates across the threshold for planet validation, upgrading them to real planets. We hope to apply this technique to large samples of candidates from current and future missions like TESS and PLATO.

“In terms of planet validation, no-one has used a machine learning technique before. Machine learning has been used for ranking planetary candidates but never in a probabilistic framework, which is what you need to truly validate a planet. Rather than saying which candidates are more likely to be planets, we can now say what the precise statistical likelihood is. Where there is less than a 1% chance of a candidate being a false positive, it is considered a validated planet.”

Dr. Theo Damoulas of the University of Warwick Department of Computer Science, who is also a Turing Fellow and the Deputy Director of the Data Centric Engineering program at The Alan Turing Institute, added:

“Probabilistic approaches to statistical machine learning are especially suited for an exciting problem like this in astrophysics that requires incorporation of prior knowledge – from experts like Dr Armstrong – and quantification of uncertainty in predictions. A prime example when the additional computational complexity of probabilistic methods pays off significantly.”
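The validation threshold Dr. Armstrong describes can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the team's actual model. It uses a one-feature Gaussian classifier with Bayes' rule, and the feature values, candidate names, and the 50/50 prior are all made up for the example. Only the 1% false-positive cutoff comes from the quote above.

```python
import math
from statistics import mean, stdev


def gaussian_pdf(x, mu, sigma):
    """Probability density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def fit_class(values):
    """Summarize one labeled sample by its mean and standard deviation."""
    return mean(values), stdev(values)


def planet_probability(x, planet_params, fp_params, prior_planet=0.5):
    """Posterior P(planet | x) from Bayes' rule with Gaussian likelihoods.

    prior_planet is a hypothetical stand-in for expert prior knowledge.
    """
    p = gaussian_pdf(x, *planet_params) * prior_planet
    q = gaussian_pdf(x, *fp_params) * (1.0 - prior_planet)
    total = p + q
    return p / total if total > 0 else prior_planet


def validate(candidates, planet_params, fp_params, max_fp_probability=0.01):
    """Keep candidates whose false-positive probability is below the cutoff."""
    validated = {}
    for name, x in candidates.items():
        p_planet = planet_probability(x, planet_params, fp_params)
        if 1.0 - p_planet < max_fp_probability:
            validated[name] = p_planet
    return validated


# Made-up values of a single hypothetical feature for the two labeled samples.
confirmed_planets = [0.9, 1.1, 1.0, 0.8, 1.2, 1.05]
false_positives = [3.0, 2.6, 3.4, 2.9, 3.1, 2.8]
planet_params = fit_class(confirmed_planets)
fp_params = fit_class(false_positives)

# Made-up unconfirmed candidates: planet-like, ambiguous, and false-positive-like.
candidates = {"cand-A": 1.0, "cand-B": 1.7, "cand-C": 3.0}
validated = validate(candidates, planet_params, fp_params)
print(sorted(validated))
```

The point of the probabilistic framing is the middle case. A ranking would simply place the ambiguous candidate between the other two, while a probability makes it explicit that the candidate falls short of the 99% confidence needed for validation.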

In addition to being able to sort through volumes of data much faster than a human being, these algorithms have the added benefit of being completely automated. This makes them ideal for operational missions like the Transiting Exoplanet Survey Satellite (TESS), which recently completed its initial sky survey and is expected to yield thousands of exoplanet candidates.

However, the research team led by Dr. Armstrong and Dr. Damoulas emphasized that machine learning should be just one of the tools collectively used to validate the existence of exoplanets in the future. As Dr. Armstrong explained, almost a third of the exoplanets confirmed so far were validated using a single method (for the most part, the Transit Method or Radial Velocity Method), which is not ideal.

Incorporating machine learning is appealing for just this reason: it adds a layer of verification that is very quick, can function without a human controller, and allows astronomers to prioritize candidates faster. As Dr. Armstrong added:

“We still have to spend time training the algorithm, but once that is done it becomes much easier to apply it to future candidates. You can also incorporate new discoveries to progressively improve it.

“A survey like TESS is predicted to have tens of thousands of planetary candidates and it is ideal to be able to analyse them all consistently. Fast, automated systems like this that can take us all the way to validated planets in fewer steps let us do that efficiently.”

The team’s research was possible thanks to support provided by the UK Science and Technology Facilities Council (STFC), part of UK Research and Innovation, through an Ernest Rutherford Fellowship.

Further Reading: University of Warwick, MNRAS