
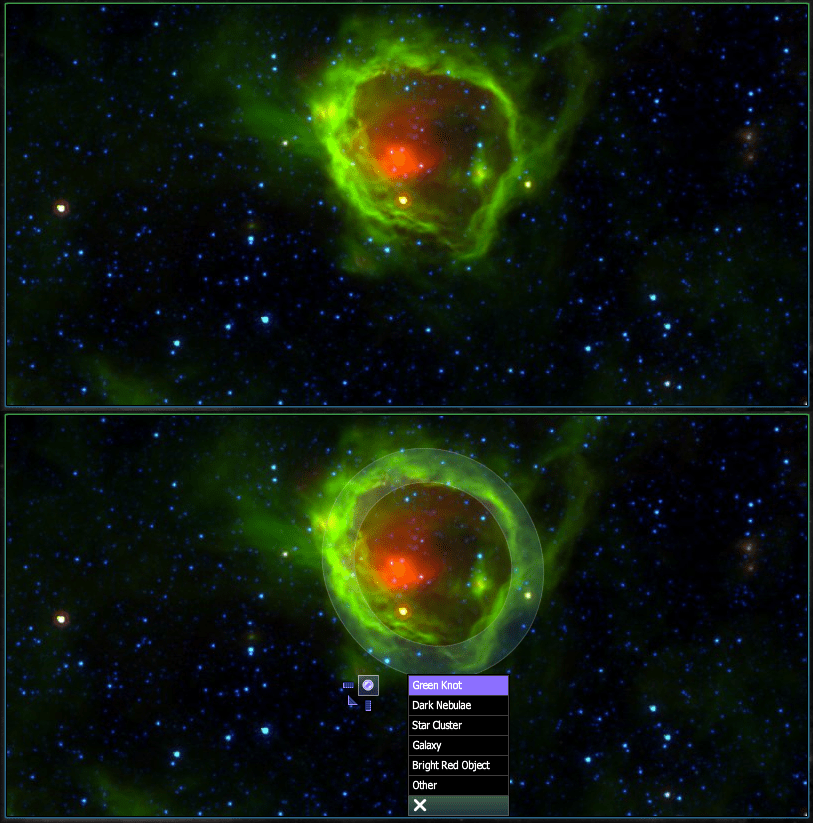

From the folks who brought you the addictive citizen science projects Galaxy Zoo and Moon Zoo (among others) comes yet another way to explore our Universe and help out scientists at the same time. The Milky Way Project invites members of the public to look at images from infrared surveys of our Milky Way and flag features such as gas bubbles, knots of gas and dust, and star clusters.

As with the other Zooniverse projects, the participation of the public is a core feature. Accompanying the Milky Way Project is a forum, called Milky Way Talk, where Zooniverse members – lovingly called “zooites” – can discuss the images they’ve cataloged. Users can submit images they find curious or just plain beautiful to the forum for discussion.

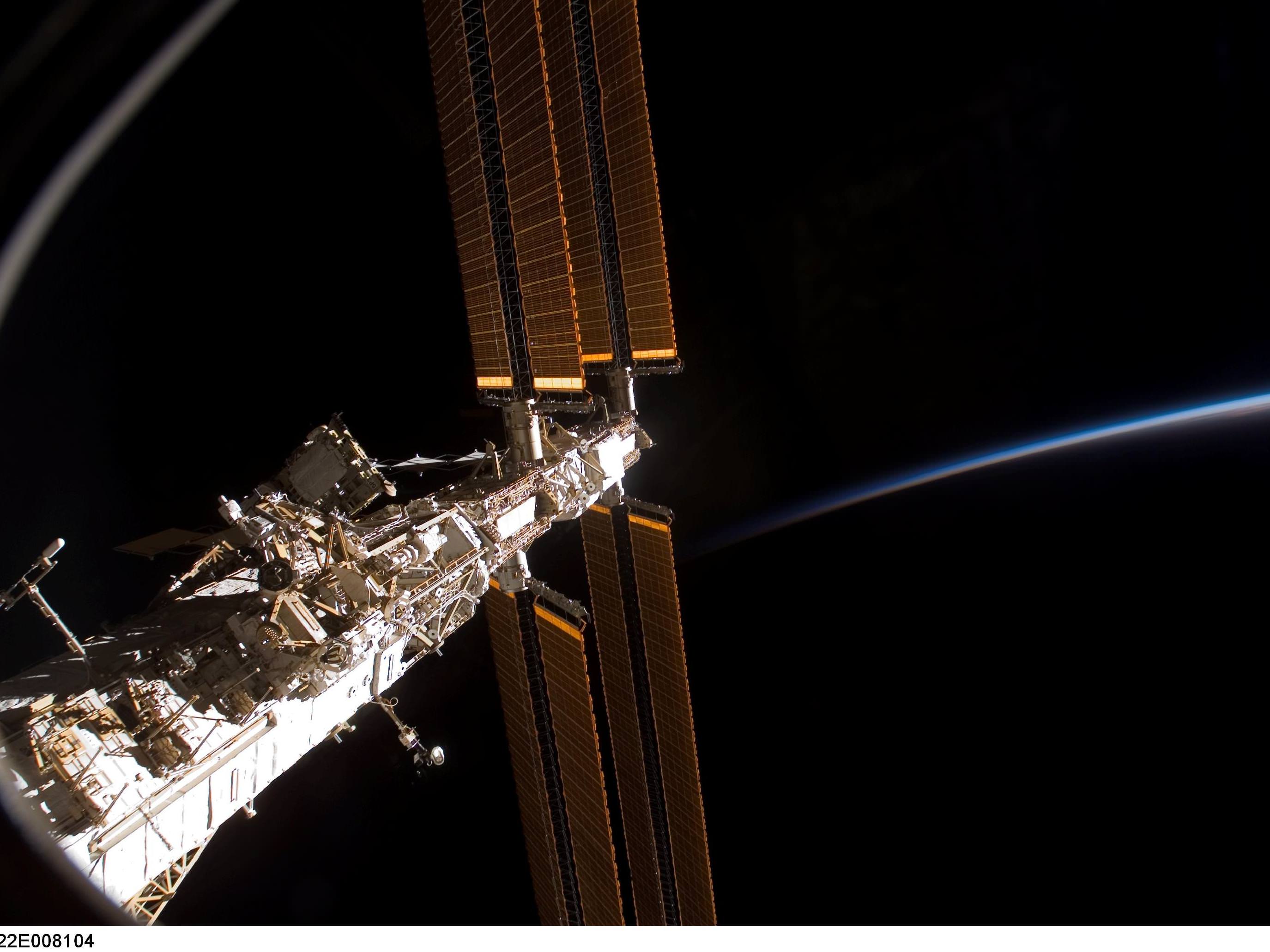

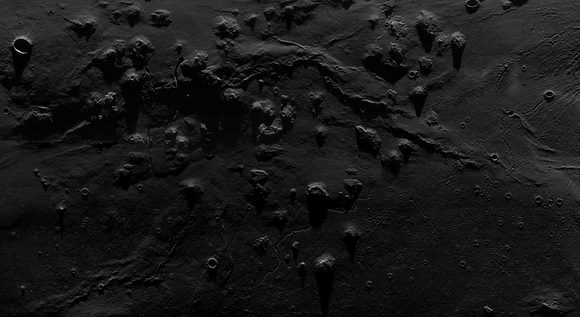

The Milky Way Project uses data from the Galactic Legacy Infrared Mid-Plane Survey Extraordinaire (GLIMPSE) and the Multiband Imaging Photometer for Spitzer Galactic Plane Survey (MIPSGAL). These two surveys have imaged the Milky Way in infrared light at different wavelengths: GLIMPSE at 3.6, 4.5, 5.8, and 8 microns, and MIPSGAL at 24 and 70 microns. In the infrared, things that don’t emit much visible light – such as large gas clouds excited by stellar radiation – are apparent in images.

The new project aims to catalog bubbles, star clusters, knots of gas, and dark nebulae. All of these objects are interesting in their own ways.

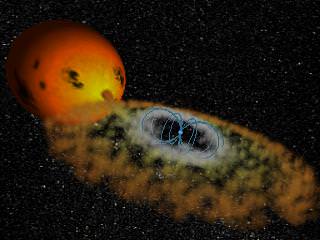

Bubbles – large structures of gas in the galactic plane – mark areas where young stars are altering the interstellar medium that surrounds them. The stars heat up the dust and/or ionize the gas around them, and the flow of particles from each star pushes the surrounding diffuse material out into bubble shapes.

The green knots are where the gas and dust are denser, and might be regions that contain stellar nurseries. Similarly, dark nebulae – nebulae that appear darker than the surrounding gas – are of interest to astronomers because they may also point to the formation of high-mass stars.

Star clusters and galaxies outside of the Milky Way may also be visible in some of the images. Though the cataloging of these objects isn’t the main focus of the project, zooites can flag them in the images for later discussion. Just like in the other Zooniverse projects, which use data from robotic surveys, there is always the chance that you will be the first person ever to look at something in one of the images. You could even be like Galaxy Zoo member Hanny and discover something that astronomers will spend telescope time looking at!

The GLIMPSE-MIPSGAL surveys were performed by the Spitzer Space Telescope. Over 440,000 images – all taken in the infrared – are in the catalog and need to be sifted through. This is a serious undertaking, one that cannot be accomplished by graduate students in astronomy alone.

In cataloging these bubbles for subsequent analysis, Milky Way Project members can help astronomers understand both the interstellar medium and the stars imaged by the surveys. The catalog will also help astronomers map the Milky Way’s star-forming regions.

As with the other Zooniverse projects, this newest addition relies on the human brain’s ability to pick out patterns. Diffuse or oddly shaped bubbles – such as those that appear “popped” or are elliptical – are difficult for a computer to analyze. So, it’s up to willing members of the public to help out the astronomy community. The Zooniverse community boasts over 350,000 members participating in its various projects.

Researchers have already done some cataloging and research on these gas bubbles. The Milky Way Project site references work by Churchwell et al., who cataloged over 600 of the bubbles and found that 75% of the bubbles they looked at were created by type B4-B9 stars, while O-B3 stars account for the remainder (for more on what these stellar types mean, click here).

A zoomable map that uses images from the surveys – and labels many of the bubbles already cataloged by the researchers – is available at Alien Earths.

For an extensive treatment of just how important these bubbles are to understanding stars and their formation, the paper “IR Dust Bubbles: Probing the Detailed Structure and Young Massive Stellar Populations of Galactic HII Regions” by Watson et al. is available here.

If you want to get cracking on drawing bubbles and cataloging interesting features of our Milky Way, take the tutorial and sign up today.

Sources: The Milky Way Project, arXiv, GLIMPSE