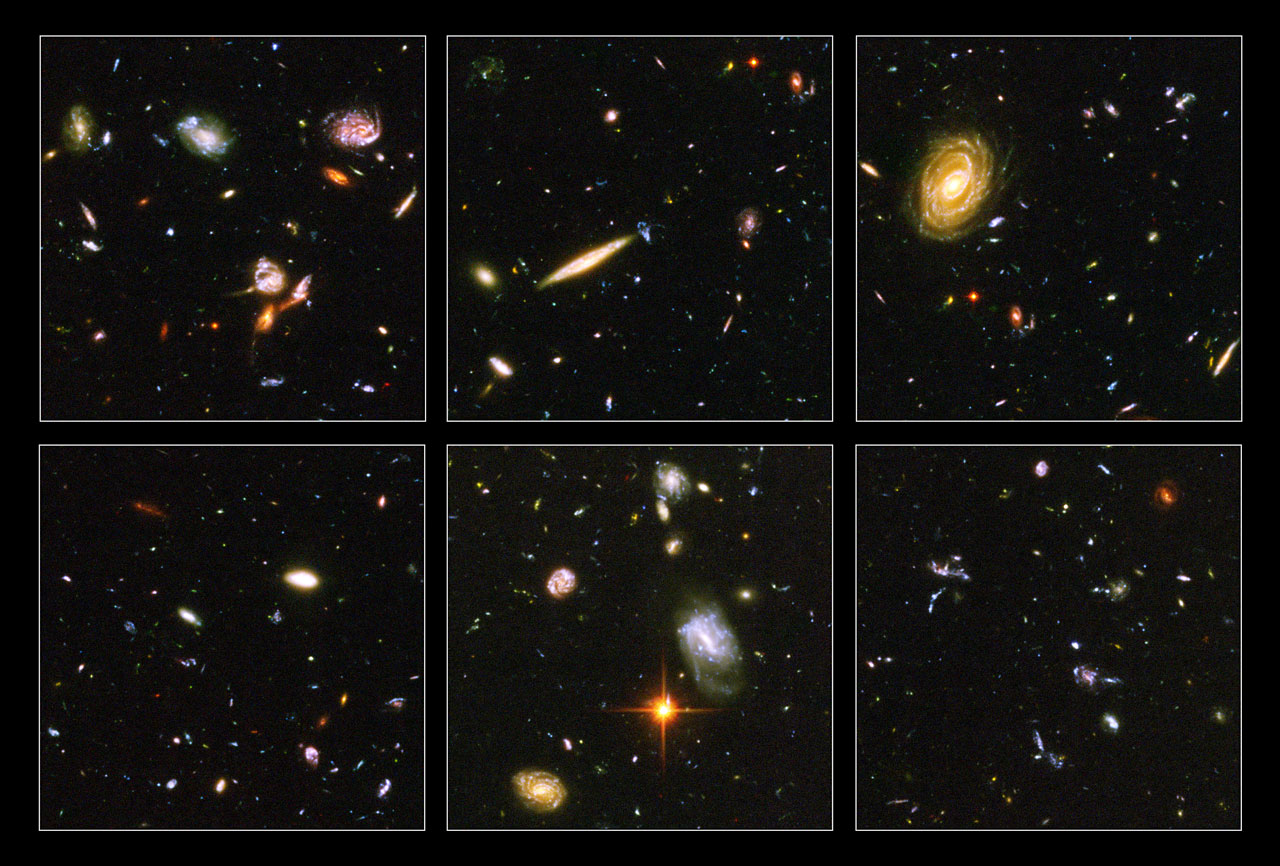

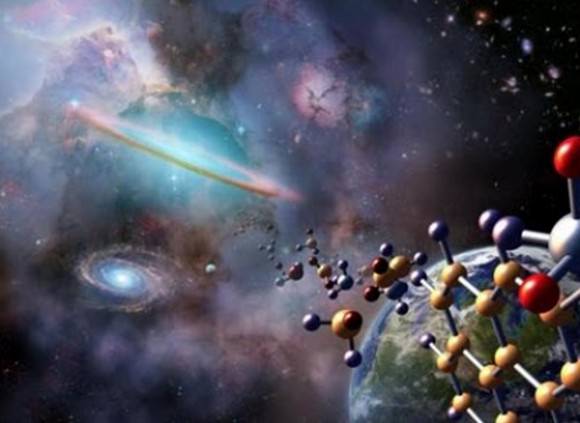

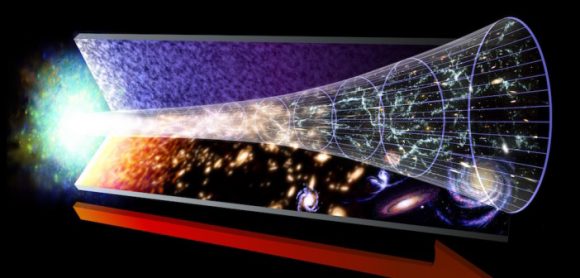

It is a well-known fact among astronomers and cosmologists that the farther into the Universe you look, the further back in time you are seeing. And the closer astronomers are able to see to the Big Bang, which took place 13.8 billion years ago, the more interesting the discoveries tend to become. It is these finds that teach us the most about the earliest periods of the Universe and its subsequent evolution.

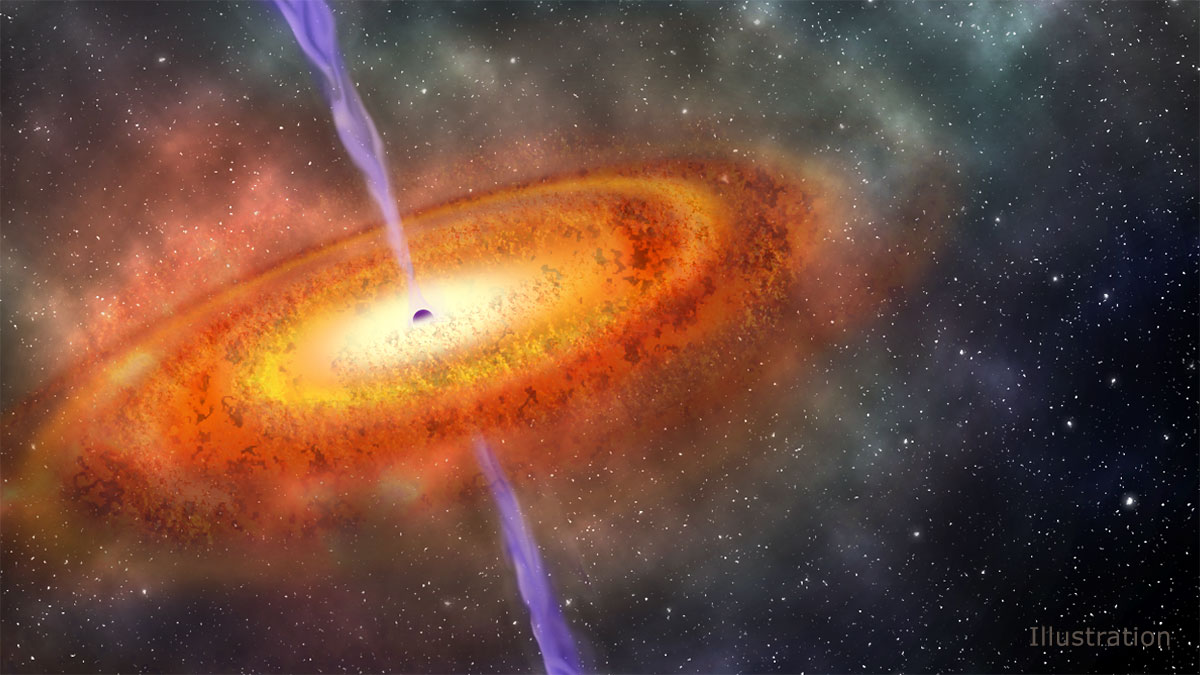

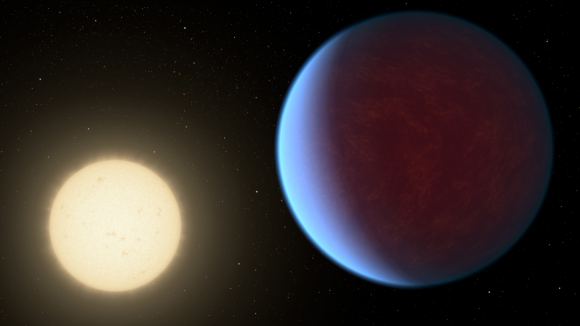

For instance, scientists using the Wide-field Infrared Survey Explorer (WISE) and the Magellan Telescopes recently observed the earliest Supermassive Black Hole (SMBH) to date. According to the discovery team’s study, this black hole is roughly 800 million times the mass of our Sun and is located more than 13 billion light years from Earth. This makes it the most distant, and youngest, SMBH observed to date.

The study, titled “An 800-million-solar-mass black hole in a significantly neutral Universe at a redshift of 7.5”, recently appeared in the journal Nature. Led by Eduardo Bañados, a researcher from the Carnegie Institution for Science, the team included members from NASA’s Jet Propulsion Laboratory, the Max Planck Institute for Astronomy, the Kavli Institute for Astronomy and Astrophysics, the Las Cumbres Observatory, and multiple universities.

Like other actively feeding SMBHs, this particular discovery (designated J1342+0928) is a quasar, a class of extremely bright objects that consist of a black hole accreting matter at the center of a massive galaxy. The object was discovered during the course of a survey for distant objects, which combined infrared data from the WISE mission with ground-based surveys. The team then followed up with data from the Carnegie Observatories’ Magellan telescopes in Chile.

As with all distant cosmological objects, J1342+0928’s distance was determined by measuring its redshift. By measuring how much the wavelength of an object’s light is stretched by the expansion of the Universe before it reaches Earth, astronomers are able to determine how far it had to travel to get here. In this case, the quasar had a redshift of 7.54, which means that it took more than 13 billion years for its light to reach us.
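To make the link between redshift and travel time concrete, here is a minimal sketch of the arithmetic. It assumes a flat Universe containing only matter and dark energy, with a Hubble constant of 67.7 km/s/Mpc and a matter density of 0.31. These values and the function names are illustrative assumptions, not figures taken from the study, and the team's own calculation may differ in detail.

```python
import math

# Assumed cosmological parameters (not from the article): flat Lambda-CDM,
# with radiation neglected.
H0_KM_S_MPC = 67.7          # Hubble constant in km/s/Mpc
OMEGA_M = 0.31              # matter density parameter
OMEGA_L = 1.0 - OMEGA_M     # dark energy density (flatness assumed)

KM_PER_MPC = 3.0856775814913673e19
SECONDS_PER_GYR = 3.15576e16  # one billion Julian years


def hubble_time_gyr(h0=H0_KM_S_MPC):
    """Return 1/H0 expressed in billions of years."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR


def age_at_redshift_gyr(z):
    """Age of the Universe at redshift z (closed form for flat matter + Lambda)."""
    x = math.sqrt(OMEGA_L / OMEGA_M) * (1.0 + z) ** -1.5
    return 2.0 / (3.0 * math.sqrt(OMEGA_L)) * hubble_time_gyr() * math.asinh(x)


def observed_wavelength_nm(rest_nm, z):
    """Light emitted at rest_nm arrives stretched by a factor of (1 + z)."""
    return rest_nm * (1.0 + z)


Z_QUASAR = 7.54             # redshift quoted in the article
LYMAN_ALPHA_NM = 121.567    # rest wavelength of hydrogen's Lyman-alpha line

age_then = age_at_redshift_gyr(Z_QUASAR)
age_now = age_at_redshift_gyr(0.0)

print(f"Age of the Universe at z = {Z_QUASAR}: {age_then:.2f} Gyr")
print(f"Light travel time: {age_now - age_then:.2f} Gyr")
print(f"Fraction of current age: {age_then / age_now:.1%}")
print(f"Lyman-alpha observed at: {observed_wavelength_nm(LYMAN_ALPHA_NM, Z_QUASAR):.0f} nm")
```

With these assumed parameters, the sketch gives an age of roughly 0.69 billion years at a redshift of 7.54 and a light travel time of just over 13 billion years. It also shows why infrared instruments are needed: ultraviolet light emitted by hydrogen at about 122 nanometers arrives stretched to more than 1,000 nanometers.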

As Xiaohui Fan of the University of Arizona’s Steward Observatory (and a co-author on the study) explained in a Carnegie press release:

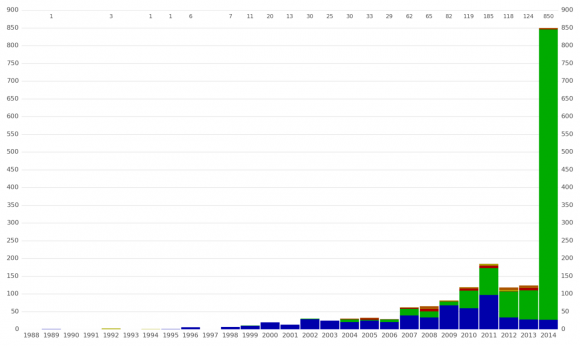

“This great distance makes such objects extremely faint when viewed from Earth. Early quasars are also very rare on the sky. Only one quasar was known to exist at a redshift greater than seven before now, despite extensive searching.”

Given its age and mass, the discovery of this quasar was quite the surprise for the study team. As Daniel Stern, an astrophysicist at NASA’s Jet Propulsion Laboratory and a co-author on the study, indicated in a NASA press release, “This black hole grew far larger than we expected in only 690 million years after the Big Bang, which challenges our theories about how black holes form.”
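A rough calculation helps show why the timing is so tight. The sketch below is not the study team's analysis. It assumes the black hole fed continuously at the Eddington limit (the rate at which the outward pressure of radiation balances gravity) and converted 10% of the infalling mass into light, a commonly used illustrative value. The function names are hypothetical.

```python
import math

# Physical constants in SI units
G = 6.67430e-11              # gravitational constant
C = 2.99792458e8             # speed of light
M_PROTON = 1.67262192e-27    # proton mass
SIGMA_T = 6.6524587e-29      # Thomson scattering cross-section
SECONDS_PER_MYR = 3.15576e13


def efolding_time_myr(efficiency=0.1):
    """Time for the mass to grow by a factor of e at the Eddington limit.

    `efficiency` is the assumed fraction of accreted mass radiated away.
    """
    t_edd_s = SIGMA_T * C / (4.0 * math.pi * G * M_PROTON)
    return t_edd_s / SECONDS_PER_MYR * efficiency / (1.0 - efficiency)


def required_seed_mass(final_mass, growth_time_myr, efficiency=0.1):
    """Seed mass needed to reach final_mass after growth_time_myr of
    uninterrupted Eddington-limited (exponential) growth."""
    return final_mass * math.exp(-growth_time_myr / efolding_time_myr(efficiency))


FINAL_MASS_MSUN = 8e8        # mass quoted in the article, in solar masses
AVAILABLE_MYR = 690.0        # time since the Big Bang quoted in the article

print(f"e-folding time: {efolding_time_myr():.0f} Myr")
print(f"Seed mass needed: {required_seed_mass(FINAL_MASS_MSUN, AVAILABLE_MYR):.0f} solar masses")
```

Under these assumptions, the mass grows by a factor of e roughly every 50 million years. Even if the black hole had started feeding at the moment of the Big Bang and never paused, it would have needed a seed of several hundred solar masses to reach 800 million solar masses in time. Since the first stars could not have formed that early, the real requirement is stricter still.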

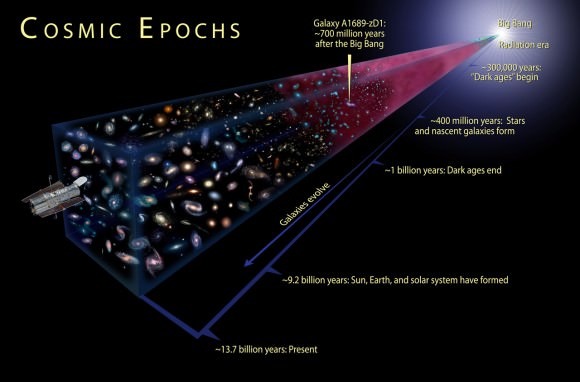

Essentially, this quasar existed at a time when the Universe was just beginning to emerge from what cosmologists call the “Dark Ages”. During this period, which lasted from roughly 380,000 years to 150 million years after the Big Bang, the Universe was filled with neutral hydrogen and contained no stars or galaxies to produce new light. As a result, there is very little radiation from this period for our current instruments to detect, hence the name.

The Universe remained in this state, without any luminous sources, until gravity condensed matter into the first stars and galaxies. The period that followed is known as the “Reionization Epoch”, which lasted from 150 million to 1 billion years after the Big Bang and was characterized by the first stars, galaxies and quasars forming. It is so named because the energy released by these ancient galaxies ionized the neutral hydrogen of the Universe.

Once the Universe became reionized, photons could travel freely throughout space and the Universe officially became transparent to light. This is what makes the discovery of this quasar so interesting. As the team observed, much of the hydrogen surrounding it is neutral, which means it is not only the most distant quasar ever observed, but also the only example of a quasar that existed before the Universe became reionized.

In other words, J1342+0928 existed during a major transition period for the Universe, which happens to be one of the current frontiers of astrophysics. As if this wasn’t enough, the team was also confounded by the object’s mass. For a black hole to have become so massive during this early period of the Universe, there would have to be special conditions to allow for such rapid growth.

What these conditions are, however, remains a mystery. Whatever the case may be, this newly found SMBH appears to be consuming matter at the center of a galaxy at an astounding rate. And while its discovery has raised many questions, it is anticipated that the deployment of future telescopes will reveal more about this quasar and its cosmological period. As Stern said:

“With several next-generation, even-more-sensitive facilities currently being built, we can expect many exciting discoveries in the very early universe in the coming years.”

These next-generation missions include the European Space Agency’s Euclid mission and NASA’s Wide-field Infrared Survey Telescope (WFIRST). Whereas Euclid will study objects located 10 billion years in the past in order to measure how dark energy influenced cosmic evolution, WFIRST will perform wide-field near-infrared surveys to measure the light coming from a billion galaxies.

Both missions are expected to reveal more objects like J1342+0928. At present, scientists predict that there are only 20 to 100 quasars as bright and as distant as J1342+0928 in the sky. As such, they were most pleased with this discovery, which is expected to provide us with fundamental information about the Universe when it was only 5% of its current age.

Further Reading: NASA, Carnegie Science, Nature