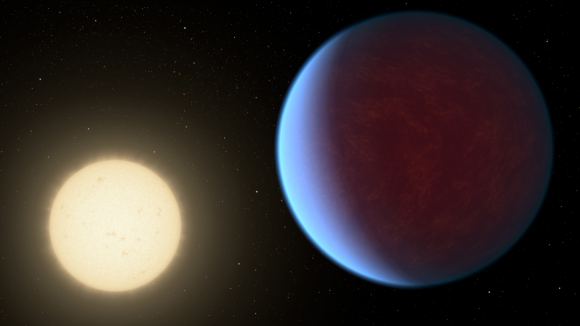

The search for extra-solar planets has turned up some very interesting discoveries. Aside from planets that are more-massive versions of their Solar System counterparts (aka. Super-Jupiters and Super-Earths), there have been plenty of planets that straddle the line between classifications. And at times, follow-up observations have revealed that a known planet shares its system with others.

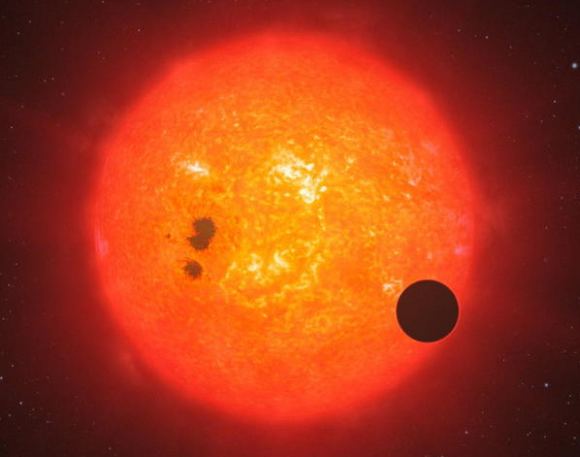

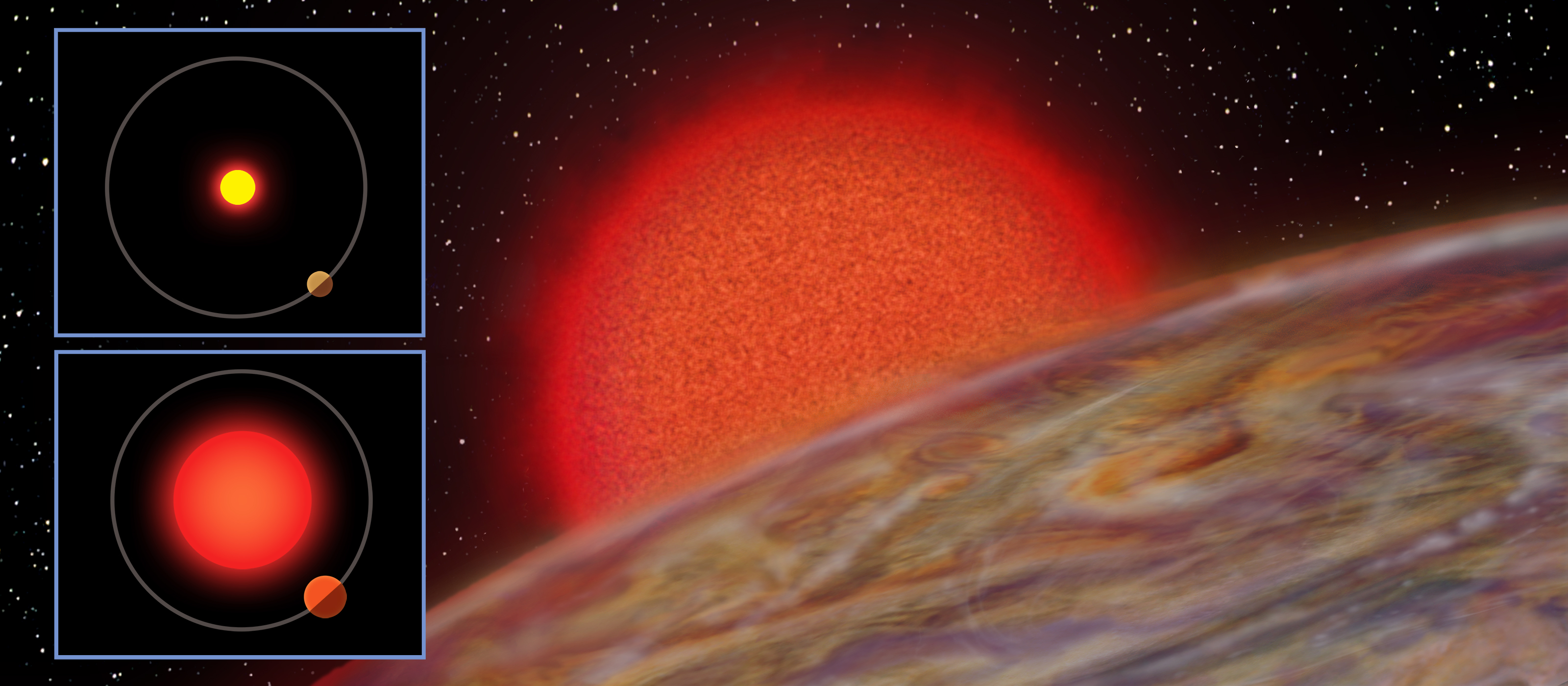

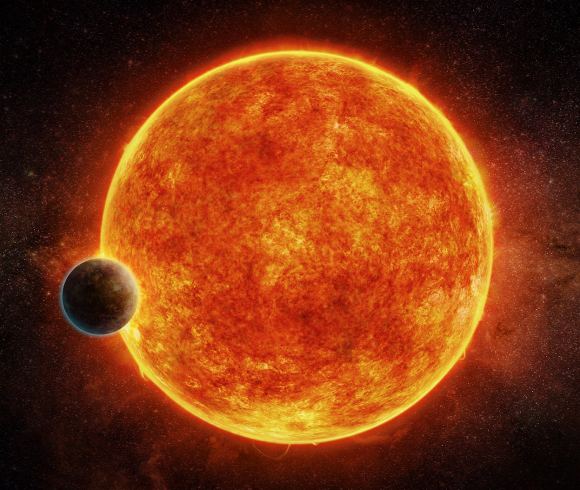

This was certainly the case when it came to K2-18, a red dwarf star system located about 111 light-years from Earth in the constellation Leo. Using the ESO’s High Accuracy Radial Velocity Planet Searcher (HARPS), an international team of astronomers was recently examining a previously-discovered exoplanet in this system (K2-18b) when they noted the existence of a second exoplanet.

The study that details their findings – “Characterization of the K2-18 multi-planetary system with HARPS” – is scheduled to be published in the journal Astronomy and Astrophysics. The research was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) and the Institute for Research on Exoplanets – a consortium of scientists and students from the University of Montreal and McGill University.

Led by Ryan Cloutier, a PhD student at the University of Toronto’s Center for Planet Science and the University of Montréal’s Institute for Research on Exoplanets (iREx), the team included members from the University of Geneva, the University Grenoble Alpes, and the University of Porto. Together, the team conducted a study of K2-18b in the hopes of characterizing this exoplanet and determining its true nature.

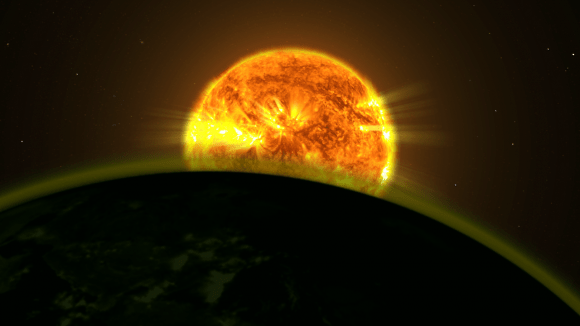

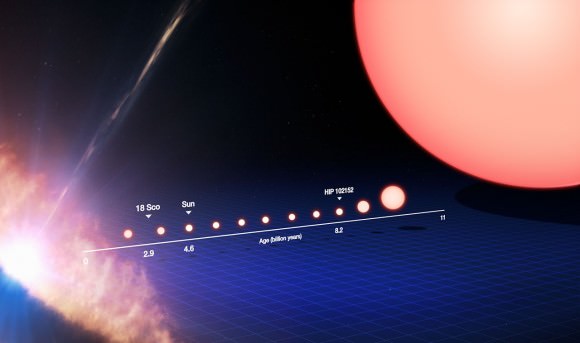

When K2-18b was first discovered in 2015, it was found to be orbiting within the star’s habitable zone (aka. “Goldilocks Zone”). The team responsible for the discovery also determined that, given its distance from its star, K2-18b’s surface receives a similar amount of radiation to Earth’s. However, the initial estimates of the planet’s size left astronomers uncertain as to whether the planet was a Super-Earth or a mini-Neptune.

For this reason, Cloutier and his team sought to characterize the planet’s mass, a necessary step towards determining its atmospheric properties and bulk composition. To this end, they obtained radial velocity measurements of K2-18 using the HARPS spectrograph. These measurements allowed them to place mass constraints on the previously discovered exoplanet, but also revealed something extra.

As Ryan Cloutier explained in a University of Toronto Scarborough (UTSC) press statement:

“Being able to measure the mass and density of K2-18b was tremendous, but to discover a new exoplanet was lucky and equally exciting… If you can get the mass and radius, you can measure the bulk density of the planet and that can tell you what the bulk of the planet is made of.”

Essentially, their radial velocity measurements revealed that K2-18b has a mass of about 8.0 ± 1.9 Earth masses and a bulk density of 3.3 ± 1.2 g/cm³. This is consistent with a terrestrial (aka. rocky) planet with a significant gaseous envelope and a water mass fraction that is equal to or less than 50%. In other words, it is either a Super-Earth with a small gaseous atmosphere (like Earth’s) or a “water world” with a thick layer of ice on top.
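To make the mass, radius and density relationship concrete, here is a minimal Python sketch. It assumes a spherical planet and uses standard values for Earth's mass and radius; the function names are hypothetical and chosen only for illustration. Since the article quotes the mass and density but not the radius, the sketch works backwards to the radius those two central values imply, which comes out at a little over twice Earth's radius.

```python
import math

# Standard reference values (not taken from the article).
EARTH_MASS_KG = 5.972e24
EARTH_RADIUS_M = 6.371e6


def bulk_density_g_cm3(mass_earth, radius_earth):
    """Bulk density of a spherical planet, in g/cm^3."""
    mass_kg = mass_earth * EARTH_MASS_KG
    radius_m = radius_earth * EARTH_RADIUS_M
    volume_m3 = (4.0 / 3.0) * math.pi * radius_m ** 3
    return (mass_kg / volume_m3) / 1000.0  # kg/m^3 -> g/cm^3


def implied_radius_earth(mass_earth, density_g_cm3):
    """Radius (in Earth radii) implied by a given mass and bulk density."""
    mass_kg = mass_earth * EARTH_MASS_KG
    volume_m3 = mass_kg / (density_g_cm3 * 1000.0)
    radius_m = (3.0 * volume_m3 / (4.0 * math.pi)) ** (1.0 / 3.0)
    return radius_m / EARTH_RADIUS_M


# Central values quoted for K2-18b: 8.0 Earth masses, 3.3 g/cm^3.
radius = implied_radius_earth(8.0, 3.3)
print(f"Implied radius: {radius:.2f} Earth radii")

# Sanity check: plugging the radius back in recovers the quoted density.
print(f"Density check: {bulk_density_g_cm3(8.0, radius):.2f} g/cm^3")
```

The sketch ignores the quoted uncertainties (± 1.9 Earth masses, ± 1.2 g/cm³), so the result should be read as a rough consistency check rather than a measurement.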

They also found evidence for a second “warm” Super-Earth named K2-18c, which has a mass of 7.5 ± 1.3 Earth masses, an orbital period of 9 days, and a semi-major axis roughly 2.4 times smaller than that of K2-18b. After re-examining the original light curves obtained from K2-18, they concluded that K2-18c had not been detected in transit because its orbit does not lie in the same plane as K2-18b’s. As Cloutier described the discovery:

“When we first threw the data on the table we were trying to figure out what it was. You have to ensure the signal isn’t just noise, and you need to do careful analysis to verify it, but seeing that initial signal was a good indication there was another planet… It wasn’t a eureka moment because we still had to go through a checklist of things to do in order to verify the data. Once all the boxes were checked it sunk in that, wow, this actually is a planet.”
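The orbital figures quoted for K2-18c can be cross-checked with Kepler's third law, which says that for planets around the same star the orbital period scales as the semi-major axis to the power 3/2. The short Python sketch below applies that scaling to the rounded numbers in this article (a 9-day period and an axis ratio of about 2.4); the function name is hypothetical, and the resulting period for K2-18b, a bit over a month, is an implication of those rounded values rather than a figure quoted here.

```python
def outer_period_days(inner_period_days, axis_ratio):
    """Period of the outer planet via Kepler's third law (P proportional to a**1.5).

    axis_ratio is the outer semi-major axis divided by the inner one.
    Both planets are assumed to orbit the same star and to be much less
    massive than it.
    """
    return inner_period_days * axis_ratio ** 1.5


# Rounded values from the article: K2-18c has a 9-day period and a
# semi-major axis about 2.4 times smaller than K2-18b's.
period_b = outer_period_days(9.0, 2.4)
print(f"Implied period of K2-18b: {period_b:.1f} days")
```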

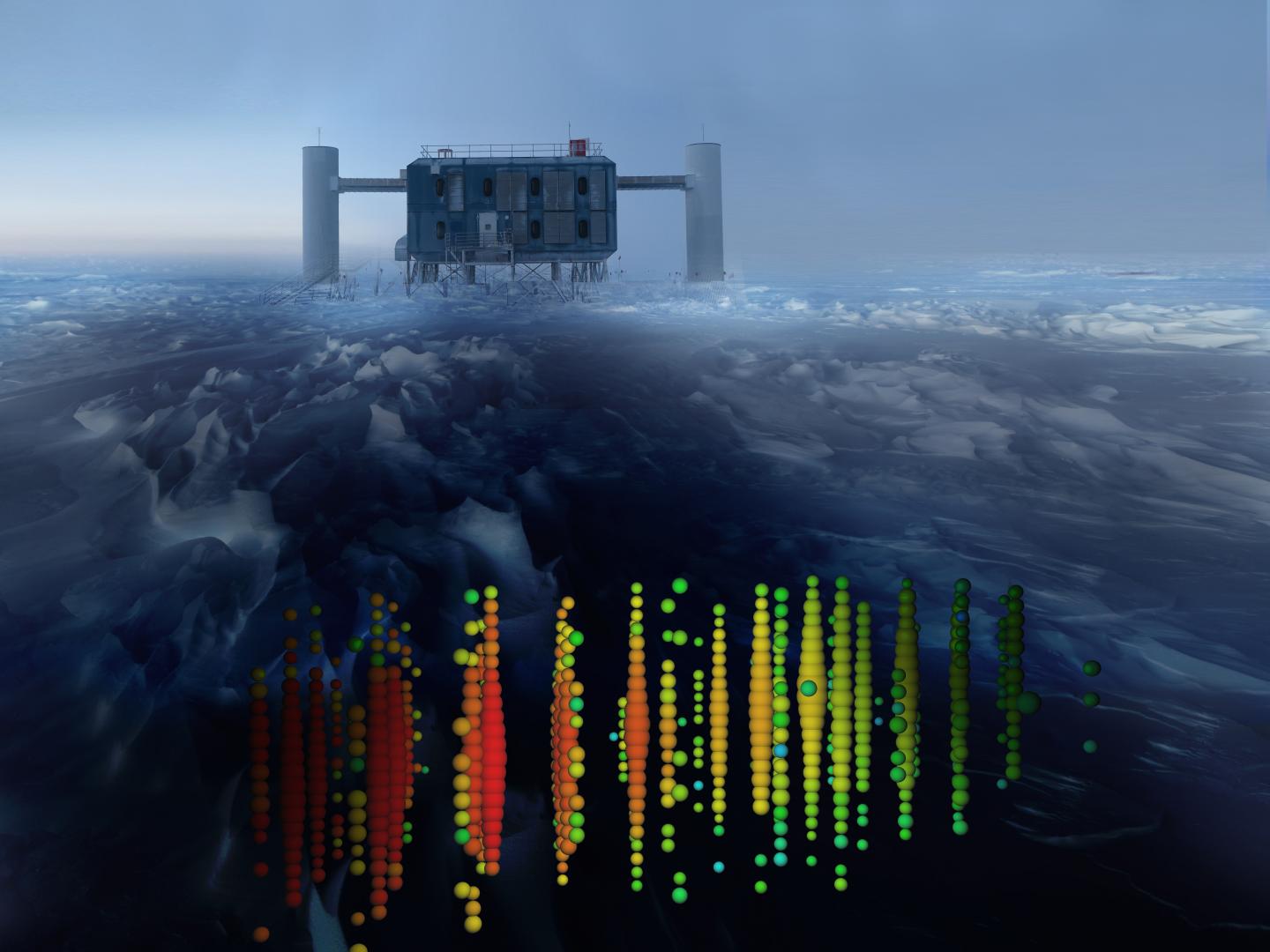

Unfortunately, the newly-discovered K2-18c orbits too closely to its star for it to be within its habitable zone. However, K2-18b may still be habitable, though that depends on its bulk composition. In the end, this system will benefit from additional surveys that will more than likely involve NASA’s James Webb Space Telescope (JWST) – which is scheduled for launch in 2019.

These surveys are expected to resolve the main remaining mystery about this planet: whether it is Earth-like or a “water world”. “With the current data, we can’t distinguish between those two possibilities,” said Cloutier. “But with the James Webb Space Telescope (JWST) we can probe the atmosphere and see whether it has an extensive atmosphere or it’s a planet covered in water.”

As René Doyon – the principal investigator for the Near-Infrared Imager and Slitless Spectrograph (NIRISS), the Canadian Space Agency instrument on board JWST, and a co-author on the paper – explained:

“There’s a lot of demand to use this telescope, so you have to be meticulous in choosing which exoplanets to look at. K2-18b is now one of the best targets for atmospheric study, it’s going to the near top of the list.”

The discovery of this second Super-Earth in the K2-18 system is yet another indication of how prevalent multi-planet systems are around M-type (red dwarf) stars. The proximity of this system, which has at least one planet with a thick atmosphere, also makes it well-suited to studies that will teach astronomers more about the nature of exoplanet atmospheres.

Expect to hear more about this star and its planetary system in the coming years!

Further Reading: University of Toronto Scarborough, Astronomy and Astrophysics