Artificial intelligence and machine learning have become ubiquitous, with applications ranging from data analysis, cybersecurity, and pharmaceutical development to music composition and artistic renderings. In recent years, large language models (LLMs) have also emerged, adding human interaction and writing to the long list of applications. These include ChatGPT, an LLM that has had a profound impact since it was introduced less than two years ago. It has sparked considerable debate (and controversy) about AI’s potential uses and implications.

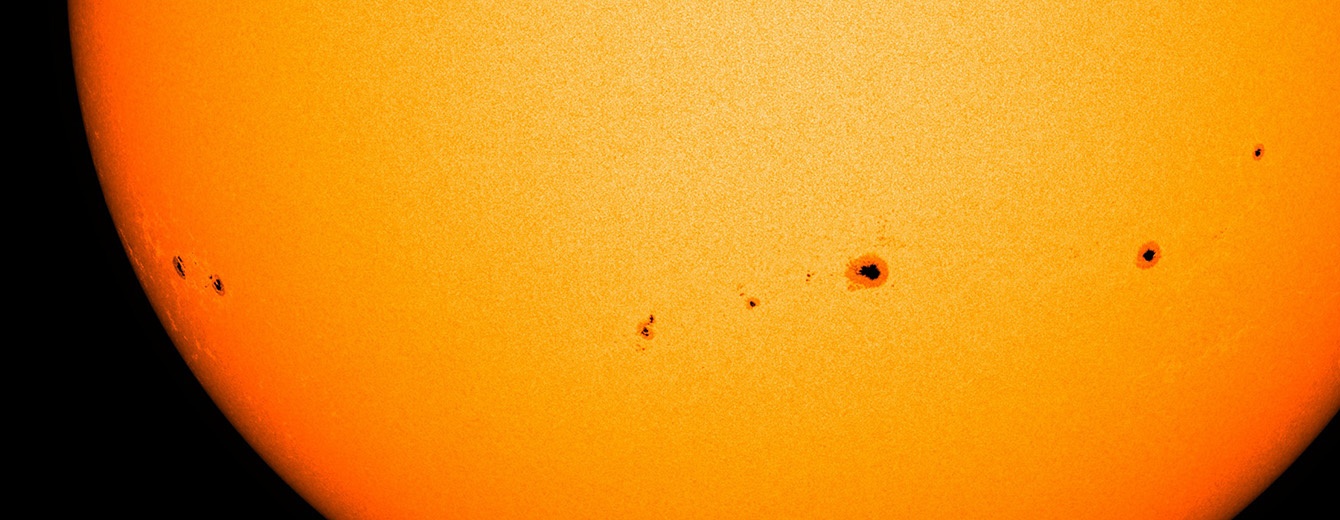

Astronomy has also benefitted immensely from machine learning, which is used to sort through massive volumes of data to look for signs of planetary transits, correct for atmospheric interference, and find patterns in the noise. According to an international team of astrophysicists, this may just be the beginning of what AI could do for astronomy. In a recent study, the team fine-tuned a Generative Pre-trained Transformer (GPT) model using observations of astronomical objects. In the process, they demonstrated that GPT models can effectively assist with scientific research.
