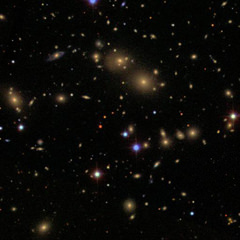

In 1993, the Hubble Space Telescope snapped a close-up of the nucleus of the Andromeda galaxy, M31, and found that it is double.

In the 15+ years since, dozens of papers have been written about it, with titles like "The stellar population of the decoupled nucleus in M 31", "Accretion Processes in the Nucleus of M31", and "The Origin of the Young Stars in the Nucleus of M31".

And now there's a paper that seems, at last, to explain the observations. The cause is, apparently, a complex interplay of gravity, angular momentum, and star formation.


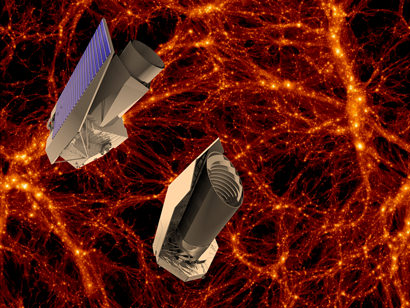

It is now reasonably well understood how the supermassive black holes (SMBHs) thought to lie in the nuclei of nearly all large galaxies can snack on stars, gas, and dust that come within about a third of a light-year (at these distances, magnetic fields are very effective at helping this ordinary, baryonic matter shed its angular momentum).

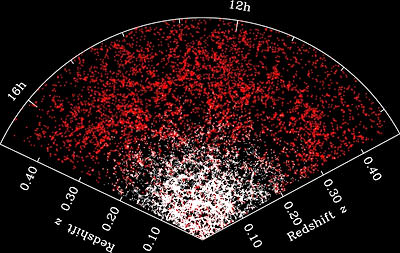

Also, disturbances from collisions with other galaxies, and gravitational interactions among the matter within a galaxy, can easily bring gas to distances of about 10 to 100 parsecs (30 to 300 light-years) from an SMBH.

But how does the SMBH snare baryonic matter that lies between a tenth of a parsec and ~10 parsecs away? Why doesn't that matter just settle into more-or-less stable orbits at these distances? After all, the local magnetic fields are too weak to remove much of its angular momentum (except over very long timescales), and collisions and close encounters are too rare (these certainly work over timescales of ~billions of years, as the distributions of stars in globular clusters show).
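As a rough check on the distances quoted above: one parsec is about 3.26 light-years, so a tenth of a parsec is about a third of a light-year (matching the inner figure given earlier), 10 parsecs is about 33 light-years, and 100 parsecs is about 330. The short Python sketch below does that conversion. The constant names, the `pc_to_ly` helper, and the zone labels are illustrative additions for this article, not anything taken from the Hopkins and Quataert paper.

```python
import math

# Illustrative constants (not from the paper): the parsec is defined as
# 648000/pi astronomical units, and a Julian light-year is about 63241.077 au.
AU_PER_PC = 648000 / math.pi
AU_PER_LY = 63241.077
LY_PER_PC = AU_PER_PC / AU_PER_LY  # roughly 3.26 light-years per parsec


def pc_to_ly(parsecs: float) -> float:
    """Convert a distance in parsecs to light-years (hypothetical helper)."""
    return parsecs * LY_PER_PC


# Radial boundaries around the SMBH mentioned in the text, in parsecs.
# The labels are paraphrases for illustration only.
zones_pc = [
    ("inner edge of the hard-to-feed zone", 0.1),
    ("outer edge of the hard-to-feed zone", 10.0),
    ("outer reach of galaxy-scale gas inflows", 100.0),
]

for label, pc in zones_pc:
    print(f"{label}: {pc:g} pc is about {pc_to_ly(pc):.1f} light-years")
```

Running it simply prints each boundary in both units; the point is that the "puzzle zone" spans roughly a third of a light-year out to a few tens of light-years.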

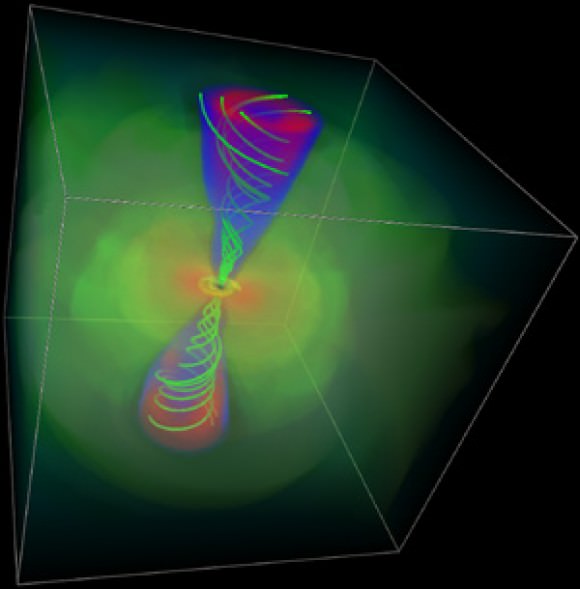

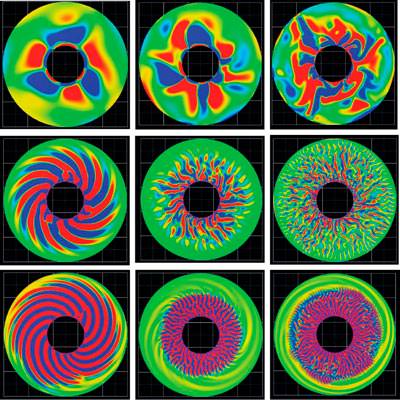

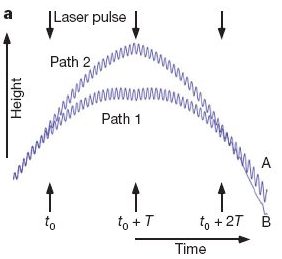

That’s where new simulations by Philip Hopkins and Eliot Quataert, both of the University of California, Berkeley, come into play. Their computer models show that at these intermediate distances, gas and stars form separate, lopsided disks that are off-center with respect to the black hole. The two disks are tilted with respect to one another, allowing the stars to exert a drag on the gas that slows its swirling motion and brings it closer to the black hole.

The new work is theoretical; however, Hopkins and Quataert note that several galaxies seem to host disks of elderly stars that are lopsided with respect to the SMBH. The best-studied of these is in M31.

Hopkins and Quataert now suggest that these old, off-center disks are the fossils of the stellar disks generated by their models. In their youth, such disks helped drive gas into black holes, they say.

The new study "is interesting in that it may explain such oddball [stellar disks] by a common mechanism which has larger implications, such as fueling supermassive black holes," says Tod Lauer of the National Optical Astronomy Observatory in Tucson. "The fun part of their work," he adds, is that it unifies "the very large-scale black hole energetics and fueling with the small scale."

Off-center stellar disks are difficult to observe because they lie relatively close to the brilliant fireworks generated by supermassive black holes. But searching for such disks could become a new strategy for hunting supermassive black holes in galaxies not known to house them, Hopkins says.

Sources: ScienceNews, “The Nuclear Stellar Disk in Andromeda: A Fossil from the Era of Black Hole Growth”, Hopkins, Quataert, to be published in MNRAS (arXiv preprint), AGN Fueling: Movies.