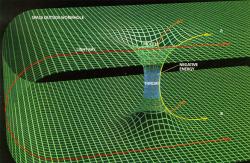

Braneworld challenges Einstein’s general relativity. Image credit: NASA

Scientists have been intrigued for years by the possibility that there are dimensions beyond the three spatial dimensions we humans can perceive. Now researchers from Duke and Rutgers universities think there’s a way to test a five-dimensional theory of gravity (four spatial dimensions plus time) that competes with Einstein’s General Theory of Relativity. The extra dimension should produce effects in the cosmos that satellites scheduled to launch in the next few years could detect.

Scientists at Duke and Rutgers universities have developed a mathematical framework they say will enable astronomers to test a new five-dimensional theory of gravity that competes with Einstein’s General Theory of Relativity.

Charles R. Keeton of Rutgers and Arlie O. Petters of Duke base their work on a recent theory called the type II Randall-Sundrum braneworld gravity model. The theory holds that the visible universe is a membrane (hence “braneworld”) embedded within a larger universe, much like a strand of filmy seaweed floating in the ocean. The “braneworld universe” has five dimensions — four spatial dimensions plus time — compared with the four dimensions — three spatial, plus time — laid out in the General Theory of Relativity.

The framework Keeton and Petters developed predicts certain cosmological effects that, if observed, should help scientists validate the braneworld theory. The observations, they said, should be possible with satellites scheduled to launch in the next few years.

If the braneworld theory proves to be true, “this would upset the applecart,” Petters said. “It would confirm that there is a fourth dimension to space, which would create a philosophical shift in our understanding of the natural world.”

The scientists’ findings appeared May 24, 2006, in the online edition of the journal Physical Review D. Keeton is an astronomy and physics professor at Rutgers, and Petters is a mathematics and physics professor at Duke. Their research is funded by the National Science Foundation.

The Randall-Sundrum braneworld model — named for its originators, physicists Lisa Randall of Harvard University and Raman Sundrum of Johns Hopkins University — provides a mathematical description of how gravity shapes the universe that differs from the description offered by the General Theory of Relativity.

Keeton and Petters focused on one particular gravitational consequence of the braneworld theory that distinguishes it from Einstein’s theory.

The braneworld theory predicts that relatively small black holes created in the early universe have survived to the present. These black holes, with masses similar to that of a tiny asteroid, would make up part of the universe’s “dark matter.” As the name suggests, dark matter does not emit or reflect light, but it does exert a gravitational force.

The General Theory of Relativity, on the other hand, predicts that such primordial black holes would have evaporated through Hawking radiation by now and no longer exist.

“When we estimated how far braneworld black holes might be from Earth, we were surprised to find that the nearest ones would lie well inside Pluto’s orbit,” Keeton said.

Petters added, “If braneworld black holes form even 1 percent of the dark matter in our part of the galaxy — a cautious assumption — there should be several thousand braneworld black holes in our solar system.”
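The figures quoted by Keeton and Petters can be sanity-checked with simple arithmetic: the number density of black holes is a fraction f of the local dark-matter density ρ divided by the mass M of each object, and multiplying that density by a volume gives the expected count. The sketch below is a rough back-of-the-envelope estimate, not the researchers’ calculation, and every input is an assumption: a local dark-matter density of about 0.3 GeV/cm³ (a commonly quoted value), a hypothetical single black-hole mass of 10^12 kg standing in for “a tiny asteroid,” and a sphere of radius 40 AU (roughly Pluto’s orbit) standing in for the solar system. The function name `braneworld_bh_census` is hypothetical.

```python
import math

# Unit conversions (1 GeV/c^2 = 1.783e-27 kg; 1 cm^3 = 1e-6 m^3).
GEV_PER_CM3_IN_KG_PER_M3 = 1.783e-27 * 1e6
AU_IN_M = 1.496e11


def braneworld_bh_census(dark_matter_density, fraction, bh_mass, radius):
    """Hypothetical helper: rough census of black holes in a sphere.

    dark_matter_density: local dark-matter mass density in kg/m^3
    fraction: share of that density in black holes (0.01 for 1 percent)
    bh_mass: mass of each black hole in kg (assumed single value)
    radius: radius of the spherical volume in m
    Returns (expected count inside the sphere, characteristic spacing in m).
    """
    number_density = fraction * dark_matter_density / bh_mass  # objects per m^3
    volume = 4.0 / 3.0 * math.pi * radius**3
    expected_count = number_density * volume
    # Radius of a sphere that holds one object on average: a rough
    # scale for the distance to the nearest black hole.
    spacing = (3.0 / (4.0 * math.pi * number_density)) ** (1.0 / 3.0)
    return expected_count, spacing


# Assumed inputs, not taken from the paper.
rho_dm = 0.3 * GEV_PER_CM3_IN_KG_PER_M3   # ~0.3 GeV/cm^3 local dark matter
count, spacing = braneworld_bh_census(rho_dm, 0.01, 1e12, 40 * AU_IN_M)
print(f"expected count within 40 AU: {count:.0f}")
print(f"characteristic spacing: {spacing / AU_IN_M:.1f} AU")
```

With these inputs the script reports roughly 4,800 objects within 40 AU and a characteristic spacing of about 2.4 AU, the same order of magnitude as “several thousand” and “well inside Pluto’s orbit.” Because the count scales as 1/M, a different assumed mass changes the answer proportionally.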

But do braneworld black holes really exist, providing evidence for the 5-D braneworld theory?

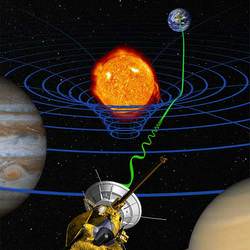

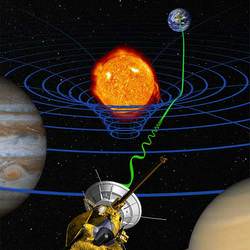

The scientists showed that it should be possible to answer this question by observing how braneworld black holes affect electromagnetic radiation traveling to Earth from other galaxies. Radiation passing near a black hole is deflected by the object’s strong gravity, an effect called “gravitational lensing.”
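For reference, the standard weak-field result in general relativity for light passing a point mass M at impact parameter b (G is the gravitational constant, c the speed of light) is given below. It is shown only as the general-relativistic baseline and includes no braneworld correction. As a check, a ray grazing the Sun is bent by about 1.75 arcseconds, the value tested during the 1919 solar eclipse.

$$
\hat{\alpha} = \frac{4GM}{c^{2}\,b}, \qquad b \gg \frac{2GM}{c^{2}}
$$

$$
\hat{\alpha}_{\odot} = \frac{4GM_{\odot}}{c^{2}R_{\odot}} \approx \frac{4\,(1.327\times10^{20}\ \mathrm{m^{3}\,s^{-2}})}{(8.988\times10^{16}\ \mathrm{m^{2}\,s^{-2}})\,(6.96\times10^{8}\ \mathrm{m})} \approx 8.5\times10^{-6}\ \mathrm{rad} \approx 1.75''
$$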

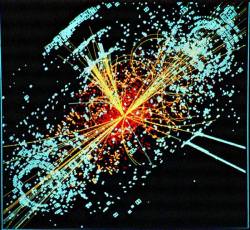

“A good place to look for gravitational lensing by braneworld black holes is in bursts of gamma rays coming to Earth,” Keeton said. These gamma-ray bursts are thought to be produced by enormous explosions throughout the universe. Such bursts from outer space were discovered inadvertently by the U.S. Air Force in the 1960s.

Keeton and Petters calculated that braneworld black holes would affect the gamma rays much as a rock in a pond affects passing ripples. The rock produces an “interference pattern” in its wake in which some ripple peaks are higher, some troughs are deeper, and some peaks and troughs cancel each other out. The interference pattern carries the signature of both the rock and the water.

Similarly, a braneworld black hole would produce an interference pattern in a passing burst of gamma rays as they travel to Earth, said Keeton and Petters. The scientists predicted the resulting bright and dark “fringes” in the interference pattern, which they said provides a means of inferring characteristics of braneworld black holes and, in turn, of space and time.

“We discovered that the signature of a fourth dimension of space appears in the interference patterns,” Petters said. “This extra spatial dimension creates a contraction between the fringes compared to what you’d get in General Relativity.”
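The interference the researchers describe can be illustrated with the textbook geometric-optics model of lensing by a point mass in general relativity (often called “femtolensing” in the gamma-ray context). The black hole forms two images of the burst whose light arrives with a tiny time delay Δt, so the combined brightness oscillates with photon energy, and adjacent bright fringes are separated by h/Δt. This is a sketch of the general-relativistic baseline only: it uses standard point-lens formulas rather than the Keeton and Petters framework, and it does not model the braneworld correction that, according to Petters, narrows the fringe spacing. The lens mass of 10^12 kg, the source offset, and all function names are hypothetical.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m/s
H_EV_S = 4.1357e-15  # Planck constant, eV*s


def point_lens_images(y):
    """Magnifications (absolute values) of the two images of a GR point lens.

    y: source offset from the lens axis in Einstein-radius units (y > 0).
    """
    root = math.sqrt(y * y + 4.0)
    base = (y * y + 2.0) / (2.0 * y * root)
    return base + 0.5, base - 0.5


def point_lens_delay(mass_kg, y, lens_redshift=0.0):
    """Arrival-time difference (s) between the two images in GR."""
    root = math.sqrt(y * y + 4.0)
    scale = 4.0 * G * mass_kg * (1.0 + lens_redshift) / C**3
    return scale * (0.5 * y * root + math.log((root + y) / (root - y)))


def fringe_pattern(mass_kg, y, energies_ev):
    """Total magnification versus photon energy in the geometric-optics limit.

    The images interfere with phase 2*pi*nu*dt; the saddle-point image carries
    an extra -pi/2 phase, which turns the cross term into a sine. The limit is
    valid only for photon energies well above h/dt.
    """
    mu_p, mu_m = point_lens_images(y)
    dt = point_lens_delay(mass_kg, y)
    amplitude = 2.0 * math.sqrt(mu_p * mu_m)
    pattern = []
    for energy in energies_ev:
        nu = energy / H_EV_S  # photon frequency in Hz
        pattern.append(mu_p + mu_m + amplitude * math.sin(2.0 * math.pi * nu * dt))
    return pattern


def fringe_spacing_ev(mass_kg, y):
    """Energy separation between adjacent bright fringes, h / dt, in eV."""
    return H_EV_S / point_lens_delay(mass_kg, y)


# Hypothetical example: a 1e12 kg lens, source one Einstein radius off-axis.
mass = 1e12
offset = 1.0
spacing = fringe_spacing_ev(mass, offset)
print(f"fringe spacing: {spacing / 1e6:.0f} MeV")
energies = [1e9 + k * 1e7 for k in range(200)]  # about 1 to 3 GeV, 10 MeV steps
pattern = fringe_pattern(mass, offset, energies)
```

For these inputs the fringes are spaced by roughly 200 MeV, i.e. at gamma-ray energies. Because Δt grows linearly with the lens mass, the spacing shrinks as 1/M, which is why the measured spacing constrains the properties of the lensing object.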

Petters and Keeton said it should be possible to measure the predicted gamma-ray fringe patterns using the Gamma-ray Large Area Space Telescope, which is scheduled to be launched on a spacecraft in August 2007. The telescope is a joint effort between NASA, the U.S. Department of Energy, and institutions in France, Germany, Japan, Italy and Sweden.

The scientists said their prediction would apply to all braneworld black holes, whether in our solar system or beyond.

“If the braneworld theory is correct,” they said, “there should be many, many more braneworld black holes throughout the universe, each carrying the signature of a fourth dimension of space.”

Original Source: Duke University