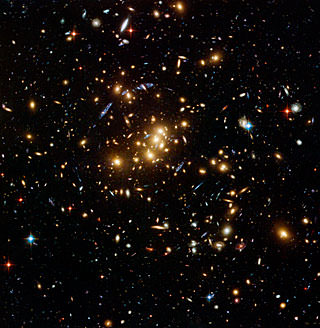

The name “dark energy” is just a placeholder for the force, whatever it is, that is causing the expansion of the universe to accelerate. But astronomers may be getting closer to understanding this force. New observations of Cepheid variable stars by the Hubble Space Telescope have refined the measurement of the universe’s present expansion rate to a precision where the error is smaller than five percent. The new value for the expansion rate, known as the Hubble constant, or H0 (after Edwin Hubble, who first measured the expansion of the universe nearly a century ago), is 74.2 ± 3.6 kilometers per second per megaparsec. The result agrees closely with an earlier Hubble measurement of 72 ± 8 km/sec/megaparsec, but is now more than twice as precise.
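The “smaller than five percent” and “more than twice as precise” figures follow directly from the quoted values. A minimal sketch of that arithmetic, in which the function name is illustrative and not from the study:

```python
def fractional_error_percent(value, uncertainty):
    """Return the uncertainty as a percentage of the central value."""
    return 100.0 * uncertainty / value

# Values quoted in the article, in km/sec/megaparsec.
new_pct = fractional_error_percent(74.2, 3.6)  # new measurement
old_pct = fractional_error_percent(72.0, 8.0)  # earlier Hubble measurement

# Ratio of the two fractional errors: how much tighter the new value is.
improvement = old_pct / new_pct

print(f"new: {new_pct:.1f}%  old: {old_pct:.1f}%  improvement: {improvement:.2f}x")
```

The new fractional error comes out just under five percent and the earlier one at about eleven percent, a bit more than a factor of two apart.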

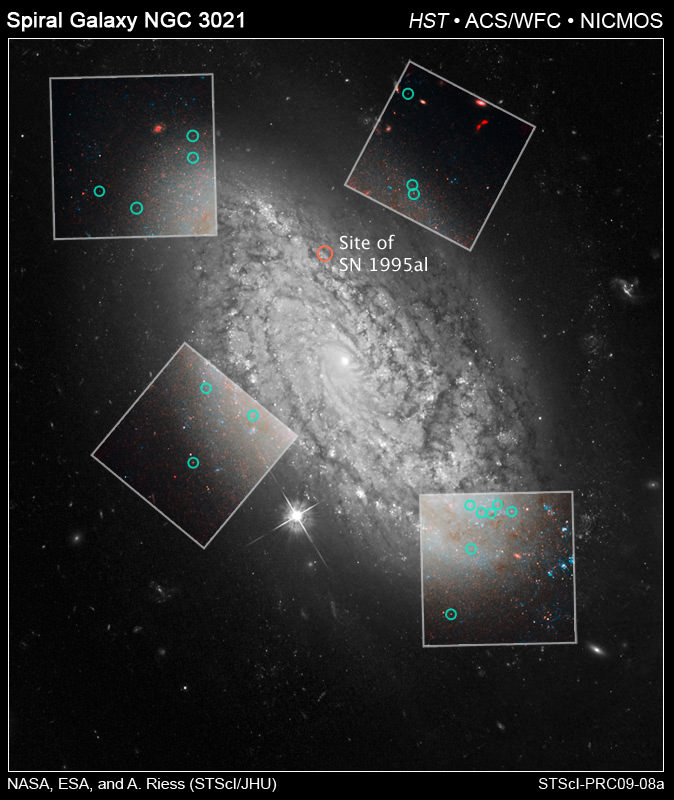

The Hubble measurement, conducted by the SHOES (Supernova H0 for the Equation of State) team led by Adam Riess of the Space Telescope Science Institute and the Johns Hopkins University, uses a number of refinements to streamline and strengthen the construction of a cosmic “distance ladder,” a billion light-years in length, that astronomers use to determine the universe’s expansion rate.

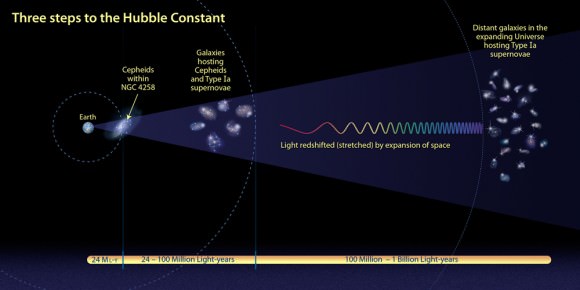

Hubble observations of the pulsating Cepheid variables in a nearby cosmic mile marker, the galaxy NGC 4258, and in the host galaxies of recent supernovae, directly link these distance indicators. The use of Hubble to bridge these rungs in the ladder eliminated the systematic errors that are almost unavoidably introduced by comparing measurements from different telescopes.

Riess explains the new technique: “It’s like measuring a building with a long tape measure instead of moving a yard stick end over end. You avoid compounding the little errors you make every time you move the yardstick. The higher the building, the greater the error.”
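Riess’s yardstick analogy can be put in rough numbers. The sketch below is a toy model and not the team’s error analysis: it assumes each placement of the yardstick adds an independent random error plus a small shared bias, and all of the values are hypothetical.

```python
import math

def compounded_errors(n_steps, random_err, bias):
    """Toy model of errors accumulated over n_steps end-to-end placements.

    Assumption: independent random errors add in quadrature (growing as
    the square root of n_steps), while a bias shared by every placement
    adds linearly.
    """
    random_total = random_err * math.sqrt(n_steps)
    bias_total = bias * n_steps
    return random_total, bias_total

# Hypothetical numbers: 100 placements, 1 mm random error and 0.5 mm bias each.
random_total, bias_total = compounded_errors(100, 1.0, 0.5)
print(f"random: {random_total} mm, systematic: {bias_total} mm")
```

In this model a single long tape measure corresponds to `n_steps = 1`, so neither term has a chance to build up.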

Lucas Macri, professor of physics and astronomy at Texas A&M, and a significant contributor to the results, said, “Cepheids are the backbone of the distance ladder because their pulsation periods, which are easily observed, correlate directly with their luminosities. Another refinement of our ladder is the fact that we have observed the Cepheids in the near-infrared parts of the electromagnetic spectrum where these variable stars are better distance indicators than at optical wavelengths.”

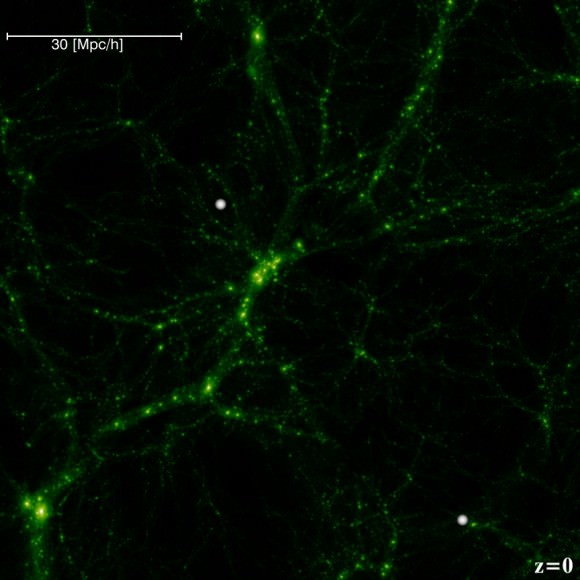

This new, more precise value of the Hubble constant was used to test and constrain the properties of dark energy, the form of energy that produces a repulsive force in space, which is causing the expansion rate of the universe to accelerate.

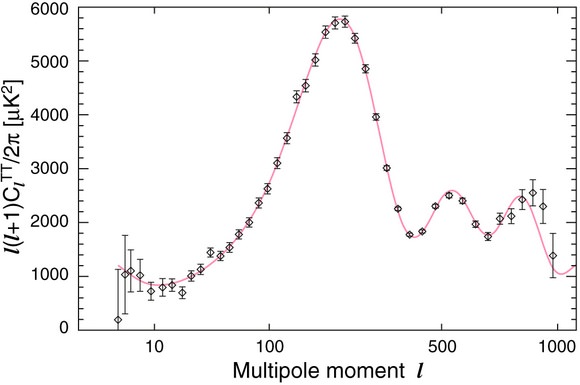

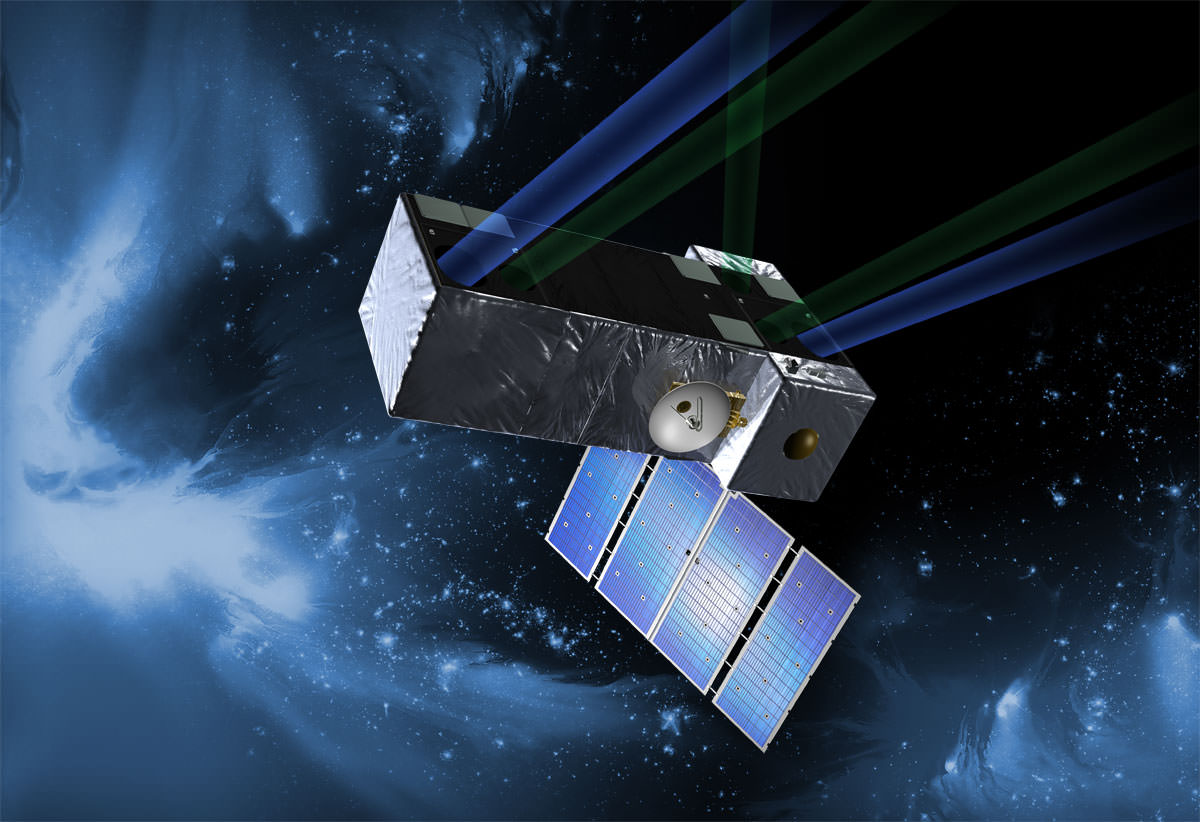

By bracketing the expansion history of the universe between today and when the universe was only approximately 380,000 years old, the astronomers were able to place limits on the nature of the dark energy that is causing the expansion to speed up. (The measurement for the far, early universe is derived from fluctuations in the cosmic microwave background, as resolved by NASA’s Wilkinson Microwave Anisotropy Probe, WMAP, in 2003.)

Their result is consistent with the simplest interpretation of dark energy: that it is mathematically equivalent to Albert Einstein’s hypothesized cosmological constant, introduced a century ago to push on the fabric of space and prevent the universe from collapsing under the pull of gravity. (Einstein, however, removed the constant once the expansion of the universe was discovered by Edwin Hubble.)

“If you put in a box all the ways that dark energy might differ from the cosmological constant, that box would now be three times smaller,” says Riess. “That’s progress, but we still have a long way to go to pin down the nature of dark energy.”

Though the cosmological constant was conceived of long ago, observational evidence for dark energy didn’t come along until 11 years ago, when two studies, one led by Riess and Brian Schmidt of Mount Stromlo Observatory, and the other by Saul Perlmutter of Lawrence Berkeley National Laboratory, discovered dark energy independently, in part with Hubble observations. Since then astronomers have been pursuing observations to better characterize dark energy.

Riess’s approach to narrowing alternative explanations for dark energy—whether it is a static cosmological constant or a dynamical field (like the repulsive force that drove inflation after the big bang)—is to further refine measurements of the universe’s expansion history.

Before Hubble was launched in 1990, the estimates of the Hubble constant varied by a factor of two. In the late 1990s the Hubble Space Telescope Key Project on the Extragalactic Distance Scale refined the value of the Hubble constant to an error of only about ten percent. This was accomplished by observing Cepheid variables at optical wavelengths out to greater distances than obtained previously and comparing those to similar measurements from ground-based telescopes.

The SHOES team used Hubble’s Near Infrared Camera and Multi-Object Spectrometer (NICMOS) and the Advanced Camera for Surveys (ACS) to observe 240 Cepheid variable stars across seven galaxies. One of these galaxies was NGC 4258, whose distance was very accurately determined through observations with radio telescopes. The other six galaxies recently hosted Type Ia supernovae, which are reliable distance indicators for measuring even greater distances in the universe. Type Ia supernovae all explode with nearly the same amount of energy and therefore have almost the same intrinsic brightness.
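Because a Type Ia supernova’s intrinsic brightness is nearly fixed, its apparent brightness gives its distance through the standard distance-modulus relation, and the Hubble law then turns that distance into a recession velocity. The sketch below illustrates the idea only. The absolute magnitude of -19.3 and the apparent magnitude of 15.7 are assumed, illustrative values, not figures from the study.

```python
def distance_mpc(apparent_mag, absolute_mag):
    """Distance in megaparsecs from the distance modulus m - M = 5*log10(d / 10 pc)."""
    distance_pc = 10 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)
    return distance_pc / 1.0e6

def recession_velocity(h0, d_mpc):
    """Hubble law: velocity in km/sec for H0 in km/sec/megaparsec."""
    return h0 * d_mpc

ASSUMED_ABSOLUTE_MAG = -19.3  # illustrative value for a Type Ia supernova
observed_mag = 15.7           # hypothetical observed apparent magnitude

d_mpc = distance_mpc(observed_mag, ASSUMED_ABSOLUTE_MAG)
velocity = recession_velocity(74.2, d_mpc)  # H0 value quoted in the article

print(f"distance: {d_mpc:.1f} Mpc, velocity: {velocity:.0f} km/sec")
```

With these assumed magnitudes the supernova sits at roughly 100 megaparsecs, where the quoted Hubble constant implies a recession velocity of about 7,400 km/sec.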

By observing Cepheids with very similar properties at near-infrared wavelengths in all seven galaxies, and using the same telescope and instrument, the team was able to more precisely calibrate the luminosity of supernovae. With Hubble’s powerful capabilities, the team was able to sidestep some of the shakiest rungs along the previous distance ladder involving uncertainties in the behavior of Cepheids.

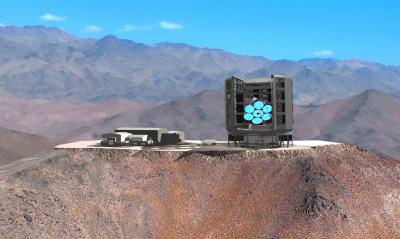

Riess would eventually like to see the Hubble constant refined to a value with an error of no more than one percent, to put even tighter constraints on explanations of dark energy.