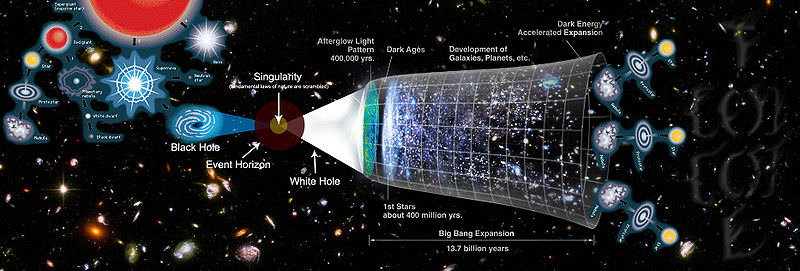

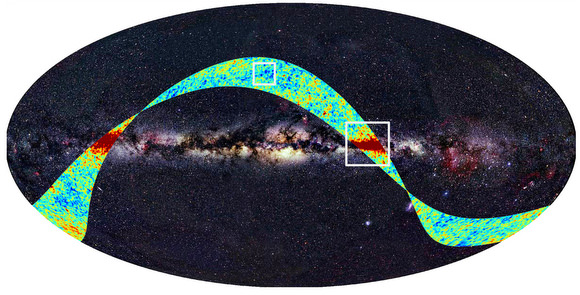

Theoretical physics has brought us the notion that our single universe is not necessarily the only game in town. Satellite data from WMAP, along with string theory and its 11-dimensional hyperspace idea, has produced the concept of the multiverse, in which the Big Bang could have produced many different universes instead of a single uniform universe. The idea has gained popularity recently, so it was only a matter of time until someone asked how many universes the multiverse could possibly contain. The number, according to two physicists, could be "humongous."

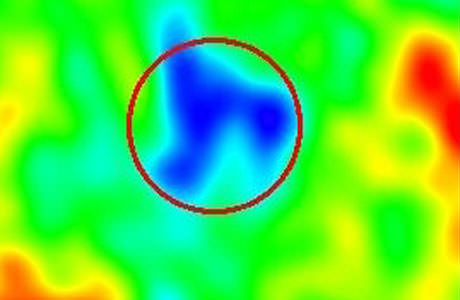

Andrei Linde and Vitaly Vanchurin of Stanford University in California did a few back-of-the-envelope calculations, starting with the idea that the Big Bang was essentially a quantum process that generated quantum fluctuations in the state of the early universe. The universe then underwent a period of rapid growth called inflation, during which these perturbations were "frozen," creating different initial classical conditions in different parts of the cosmos. Since each of these regions would have a different set of laws of low-energy physics, they can be thought of as different universes.

Linde and Vanchurin then estimated how many different universes could have appeared as a result of this effect. Their answer is that this number must be proportional to the effect that caused the perturbations in the first place: a process called slow-roll inflation, which Linde proposed earlier as a solution to the problem of bubble universes colliding in the early inflationary period. In this model, inflation is driven by a scalar field rolling down a potential energy hill. When the field rolls very slowly compared with the expansion of the universe, inflation occurs and bubble collisions end up being rare.
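The article does not say which potential the field rolls down. To make "rolling slowly" concrete, here is a minimal Python sketch that assumes the textbook quadratic potential V(φ) = ½m²φ² in reduced Planck units; this toy model is an illustrative choice, not the one used by Linde and Vanchurin. The standard slow-roll parameter ε = ½(V′/V)² compares the steepness of the hill with its height: inflation continues while ε < 1 and ends when the field reaches φ = √2.

```python
import math

# Assumed toy model (not from the paper): V(phi) = 0.5 * m**2 * phi**2,
# with phi measured in units of the reduced Planck mass (M_p = 1).
# phi must be nonzero, since V = 0 at phi = 0.

def epsilon(phi: float) -> float:
    """First slow-roll parameter, epsilon = 0.5 * (V'/V)**2; equals 2 / phi**2 here."""
    m = 1.0  # the mass cancels out of epsilon for this potential
    v = 0.5 * m**2 * phi**2   # potential V(phi)
    dv = m**2 * phi           # slope V'(phi)
    return 0.5 * (dv / v) ** 2

def is_inflating(phi: float) -> bool:
    """Accelerated expansion continues while epsilon < 1 (slow roll needs epsilon << 1)."""
    return epsilon(phi) < 1.0

def e_folds(phi_start: float) -> float:
    """E-folds of expansion from phi_start until epsilon = 1 at phi_end = sqrt(2).

    N = integral of V / V' dphi = (phi_start**2 - phi_end**2) / 4 for this potential.
    """
    phi_end = math.sqrt(2.0)
    return (phi_start**2 - phi_end**2) / 4.0

for phi in (1.0, 2.0, 10.0):
    print(f"phi={phi:5.1f}  epsilon={epsilon(phi):.3f}  inflating={is_inflating(phi)}")
print(f"e-folds starting from phi=10: {e_folds(10.0):.1f}")
```

The farther up the hill the field starts, the smaller ε is and the more e-folds of expansion it produces before inflation ends.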

Using all of this (and more; see their paper on arXiv), Linde and Vanchurin calculate that the number of universes in the multiverse could be at least 10^10^10^7, a number which is definitely "humongous," as they described it.

The next question, then, is how many universes we could actually see. To answer it, Linde and Vanchurin invoke the Bekenstein limit, a bound on the amount of information that can be contained within any given volume of space. Here the properties of the observer become an important factor, because what an observer can tell apart is limited both by that bound and by the capacity of the human brain.
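The Bekenstein bound can be written as I ≤ 2πRE/(ħc ln 2) bits for a region of radius R containing total energy E. The Python sketch below evaluates it for a brain-sized sphere; the 1.5 kg mass and 6.7 cm radius are rough assumed values, not figures from Linde and Vanchurin. It suggests that the physical bound for such a volume (on the order of 10^42 bits) sits far above the roughly 10^16 bits a person absorbs in a lifetime, in line with the physicists' remark that our ability to distinguish and remember sets the strongest limit.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s (CODATA 2018)
C = 299_792_458.0       # speed of light, m/s (exact)

def bekenstein_bits(radius_m: float, mass_kg: float) -> float:
    """Upper bound on information (bits) in a sphere of radius R with energy E = m c^2.

    I <= 2 * pi * R * E / (hbar * c * ln 2)
    """
    energy = mass_kg * C**2
    return 2 * math.pi * radius_m * energy / (HBAR * C * math.log(2))

# Assumed, illustrative values for a brain-sized sphere.
brain_bound = bekenstein_bits(radius_m=0.067, mass_kg=1.5)  # on the order of 1e42 bits
LIFETIME_BITS = 1e16  # the article's figure for one person's lifetime

print(f"Bekenstein bound for the sphere: {brain_bound:.2e} bits")
print(f"Information absorbed in a life:  {LIFETIME_BITS:.0e} bits")
```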

The total amount of information that one individual can absorb during a lifetime is about 10^16 bits. A memory holding 10^16 bits can be in 2^(10^16) distinct states, which is less than 10^10^16, so a typical human brain can have at most about 10^10^16 configurations and could never distinguish more than that number of different universes.
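The step from bits to configurations is a counting argument: n binary memory cells can be in 2^n distinct states. This short Python sketch (an illustration, not code from the paper) works in logarithms, since 2^(10^16) is far too large to compute directly. It gives 2^(10^16) ≈ 10^(3×10^15), within the 10^10^16 ceiling, and compares that with the 10^10^10^7 universes estimated for the multiverse by taking the logarithm twice.

```python
import math

def log10_configurations(bits: float) -> float:
    """log10 of the number of states of `bits` binary cells, i.e. log10(2**bits)."""
    return bits * math.log10(2)

LIFETIME_BITS = 1e16  # figure quoted in the article

brain_log10 = log10_configurations(LIFETIME_BITS)  # about 3.0e15, so 2**(1e16) ~ 10**(3.0e15)
brain_loglog10 = math.log10(brain_log10)           # about 15.5, below the rounded 16

# For N = 10^10^10^7, log10(log10(N)) = 10^7.
multiverse_loglog10 = 10**7

print(f"brain:      log10(log10(configs))   = {brain_loglog10:.2f}")
print(f"multiverse: log10(log10(universes)) = {multiverse_loglog10}")
```

Even after two logarithms, the multiverse estimate exceeds the brain's capacity by a factor of hundreds of thousands.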

“So, the total number of possibilities accessible to any given observer is limited not only by the entropy of perturbations of metric produced by inflation and by the size of the cosmological horizon, but also by the number of degrees of freedom of an observer,” the physicists write.

"We have found that the strongest limit on the number of different locally distinguishable geometries is determined mostly by our abilities to distinguish between different universes and to remember our results," wrote Linde and Vanchurin. "Potentially it may become very important that when we analyze the probability of existence of a universe of a given type, we should be talking about a consistent pair: the universe and an observer who makes the rest of the universe 'alive' and the wave function of the rest of the universe time-dependent."

So their conclusion is that the limit on the number of distinguishable universes is set mostly not by the properties of the multiverse itself, but by the properties of the observer.

They hope to study this concept further to see whether this probability is proportional to the observable entropy of inflation.

Sources: ArXiv, Technology Review Blog