The Northern Lights have fascinated human beings for millennia. In fact, their existence has informed the mythology of many cultures, including the Inuit, Northern Cree, and ancient Norse. They were also a source of intense fascination for the ancient Greeks and Romans, and were seen as a sign from God by medieval Europeans.

Thanks to the birth of modern astronomy, we now know what causes both the Aurora Borealis and its southern sibling, the Aurora Australis. Nevertheless, they remain a subject of intense fascination and scientific research, as well as a major tourist draw. For those who live north of 60° latitude, this fantastic light show is also a regular occurrence.

Causes:

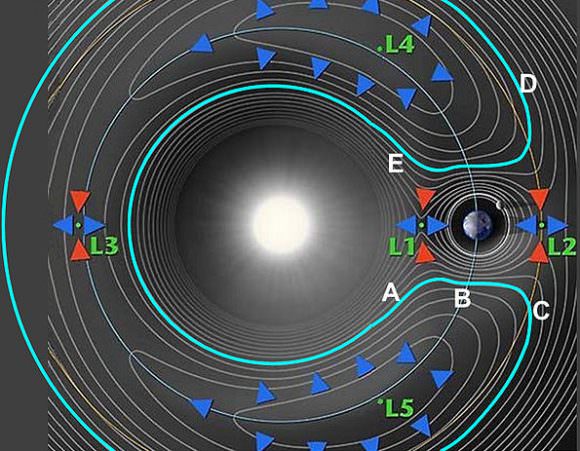

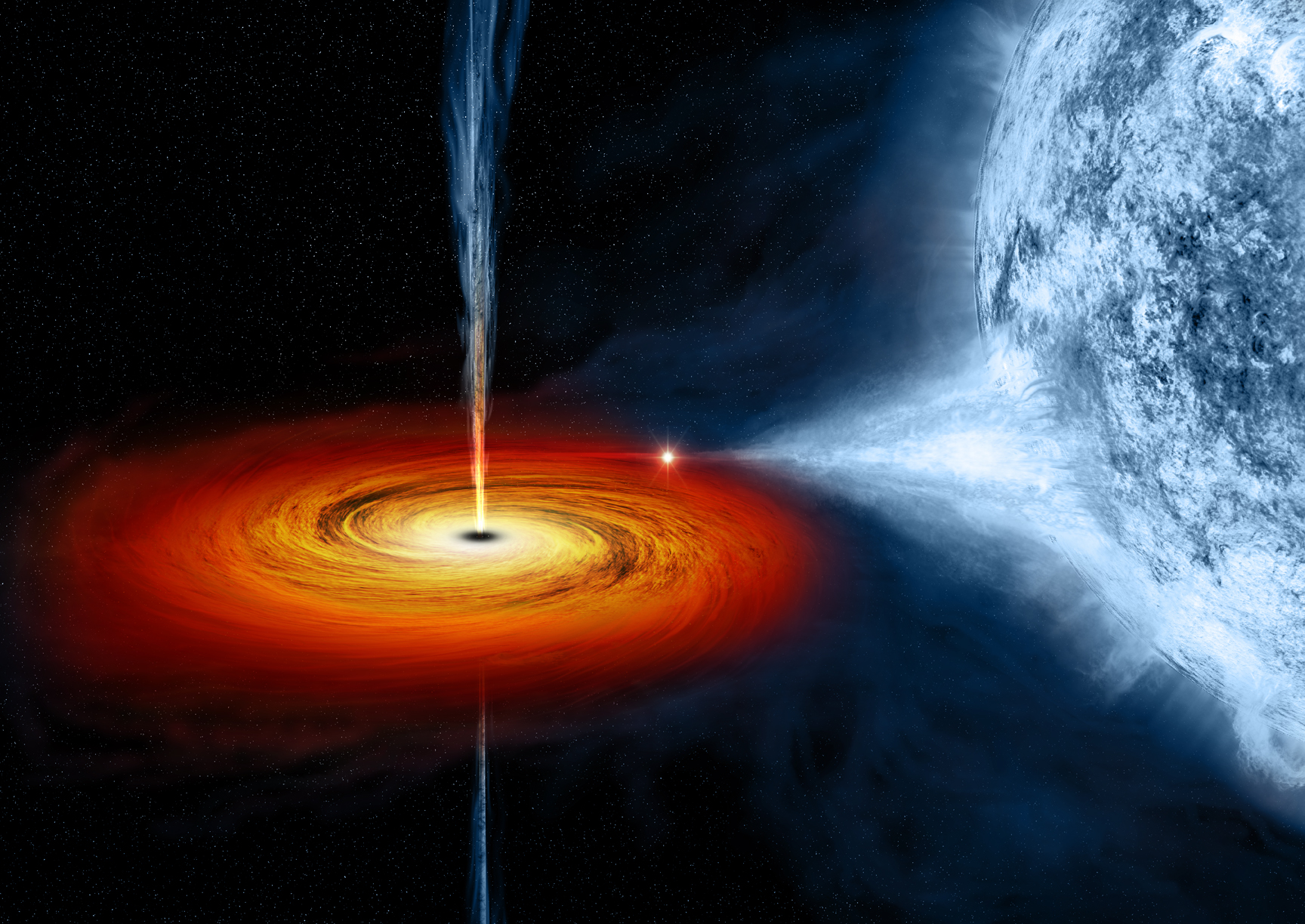

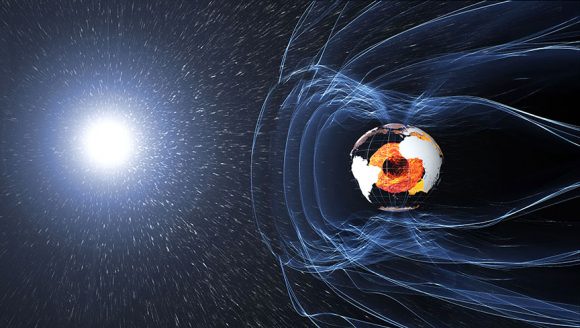

Aurora Borealis (and Australis) is caused by interactions between energetic particles from the Sun and the Earth’s magnetic field. The invisible field lines of Earth’s magnetosphere travel from the Earth’s northern magnetic pole to its southern magnetic pole. When charged particles reach the magnetic field, they are deflected, creating a “bow shock” (so named because of its apparent shape) around Earth.

However, Earth’s magnetic field lines converge and dip toward the surface near the poles, so some particles are funneled along them into the Earth’s atmosphere, where they collide with gas particles. These collisions emit light that appears to wave and dance, and that is generally a pale, yellowish-green in color.

The variations in color are due to the type of gas particles that are colliding. The common yellowish-green is produced by oxygen located about 100 km (60 miles) above the Earth, whereas high-altitude oxygen, at heights of up to 320 km (200 miles), produces all-red auroras. Meanwhile, interactions between charged particles and nitrogen produce blue or purplish-red auroras.
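The relationship between gas, altitude, and color described above can be summarized as a small lookup. This is only an illustrative sketch: the function name `aurora_color` and the cutoff `ASSUMED_RED_OXYGEN_MIN_KM` are hypothetical, and the 200 km cutoff is an assumption, since the text gives only the two reference altitudes of about 100 km and up to 320 km.

```python
# Assumed boundary between "lower" and "high-altitude" oxygen. The article only
# states ~100 km for green and up to ~320 km for red, so this value is a guess
# chosen for illustration, not a measured threshold.
ASSUMED_RED_OXYGEN_MIN_KM = 200.0


def aurora_color(gas: str, altitude_km: float) -> str:
    """Return the typical aurora color for a gas at a given altitude."""
    gas = gas.strip().lower()
    if gas == "oxygen":
        # High-altitude oxygen glows red; lower oxygen glows yellowish-green.
        if altitude_km >= ASSUMED_RED_OXYGEN_MIN_KM:
            return "red"
        return "yellowish-green"
    if gas == "nitrogen":
        # The article does not tie nitrogen's colors to a specific altitude.
        return "blue or purplish-red"
    raise ValueError(f"no color described for gas: {gas!r}")


if __name__ == "__main__":
    for gas, altitude in [("oxygen", 100), ("oxygen", 320), ("nitrogen", 150)]:
        print(f"{gas} at {altitude} km: {aurora_color(gas, altitude)}")
```

Real auroras often mix these emissions, so a single color per gas is a simplification.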

Variability:

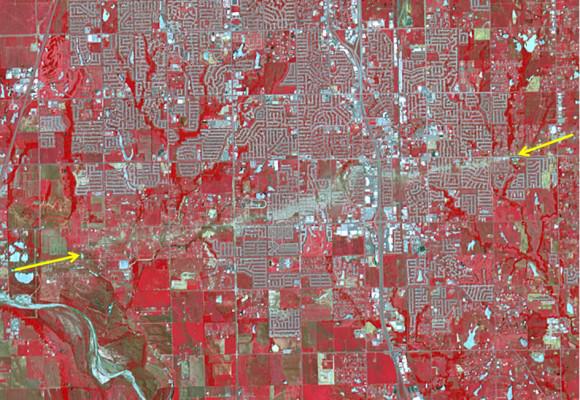

The visibility of the northern (and southern) lights depends on many factors, much like any other type of meteorological activity. Though they are generally visible in the far northern and southern regions of the globe, there have been instances in the past where the lights were visible as close to the equator as Mexico.

In places like Alaska, Northern Canada, Norway and Siberia, the northern lights are often seen every night of the week in the winter. Though they occur year-round, they are only visible when it is dark out, which is why they are more discernible during the months when the nights are longer.

Because they depend on the solar wind, auroras are more plentiful during peak periods of activity in the Solar Cycle. This cycle lasts roughly 11 years, and is marked by the increase and decrease of sunspots on the Sun’s surface. The period with the greatest number of sunspots in any given solar cycle is designated a “Solar Maximum”, whereas the period with the lowest number is a “Solar Minimum.”

A Solar Maximum also accords with bright regions appearing in the Sun’s corona, which are rooted in the lower sunspots. Scientists track these active regions since they are often the origin of eruptions on the Sun, such as solar flares or coronal mass ejections.

The most recent solar minimum occurred in 2008. In January 2010, activity on the Sun’s surface began to increase, starting with the release of a lower-intensity M-class flare. The Sun continued to get more active, culminating in a Solar Maximum by the summer of 2013.
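As a rough back-of-the-envelope illustration, the 11-year cycle and the 2008 minimum mentioned above can be turned into simple arithmetic. The sketch below assumes an idealized cycle of exactly 11 years, which real cycles only approximate; the function names `cycle_phase` and `next_minimum_after` are hypothetical.

```python
# Values taken from the text. Treating the cycle as exactly 11 years long is an
# idealization; actual solar cycles vary in length.
CYCLE_YEARS = 11
LAST_MINIMUM_YEAR = 2008


def cycle_phase(year: float) -> float:
    """Fraction of the way through the idealized cycle (0.0 = solar minimum)."""
    return ((year - LAST_MINIMUM_YEAR) % CYCLE_YEARS) / CYCLE_YEARS


def next_minimum_after(year: int) -> int:
    """First idealized solar-minimum year strictly after the given year."""
    cycles_elapsed = (year - LAST_MINIMUM_YEAR) // CYCLE_YEARS
    return LAST_MINIMUM_YEAR + (cycles_elapsed + 1) * CYCLE_YEARS


if __name__ == "__main__":
    print(f"Phase in 2013: {cycle_phase(2013):.2f}")
    print(f"Next idealized minimum after 2013: {next_minimum_after(2013)}")
```

Under these assumptions, 2013 falls a little under halfway through the cycle, which is broadly consistent with a maximum around that time. This is not a forecasting method.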

Locations for Viewing:

The ideal places to view the Northern Lights are naturally located in geographical regions north of 60° latitude. These include northern Canada, Greenland, Iceland, Scandinavia, Alaska, and Northern Russia. Many organizations maintain websites dedicated to tracking optimal viewing conditions.

For instance, the Geophysical Institute of the University of Alaska Fairbanks maintains the Aurora Forecast. This site is regularly updated to let residents know when auroral activity is high, and how far south it will extend. Typically, residents who live in central or northern Alaska (from Fairbanks to Barrow) have a better chance than those living in the south (Anchorage to Juneau).

In Northern Canada, auroras are often spotted from the Yukon, the Northwest Territories, Nunavut, and Northern Quebec. However, they are sometimes seen from locations like Dawson Creek, BC; Fort McMurray, Alberta; northern Saskatchewan; and the town of Moose Factory by James Bay, Ontario. For information, check out Canadian Geographic Magazine’s “Northern Lights Across Canada”.

The National Oceanic and Atmospheric Administration also provides 30-minute forecasts on auroras through its Space Weather Prediction Center. And then there’s Aurora Alert, an Android app that allows you to get regular updates on when and where an aurora will be visible in your region.

Understanding the scientific cause of auroras has not made them any less awe-inspiring or wondrous. Every year, countless people venture to locations where they can be seen. And those serving aboard the ISS get the best seat in the house!

Speaking of which, be sure to check out this stunning NASA video which shows the Northern Lights being viewed from the ISS:

We have written many interesting articles about auroras here at Universe Today. Here are The Northern and Southern Lights – What is an Aurora?, What is the Aurora Borealis?, What is the Aurora Australis?, What Causes the Northern Lights?, How Does the Aurora Borealis Form?, and Watch Fast and Furious All-sky Aurora Filmed in Real Time.

For more information, visit the THEMIS website – a NASA mission that is currently studying space weather in great detail. The Space Weather Center has information on the solar wind and how it causes aurorae.

Astronomy Cast also has episodes on the subject, like Episode 42: Magnetism Everywhere.

Sources: