Finding a black hole is an easy task… compared with searching for a wormhole. Suspected black holes have a massive gravitational effect on planets, stars and even galaxies, generating radiation, producing jets and accretion disks. Black holes will even bend light through gravitational lensing. Now, try finding a wormhole… Any ideas? Well, a Russian researcher thinks he has found an answer, but a highly sensitive radio telescope plus a truckload of patience (I’d imagine) is needed to find a special wormhole signature…

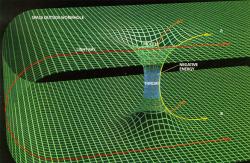

Wormholes are a valid consequence of Einstein’s general theory of relativity. A wormhole, in theory, acts as a shortcut or tunnel through space and time. There are several versions of the same theme (i.e. wormholes may link different universes; they may link two separate locations in the same universe; they may even link black and white holes together), but the physics is similar: wormholes create a link between two locations in space-time, bypassing normal three-dimensional travel through space. It is also theorized that matter can travel through some wormholes, fuelling sci-fi stories such as the film Stargate or Star Trek: Deep Space Nine. If wormholes do exist, however, it is highly unlikely that you’ll find a handy key to open the mouth of a wormhole in your back yard. They are likely to be very elusive, and you’ll probably need some specialist equipment to travel through them (although this will be virtually impossible).

Alexander Shatskiy, from the Lebedev Physical Institute in Moscow, has an idea of how these wormholes may be observed. For a start, they can be distinguished from black holes, as wormhole mouths do not have an event horizon. Secondly, if matter could possibly travel through wormholes, then light certainly can, and the light that emerges will have a characteristic angular intensity distribution. If we were viewing a wormhole’s mouth, we would see a circle, resembling a bubble, with intense light radiating from the inside “rim”. Looking toward the center, we would notice the light dim sharply. At the very center we would notice none of this light, but we would see right through the mouth of the wormhole and see stars (from our side of the universe) shining straight through.

To observe a wormhole mouth, sufficiently advanced radio interferometers would be needed to look deep into the extreme environments of galactic cores and distinguish this exotic cosmic ghost from its black hole counterpart.

However, just because wormholes are possible does not mean they exist. They could simply be the mathematical leftovers of general relativity. And even if they do exist, they are likely to be highly unstable, so any opportunity to travel through time and space will be short-lived. Besides, the radiation passing through will be extremely blueshifted, so expect to burn up very quickly. Don’t pack your bags quite yet…

Source: arXiv publication