[/caption]

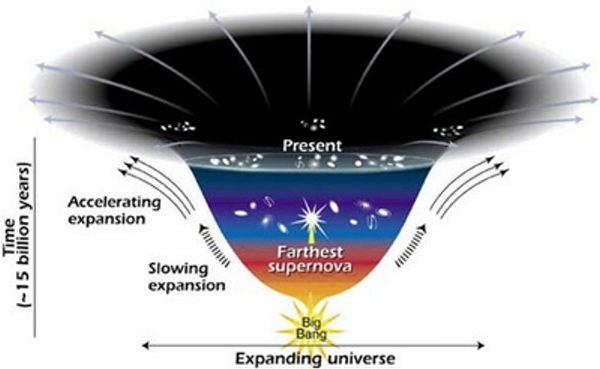

A remarkable finding of the early 21st century, one that sits alongside the Nobel Prize-winning discovery of the universe’s accelerating expansion, is that the universe is geometrically flat. This is an unexpected feature of a universe that is expanding – let alone one that is expanding at an accelerated rate – and, like the accelerating expansion, it is a key feature of our current standard model of the universe.

It may be that the flatness is just a consequence of the accelerating expansion – but to date this cannot be stated conclusively.

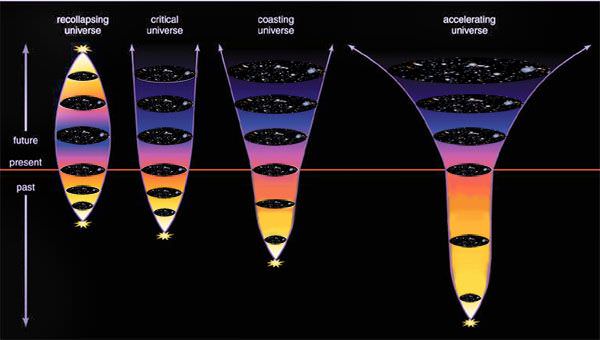

As usual, it’s all about Einstein. The Einstein field equations enable the geometry of the universe to be modelled – and a great variety of different solutions have been developed by different cosmology theorists. Some key solutions are the Friedmann equations, which calculate the shape and likely destiny of the universe, with three possible scenarios:

• closed universe – with contents so dense that the universe’s space-time geometry is drawn in upon itself in a hyper-spherical shape. Ultimately such a universe would be expected to collapse in on itself in a big crunch.

• open universe – without sufficient density to draw in space-time, producing an outflung hyperbolic geometry – commonly called a saddle-shape – with a destiny to expand forever.

• flat universe – with a ‘just right’ density – although an unclear destiny.

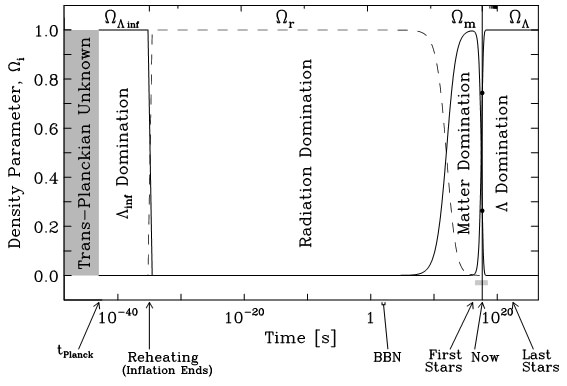

The Friedmann equations were used in twentieth century cosmology to try to determine the ultimate fate of our universe, with few people thinking that the flat scenario would be a likely finding – since a universe might be expected to stay flat for only a short period, before shifting to an open (or closed) state because its expansion (or contraction) would alter the density of its contents.
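Why flatness was expected to be short-lived can be sketched with the first Friedmann equation. The notation here is an assumption of this sketch, not something taken from the article: $a$ is the scale factor, $H = \dot{a}/a$ the expansion rate, $\rho$ the total density, $k$ the curvature constant and $\Omega$ the ratio of the actual density to the critical density.

$$
H^2 = \frac{8\pi G}{3}\,\rho - \frac{k c^2}{a^2},
\qquad
\rho_c \equiv \frac{3H^2}{8\pi G},
\qquad
\Omega \equiv \frac{\rho}{\rho_c}
$$

$$
\Omega - 1 = \frac{k c^2}{a^2 H^2} = \frac{k c^2}{\dot{a}^2}
$$

A flat universe has $\Omega = 1$ exactly. If the expansion is slowing down, $\dot{a}$ falls with time, so any small departure from $\Omega = 1$ grows. That is the sense in which a flat universe filled only with ordinary matter looks like a balancing act.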

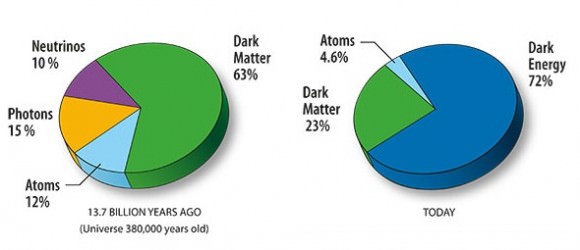

Matter density was assumed to be key to geometry – and estimates of the matter density of our universe came to around 0.2 atoms per cubic metre, while the relevant part of the Friedmann equations calculated that the critical density required to keep our universe flat would be 5 atoms per cubic metre. Since we could only find 4% of the required critical density, this suggested that we probably lived in an open universe – but then we started coming up with ways to measure the universe’s geometry directly.
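The critical density figure can be roughly reproduced from the standard expression ρ_c = 3H²/(8πG). The sketch below assumes a Hubble constant of 70 km/s/Mpc and treats every atom as hydrogen; both are assumptions of this example rather than values stated in the article, and the function name is purely illustrative.

```python
import math

G = 6.674e-11               # gravitational constant, m^3 kg^-1 s^-2
METRES_PER_MPC = 3.0857e22  # metres in one megaparsec
HYDROGEN_MASS = 1.6735e-27  # mass of one hydrogen atom, kg


def critical_density(h0_km_s_mpc):
    """Critical density in kg/m^3 for a Hubble constant given in km/s/Mpc."""
    h0_per_second = h0_km_s_mpc * 1000.0 / METRES_PER_MPC
    return 3.0 * h0_per_second ** 2 / (8.0 * math.pi * G)


H0_ASSUMED = 70.0  # km/s/Mpc, an assumed round value

rho_c = critical_density(H0_ASSUMED)
atoms_per_m3 = rho_c / HYDROGEN_MASS   # critical density as hydrogen atoms per m^3
observed_fraction = 0.2 / atoms_per_m3  # the article's 0.2 atoms per cubic metre

print(f"critical density: {rho_c:.2e} kg/m^3")
print(f"equivalent hydrogen atoms per cubic metre: {atoms_per_m3:.1f}")
print(f"fraction supplied by 0.2 atoms per cubic metre: {observed_fraction:.1%}")
```

With these assumed inputs the result lands between 5 and 6 atoms per cubic metre, which is in line with the rounded figures of 5 atoms and 4% quoted above. A different choice of Hubble constant shifts the answer, since the critical density scales with its square.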

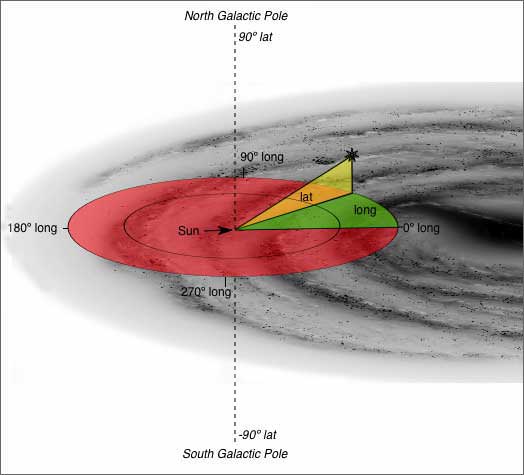

There’s a YouTube video of Lawrence Krauss (of Physics of Star Trek fame) explaining how this is done with cosmic microwave background data (from WMAP and earlier experiments) – where the CMB mapped on the sky represents one side of a triangle with you at its opposite apex looking out along its two other sides. The angles of the triangle can then be measured, which will add up to 180 degrees in a flat (Euclidean) universe, more than 180 in a closed universe and less than 180 in an open universe.
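The angle-sum rule can be checked with a toy calculation. The sketch below is not the CMB analysis itself: it takes an equilateral triangle drawn in a static space of constant curvature and uses the spherical and hyperbolic laws of cosines to find its angles. The function name and the choice of an equilateral triangle are assumptions made for illustration.

```python
import math


def equilateral_angle_sum_deg(side_over_radius, geometry):
    """Sum of the interior angles (degrees) of an equilateral triangle.

    side_over_radius is the side length divided by the curvature radius.
    For the closed case it must be below 2*pi/3 for the triangle to exist.
    """
    s = side_over_radius
    if geometry == "closed":
        # Spherical law of cosines with all three sides equal.
        cos_angle = math.cos(s) / (1.0 + math.cos(s))
    elif geometry == "open":
        # Hyperbolic law of cosines with all three sides equal.
        cos_angle = math.cosh(s) / (1.0 + math.cosh(s))
    elif geometry == "flat":
        # Euclidean equilateral triangle: each angle is 60 degrees.
        cos_angle = 0.5
    else:
        raise ValueError("geometry must be 'closed', 'open' or 'flat'")
    return 3.0 * math.degrees(math.acos(cos_angle))


for geometry in ("closed", "flat", "open"):
    total = equilateral_angle_sum_deg(1.0, geometry)
    print(f"{geometry:6s} angle sum: {total:.1f} degrees")
```

The departure from 180 degrees shrinks as the triangle gets small compared with the curvature radius, which is why a triangle stretching all the way to the CMB is useful: it is about as large as a measurable triangle can be.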

These findings, indicating that the universe was remarkably flat, came at the turn of the century around the same time that the 1998 accelerated expansion finding was announced.

So really, it is the universe’s flatness and the estimate that there is only 4% (0.2 atoms per cubic metre) of the matter density required to keep it flat that drives us to call on dark stuff to explain the universe. Indeed we can’t easily call on just matter, light or dark, to account for how our universe sustains its critical density in the face of expansion, let alone accelerated expansion – since whatever it is appears out of nowhere. So, we appeal to dark energy to make up the deficit – without having a clue what it is.

Given how little relevance conventional matter appears to have in our universe’s geometry, one might question the continuing relevance of the Friedmann equations in modern cosmology. There is more recent interest in the de Sitter universe, another Einstein field equation solution which models a universe with no matter content – its expansion and evolution being entirely the result of the cosmological constant.

De Sitter universes, at least on paper, can be made to expand with accelerating expansion and remain spatially flat – much like our universe. From this, it is tempting to suggest that universes naturally stay flat while they undergo accelerated expansion – because that’s what universes do, their contents having little direct influence on their long-term evolution or their large-scale geometry.
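A rough way to see why accelerated expansion and flatness go together is to write down the spatially flat de Sitter solution. The symbols are assumptions of this sketch: $\Lambda$ is a positive cosmological constant, $a$ the scale factor, $H$ the expansion rate and $k$ the curvature constant.

$$
H^2 = \frac{\Lambda c^2}{3},
\qquad
a(t) = a_0\, e^{H t}
$$

$$
\frac{|k|\, c^2}{a^2 H^2} \;\propto\; e^{-2 H t}
$$

The first line says the expansion rate is constant and the scale factor grows exponentially. The second line is the size of the curvature term relative to the expansion rate if a small amount of curvature is added to such a universe: because $a$ grows exponentially while $H$ stays fixed, the term is diluted away rather than amplified. This is only a heuristic for a universe dominated by a cosmological constant, not a demonstration that our universe had to turn out flat.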

But who knows really – we are both literally and metaphorically working in the dark on this.

Further reading:

Krauss: Why the universe probably is flat (video).