According to current estimates, there could be as many as 100 billion planets in the Milky Way Galaxy alone. Unfortunately, finding evidence of these planets is tough, time-consuming work. For the most part, astronomers are forced to rely on indirect methods that measure dips in a star’s brightness (the Transit Method) or Doppler measurements of the star’s own motion (the Radial Velocity Method).

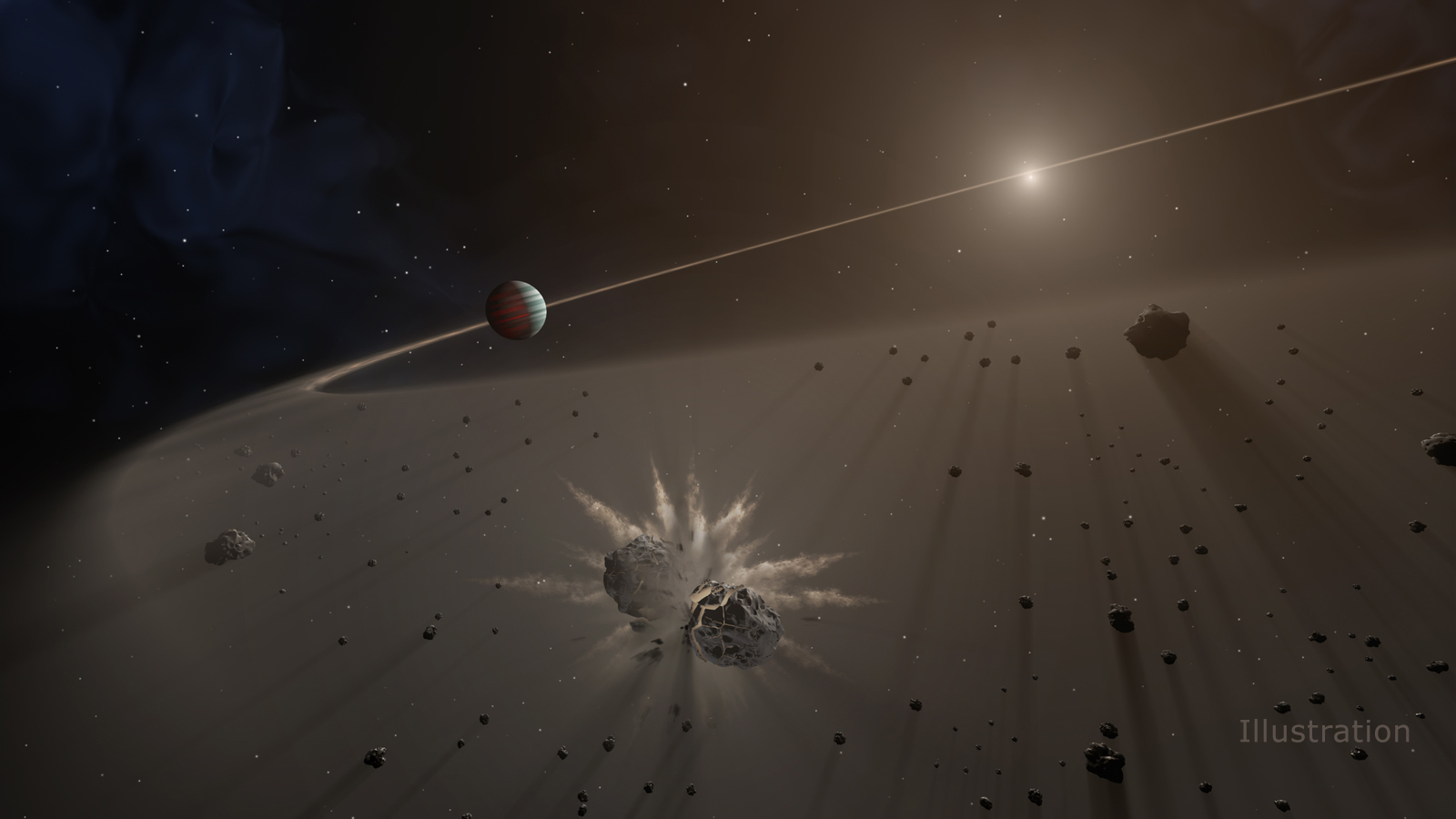

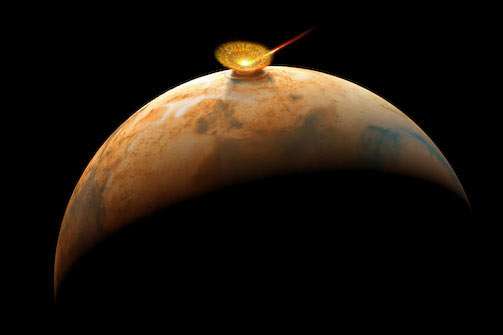

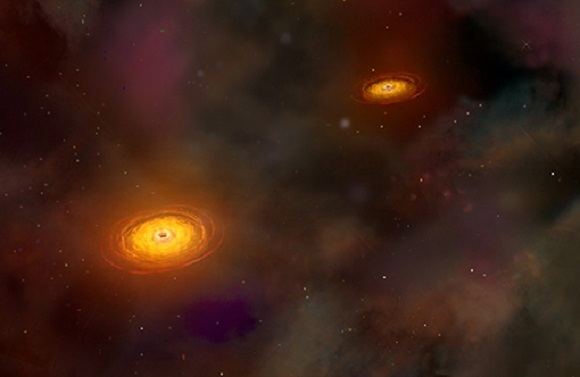

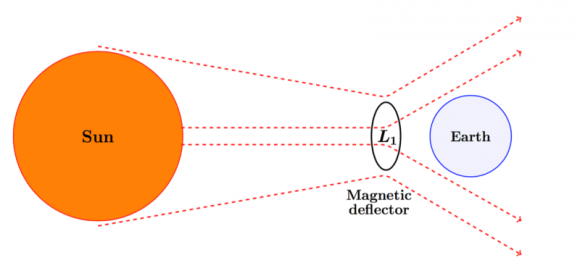

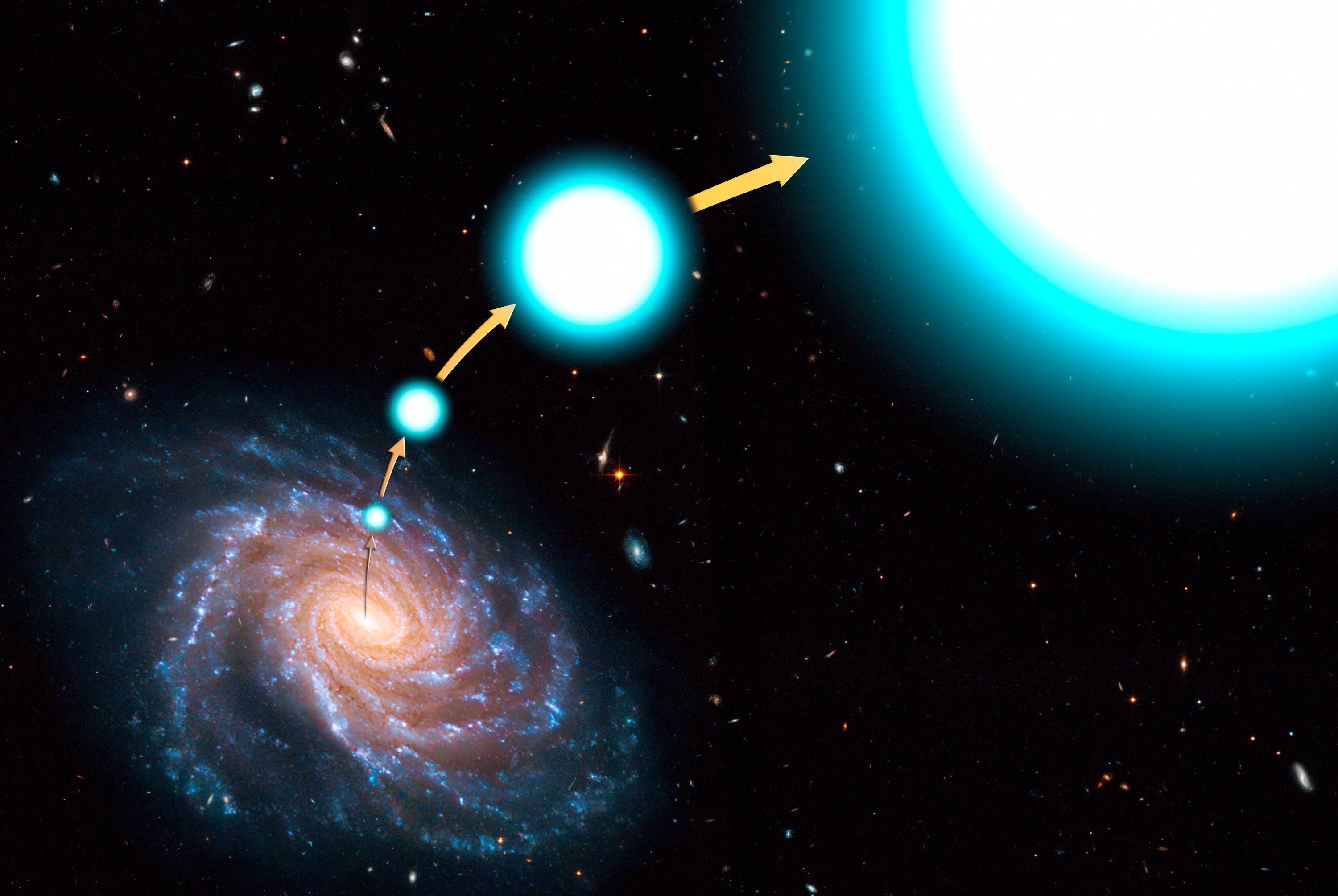

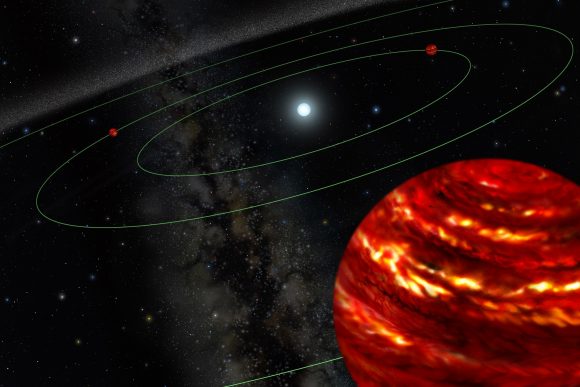

Direct imaging is very difficult because a star’s brightness drowns out the faint light of the planets orbiting it. Luckily, a new study led by the Infrared Processing and Analysis Center (IPAC) at Caltech has determined that there may be a shortcut to finding exoplanets using direct imaging. The solution, they claim, is to look for systems with a circumstellar debris disk, since these are more likely to host at least one giant planet.

The study, titled “A Direct Imaging Survey of Spitzer Detected Debris Disks: Occurrence of Giant Planets in Dusty Systems”, recently appeared in The Astronomical Journal. Tiffany Meshkat, an assistant research scientist at IPAC/Caltech, was the lead author on the study, which she performed while working at NASA’s Jet Propulsion Laboratory as a postdoctoral researcher.

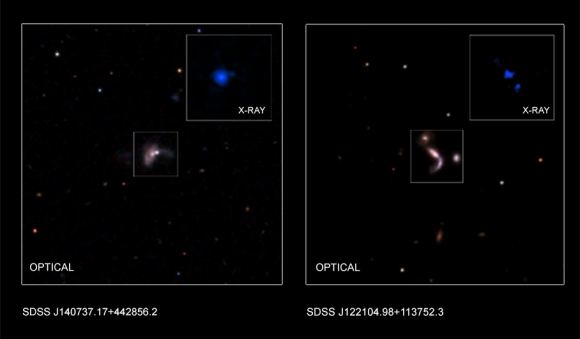

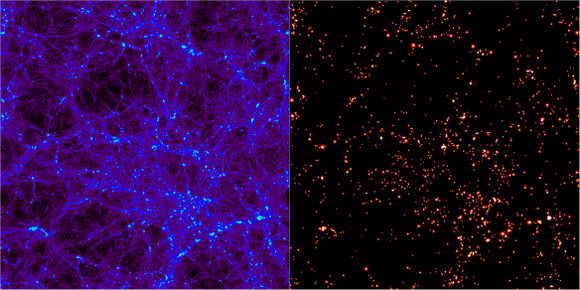

For this study, Dr. Meshkat and her colleagues examined data on 130 different single-star systems with debris disks, which they then compared to 277 stars that do not appear to host disks. These stars were all observed by NASA’s Spitzer Space Telescope and were all relatively young (less than 1 billion years old). Of these 130 systems, 100 had previously been studied in searches for exoplanets.

Dr. Meshkat and her team then followed up on the remaining 30 systems using data from the W.M. Keck Observatory in Hawaii and the European Southern Observatory’s (ESO) Very Large Telescope (VLT) in Chile. While they did not detect any new planets in these systems, their examinations helped characterize the abundance of planets in systems that had disks.

What they found was that young stars with debris disks are more likely to also have giant exoplanets with wide orbits than stars without disks. These planets were also likely to have five times the mass of Jupiter, thus making them “Super-Jupiters”. As Dr. Meshkat explained in a recent NASA press release, this study will be of assistance when it comes time for exoplanet-hunters to select their targets:

“Our research is important for how future missions will plan which stars to observe. Many planets that have been found through direct imaging have been in systems that had debris disks, and now we know the dust could be indicators of undiscovered worlds.”

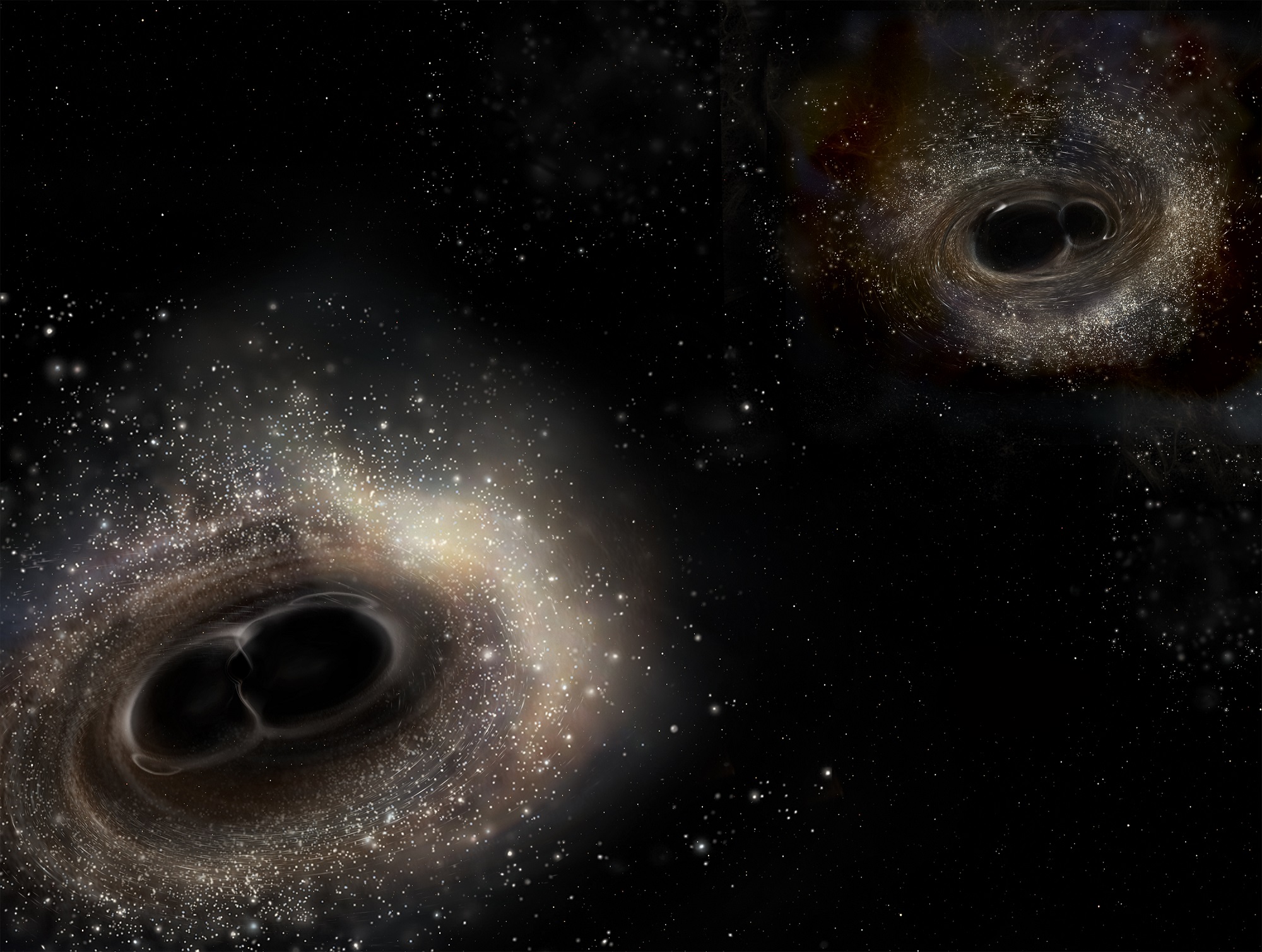

This study, which was the largest examination of stars with dusty debris disks, also provided the best evidence to date that giant planets are responsible for keeping debris disks in check. While the research did not directly resolve why the presence of a giant planet would cause debris disks to form, the authors indicate that their results are consistent with predictions that debris disks are the products of giant planets stirring up and causing dust collisions.

In other words, they believe that the gravity of a giant planet would cause planetesimals to collide, thus preventing them from forming additional planets. As study co-author Dimitri Mawet, who is also a JPL senior research scientist, explained:

“It’s possible we don’t find small planets in these systems because, early on, these massive bodies destroyed the building blocks of rocky planets, sending them smashing into each other at high speeds instead of gently combining.”

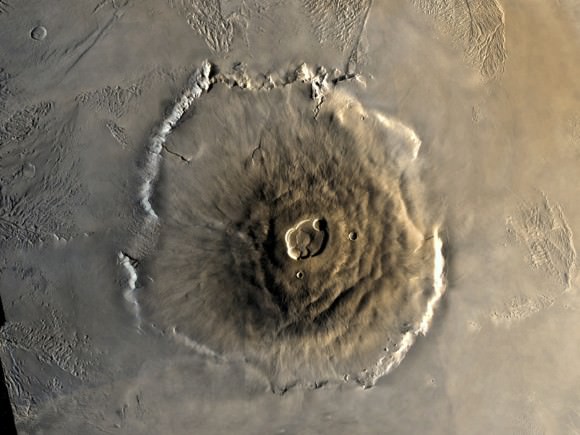

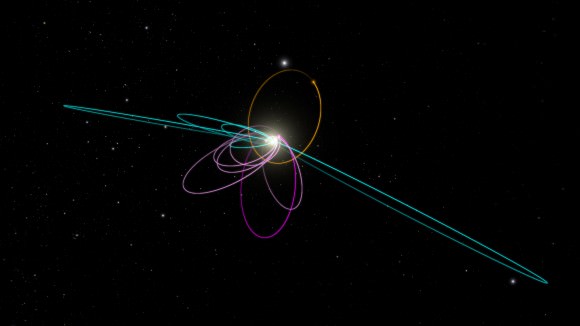

Within the Solar System, the giant planets create debris belts of sorts. For example, between Mars and Jupiter, you have the Main Asteroid Belt, while beyond Neptune lies the Kuiper Belt. Many of the systems examined in this study also have two belts, though they are significantly younger than the Solar System’s own belts – roughly 1 billion years old compared to 4.5 billion years old.

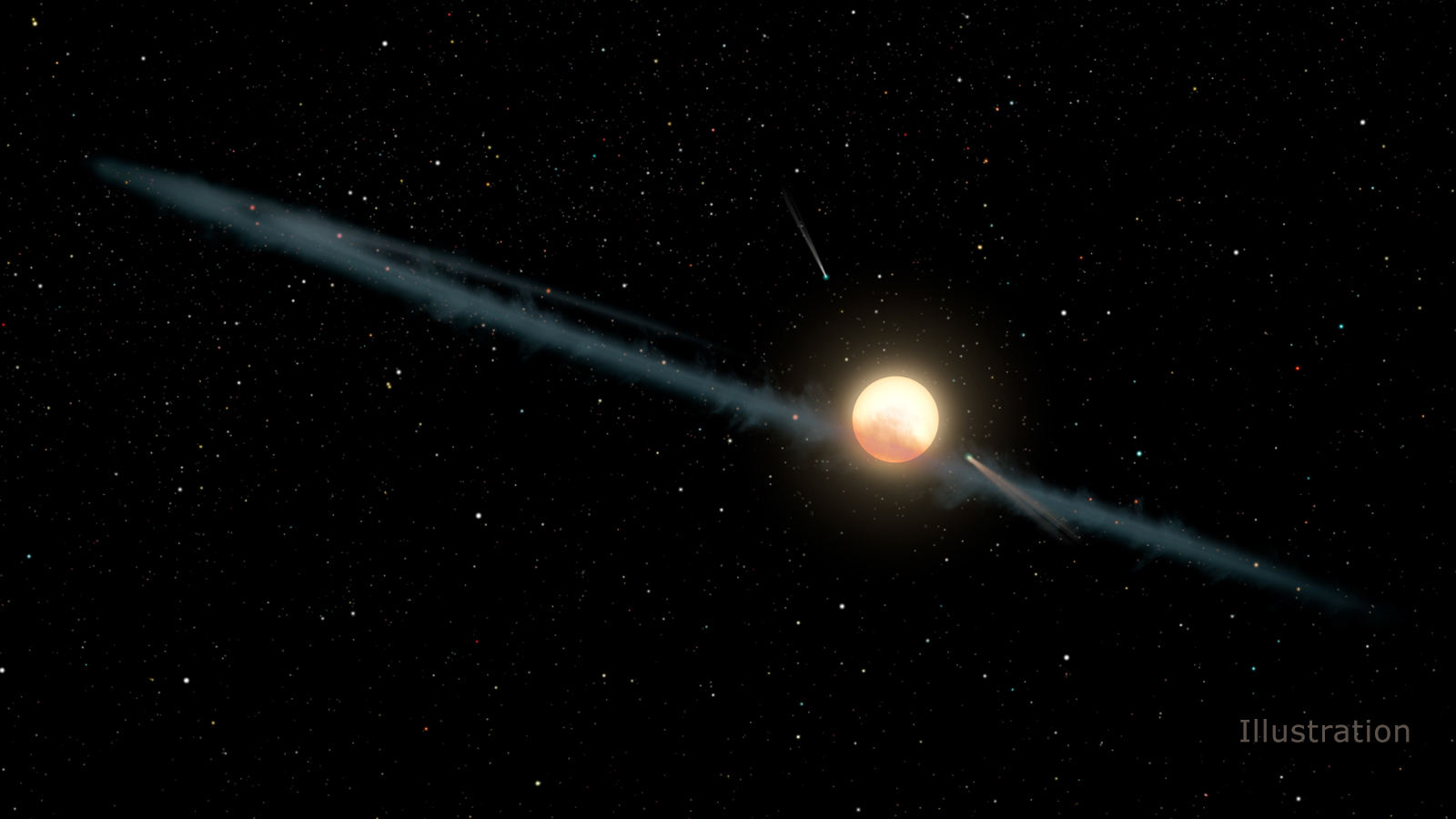

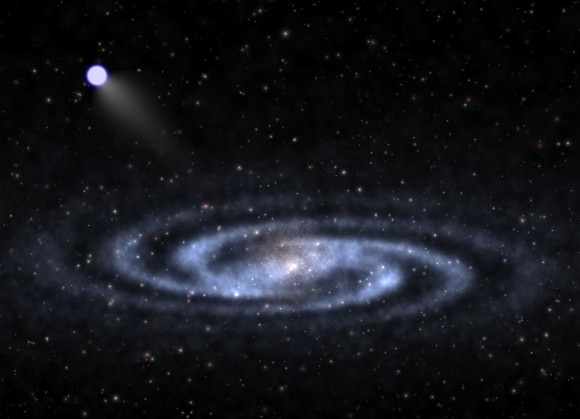

One of the systems examined in the study was Beta Pictoris, a system that has a debris disk, comets, and one confirmed exoplanet. This planet, designated Beta Pictoris b, has 7 Jupiter masses and orbits the star at a distance of 9 AU – i.e. nine times the distance between the Earth and the Sun. This system has been directly imaged by astronomers in the past using ground-based telescopes.
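To put those figures in more familiar units, here is a minimal sketch that converts the quoted orbital distance and mass into kilometers and kilograms. The constant and function names are illustrative choices rather than anything from the study, and the Jupiter mass used is an approximate value.

```python
# Illustrative unit conversions for the Beta Pictoris b figures quoted above.
# The names below are hypothetical helpers, not part of the study.

AU_IN_KM = 149_597_870.7      # astronomical unit in kilometers (IAU definition)
JUPITER_MASS_KG = 1.898e27    # approximate mass of Jupiter in kilograms


def au_to_km(distance_au: float) -> float:
    """Convert a distance in astronomical units to kilometers."""
    return distance_au * AU_IN_KM


def jupiter_masses_to_kg(mass_mj: float) -> float:
    """Convert a mass in Jupiter masses to kilograms."""
    return mass_mj * JUPITER_MASS_KG


if __name__ == "__main__":
    # Values as quoted in the article: 9 AU and 7 Jupiter masses.
    print(f"Orbital distance: {au_to_km(9):.3e} km")
    print(f"Planet mass: {jupiter_masses_to_kg(7):.3e} kg")
```

By this rough arithmetic, the planet orbits well over a billion kilometers from its star, comparable to the distance between Saturn and the Sun.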

Interestingly enough, astronomers predicted the existence of this exoplanet well before it was confirmed, based on the presence and structure of the system’s debris disk. Another system that was studied was HR8799, a system with a debris disk that has two prominent dust belts. In these sorts of systems, the presence of more giant planets is inferred based on the need for these dust belts to be maintained.

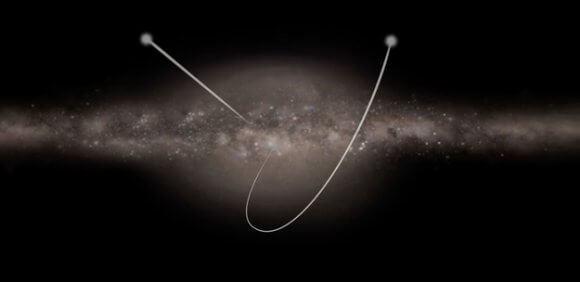

This is believed to be the case for our own Solar System, where 4 billion years ago, the giant planets diverted passing comets towards the Sun. This resulted in the Late Heavy Bombardment, where the inner planets were subject to countless impacts that are still visible today. Scientists also believe that it was during this period that the migrations of Jupiter, Saturn, Uranus and Neptune deflected dust and small bodies to form the Kuiper Belt and Asteroid Belt.

Dr. Meshkat and her team also noted that the systems they examined contained much more dust than our Solar System, which could be attributable to their differences in age. In the case of systems that are around 1 billion years old, the increased presence of dust could be the result of collisions among small bodies that have not yet formed larger bodies. From this, it can be inferred that our Solar System was once much dustier as well.

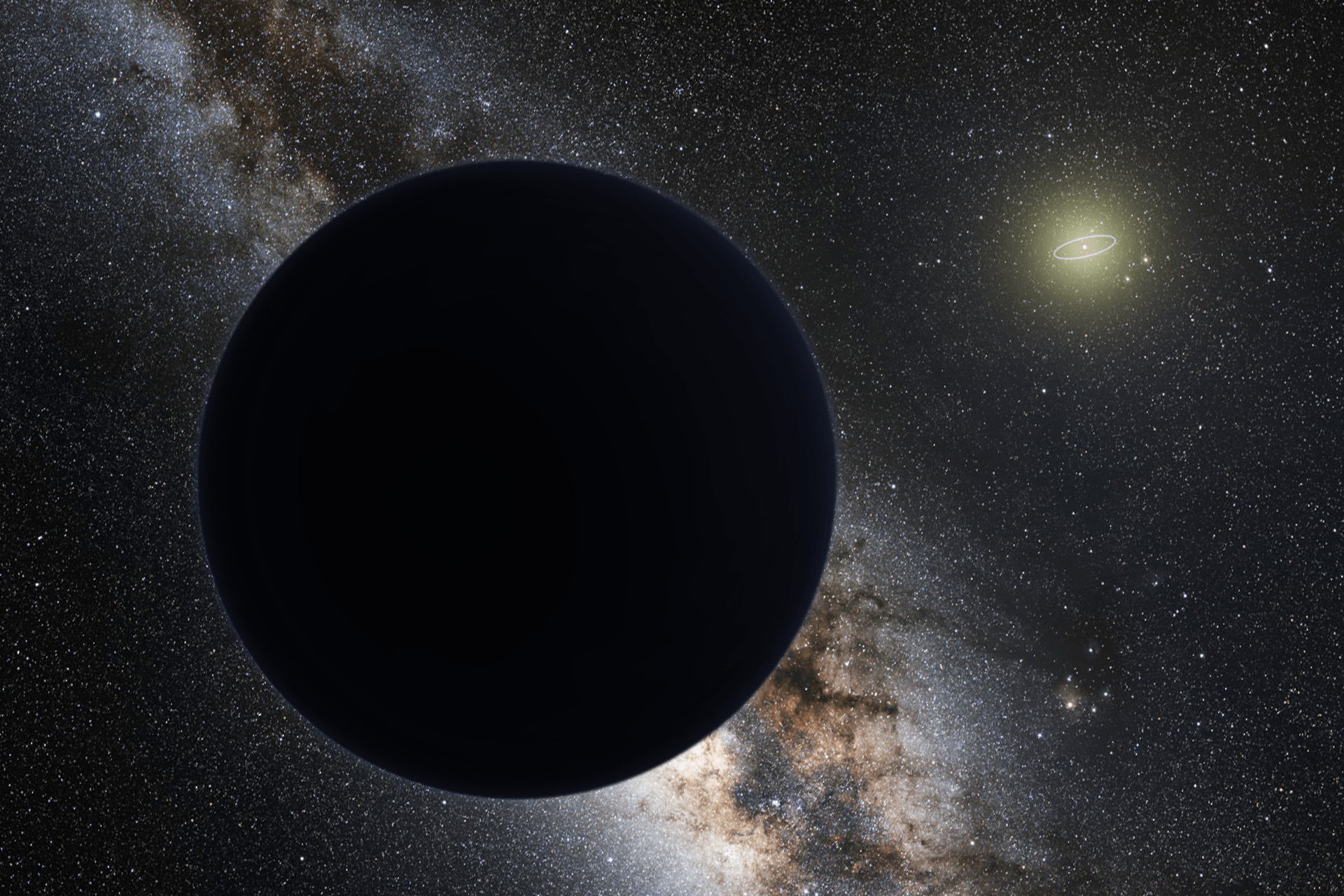

However, the authors note it is also possible that the systems they observed – which have one giant planet and a debris disk – may contain more planets that simply have not been discovered yet. In the end, they concede that more data is needed before these results can be considered conclusive. But in the meantime, this study could serve as a guide as to where exoplanets might be found.

As Karl Stapelfeldt, the chief scientist of NASA’s Exoplanet Exploration Program Office and a co-author on the study, stated:

“By showing astronomers where future missions such as NASA’s James Webb Space Telescope have their best chance to find giant exoplanets, this research paves the way to future discoveries.”

In addition, this study could help inform our own understanding of how the Solar System evolved over the course of billions of years. For some time, astronomers have been debating whether or not planets like Jupiter migrated to their current positions, and how this affected the Solar System’s evolution. And there continues to be debate about how the Main Belt formed (i.e. empty or full).

Last, but not least, it could inform future surveys, letting astronomers know which star systems are developing along the same lines as our own did, billions of years ago. Wherever star systems have debris disks, they can infer the presence of a particularly massive gas giant. And where they have a disk with two prominent dust belts, they can infer that it too will become a system containing many planets and two belts.

Further Reading: NASA, The Astronomical Journal