In this period of heightened geopolitical flux, enthusiasm for advances in planetary exploration can be dampened. But that's not stopping NASA from forging ahead in its efforts.

In December, NASA took another incremental step towards autonomous surface rovers. In a demonstration, the Perseverance team used AI to generate the rover's waypoints. Perseverance followed the AI-generated waypoints on two separate days, travelling a total of 456 meters (1,496 ft) without human control.

“This demonstration shows how far our capabilities have advanced and broadens how we will explore other worlds,” said NASA Administrator Jared Isaacman. “Autonomous technologies like this can help missions to operate more efficiently, respond to challenging terrain, and increase science return as distance from Earth grows. It’s a strong example of teams applying new technology carefully and responsibly in real operations.”

Mars is a long way away, and a radio signal takes about 25 minutes on average to make the round trip between Earth and Mars. That means that, one way or another, rovers are on their own for periods of time.
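
As a rough check on that 25-minute figure, the round-trip light time is simply twice the Earth-Mars distance divided by the speed of light. The sketch below assumes approximate distances of about 55 million km at closest approach, 225 million km on average, and 401 million km at the farthest; the function name is illustrative, and real command latency is longer because of relay hops and scheduling.

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458


def round_trip_delay_minutes(distance_km: float) -> float:
    """Earth-Mars round-trip light time in minutes for a given distance.

    Ignores relay hops, onboard processing, and scheduling on the
    Deep Space Network, all of which add to the real delay.
    """
    one_way_s = distance_km * 1_000 / SPEED_OF_LIGHT_M_S
    return 2 * one_way_s / 60


# Approximate Earth-Mars distances in km (rounded, illustrative values).
DISTANCES_KM = {"closest": 55e6, "average": 225e6, "farthest": 401e6}

for label, km in DISTANCES_KM.items():
    print(f"{label:>8}: {round_trip_delay_minutes(km):5.1f} min round trip")
```

At the average distance this works out to roughly 25 minutes, while the full range runs from about 6 minutes to about 45 minutes as the two planets move around the Sun.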

The delay shapes the route-planning process. Rover drivers here on Earth examine images and elevation data and program a series of waypoints, usually spaced no more than 100 meters (330 ft) apart. The driving plan is sent to NASA's Deep Space Network (DSN), which transmits it to one of several Mars orbiters for relay to Perseverance. (Perseverance can also receive commands directly from the DSN as a backup, but at a slower data rate.)

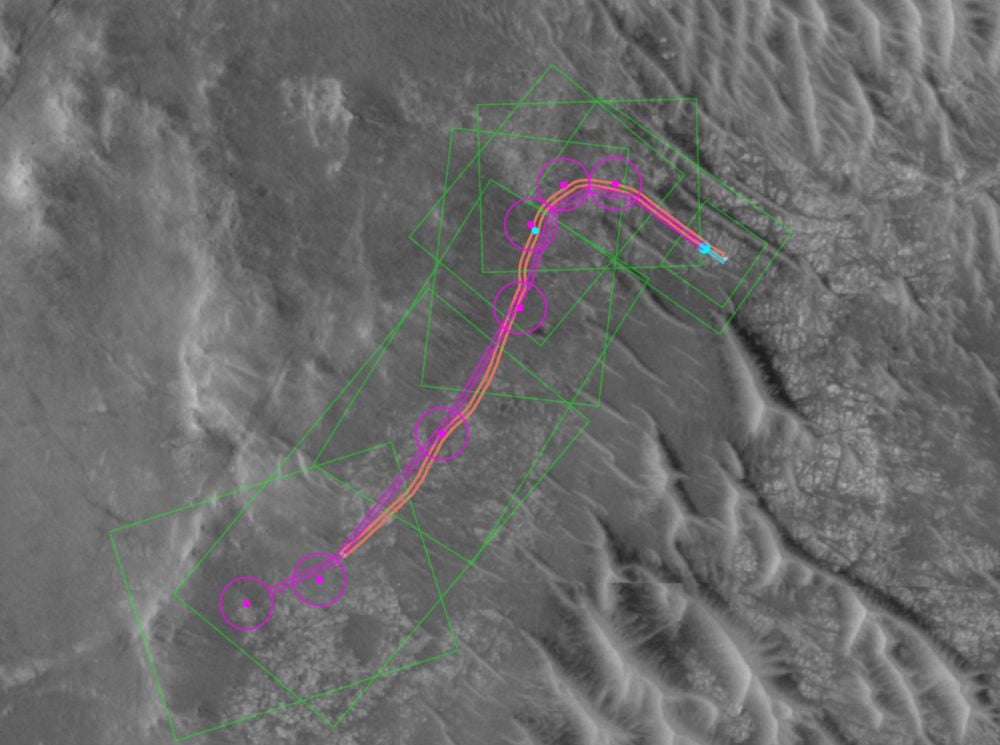

In this demonstration, the AI analyzed orbital images from the Mars Reconnaissance Orbiter's HiRISE camera along with digital elevation models. The AI, which is based on Anthropic's Claude, identified hazards like sand traps, boulder fields, bedrock, and rocky outcrops. It then generated a path, defined by a series of waypoints, that avoids those hazards. From there, Perseverance's auto-navigation system took over. That system is more autonomous than those on earlier rovers and can process images and driving plans while the rover is in motion.
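
The article doesn't say how the Claude-based planner works internally, so the sketch below is only a classical stand-in for the same job: A* search over a hypothetical hazard grid (`'#'` cells standing in for sand traps or boulder fields, each cell assumed to be 10 m wide), followed by thinning the path into waypoints no more than 100 m apart, matching the usual spacing mentioned above. The grid, cell size, and function names are all assumptions for illustration.

```python
import heapq
import math

# Hypothetical hazard map: '.' is drivable, '#' is a hazard cell
# (sand trap, boulder field, ...). Each cell is assumed to be CELL_M meters wide.
CELL_M = 10.0
GRID = [
    "..........",
    "....##....",
    "....##....",
    "....##....",
    "..........",
]


def astar(grid, start, goal):
    """Shortest 8-connected path of (row, col) cells that avoids '#' cells."""
    rows, cols = len(grid), len(grid[0])
    open_heap = [(math.dist(start, goal), 0.0, start)]
    came_from = {start: None}
    best_g = {start: 0.0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:  # walk parent links back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nxt = (r + dr, c + dc)
                if (dr, dc) == (0, 0) or not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                    continue
                if grid[nxt[0]][nxt[1]] == "#":
                    continue
                ng = g + math.hypot(dr, dc)
                if ng < best_g.get(nxt, math.inf):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    # Euclidean distance to the goal is an admissible heuristic here.
                    heapq.heappush(open_heap, (ng + math.dist(nxt, goal), ng, nxt))
    return None  # no hazard-free route exists


def to_waypoints(path, max_spacing_m=100.0):
    """Keep a subset of path cells so consecutive waypoints are at most
    max_spacing_m apart when measured along the path."""
    waypoints = [path[0]]
    since_last = 0.0
    for prev, cell in zip(path, path[1:]):
        step = math.dist(prev, cell) * CELL_M
        if since_last + step > max_spacing_m:
            waypoints.append(prev)
            since_last = 0.0
        since_last += step
    if waypoints[-1] != path[-1]:
        waypoints.append(path[-1])
    return waypoints


path = astar(GRID, (2, 0), (2, 9))
print(to_waypoints(path))
```

Splitting planning into a dense hazard-avoiding path and a sparse list of waypoints mirrors the division of labor described above: the planner picks the route, and the rover's onboard navigation handles the driving between waypoints.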

There was another important step before these waypoints were transmitted to Perseverance. NASA's Jet Propulsion Laboratory (JPL) has a “twin” of Perseverance called the “Vehicle System Test Bed” (VSTB) in JPL’s Mars Yard. It's an engineering model the team can use here on Earth to troubleshoot problems and to check commands like these before they are sent to Mars. Such engineering models are common on Mars missions; JPL has one for Curiosity, too.

*This is the full-scale engineering model of NASA's Perseverance rover. JPL used it to test the waypoint instructions generated by AI before sending them to Perseverance. Image Credit: NASA/JPL-Caltech*

“The fundamental elements of generative AI are showing a lot of promise in streamlining the pillars of autonomous navigation for off-planet driving: perception (seeing the rocks and ripples), localization (knowing where we are), and planning and control (deciding and executing the safest path),” said Vandi Verma, a space roboticist at JPL and a member of the Perseverance engineering team. “We are moving towards a day where generative AI and other smart tools will help our surface rovers handle kilometer-scale drives while minimizing operator workload, and flag interesting surface features for our science team by scouring huge volumes of rover images.”

The video below is based on Perseverance's second AI drive and is made from data the rover acquired during that journey. The mission’s “drivers,” or rover planners, use visualizations like this to understand the rover’s autonomous decision-making, because they show why it chose one specific path over other options. The pale blue lines depict the track the rover’s wheels follow. The black lines snaking out in front of the rover depict the different path options the rover is considering from moment to moment. The white terrain Perseverance drives onto in the animation is a height map generated using data the rover collected during the drive. The pale blue circle that appears in front of the rover near the end of the animation is a waypoint.
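
As a toy version of what those black lines represent, the sketch below generates a handful of candidate constant-curvature arcs, discards any that pass too close to a known hazard, and keeps the one that ends nearest the waypoint. This is not Perseverance's actual navigation algorithm, which builds its own picture of the terrain from images taken while driving; the arc model, the 0.5 m clearance, the hazard list, and the distance-to-waypoint cost are all assumptions for illustration.

```python
import math


def arc_points(x, y, heading, curvature, length=5.0, steps=10):
    """Sample points along a constant-curvature arc (curvature in 1/m)."""
    pts = []
    ds = length / steps
    for _ in range(steps):
        heading += curvature * ds
        x += ds * math.cos(heading)
        y += ds * math.sin(heading)
        pts.append((x, y))
    return pts


def choose_arc(pose, waypoint, hazards, curvatures, clearance=0.5):
    """Drop arcs that pass within `clearance` m of a hazard, then pick the
    arc whose end point lands closest to the waypoint."""
    best = None  # (cost, curvature, points)
    for k in curvatures:
        pts = arc_points(*pose, k)
        if any(math.dist(p, h) < clearance for p in pts for h in hazards):
            continue  # unsafe option: rejected before scoring
        cost = math.dist(pts[-1], waypoint)
        if best is None or cost < best[0]:
            best = (cost, k, pts)
    return best  # None if every candidate was unsafe


pose = (0.0, 0.0, 0.0)        # x (m), y (m), heading (rad)
waypoint = (10.0, 0.0)
hazards = [(3.0, 0.0)]        # a rock directly on the straight-line route
options = [-0.3, -0.15, 0.0, 0.15, 0.3]
cost, k, _ = choose_arc(pose, waypoint, hazards, options)
print(f"chose curvature {k:+.2f} 1/m, ending {cost:.2f} m from the waypoint")
```

Re-running a choice like this every few meters, as new terrain data arrives, is what produces the shifting fan of options seen in the animation.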

AI is rapidly becoming ubiquitous in our lives, showing up in places that don't necessarily have a strong use case for it. But this isn't NASA hopping on the AI bandwagon. The agency has been developing automatic navigation systems for years, out of necessity. In fact, Perseverance's primary means of driving is its autonomous navigation system.

One thing that prevents fully autonomous driving is that uncertainty grows as the rover operates without human assistance: the farther the rover travels, the less certain it is of its position on the surface. The solution is to re-localize the rover on its map. Currently, humans do this, but it takes time, including a complete communication cycle between Earth and Mars. Overall, this limits how far Perseverance can go without a helping hand.

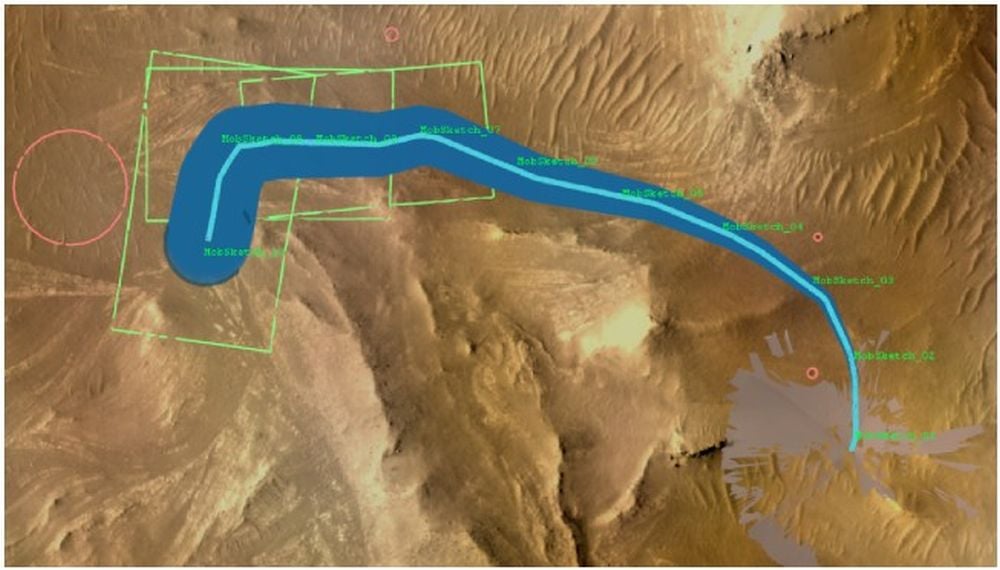

*The blue in this image shows how the rover's uncertainty about its position grows the farther it drives on a single set of instructions. Perseverance drove a total of 655 meters in this image, shown by the light blue line, starting in the lower right and ending in the upper left. The uncertainty grew from 0 meters at the start of the drive to almost 33 meters at the end, shown by the progressively thickening blue region. Image Credit: Verma et al. 2024.*
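
To put rough numbers on the re-localization trade-off, the sketch below fits a straight line to the two endpoints in the caption (0 m of uncertainty at the start, about 33 m after 655 m), which is roughly 5 cm of uncertainty per meter driven. The linear-growth model, the 10 m limit, the 1 m post-fix uncertainty, and the function names are all hypothetical; real position error need not grow linearly.

```python
# Assumption: position uncertainty grows linearly with distance driven, at a
# rate fitted to the two endpoints in the caption (0 m at the start, ~33 m
# after 655 m). Real odometry error need not grow linearly.
DRIFT_PER_M = 33.0 / 655.0  # ~0.05 m of uncertainty per meter driven


def uncertainty_m(distance_m, start_m=0.0):
    """Position uncertainty after driving distance_m from a known fix."""
    return start_m + DRIFT_PER_M * distance_m


def relocalization_points(total_m, limit_m, after_fix_m=1.0):
    """Distances along a drive at which a (hypothetical) re-localization must
    happen to keep uncertainty at or below limit_m. Each fix is assumed to
    shrink uncertainty back to after_fix_m."""
    if limit_m <= after_fix_m:
        raise ValueError("limit_m must exceed the post-fix uncertainty")
    points, driven, sigma = [], 0.0, 0.0
    while True:
        # Distance until uncertainty climbs from its current level to the limit.
        leg = (limit_m - sigma) / DRIFT_PER_M
        if driven + leg >= total_m:
            return points
        driven += leg
        points.append(driven)
        sigma = after_fix_m


print(f"{uncertainty_m(655):.1f} m after 655 m")
print([round(d) for d in relocalization_points(2_000, limit_m=10.0)])
```

Under these assumptions a 2 km drive with a 10 m limit needs a fix roughly every 180 to 200 m. When each fix requires a full Earth-Mars communication cycle, that quickly becomes the bottleneck, which is why onboard re-localization matters.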

NASA/JPL is also working on a way for Perseverance to use AI to re-localize itself. The main challenge is matching orbital images with the rover's ground-level images. It seems likely that AI can be trained to excel at this.
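
The article doesn't describe how JPL's AI-based matching works. As a classical stand-in for the underlying idea, the sketch below slides a small elevation patch (imagined as something the rover mapped around itself) across a larger orbital elevation map and scores each offset with normalized cross-correlation; the best-scoring offset is the rover's estimated position. The toy maps, grid size, and function names are hypothetical.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-size 2-D grids (-1..1)."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    return num / den if den else 0.0  # flat patches carry no information


def localize(orbital, local):
    """Slide the rover-derived patch over the orbital map and return the
    (row, col) offset with the highest correlation, plus that score."""
    rows, cols = len(orbital), len(orbital[0])
    h, w = len(local), len(local[0])
    best_offset, best_score = None, -math.inf
    for r in range(rows - h + 1):
        for c in range(cols - w + 1):
            window = [row[c:c + w] for row in orbital[r:r + h]]
            score = ncc(window, local)
            if score > best_score:
                best_offset, best_score = (r, c), score
    return best_offset, best_score


# Toy 8x8 orbital elevation map (meters): flat ground plus one distinctive rock cluster.
orbital = [[0.0] * 8 for _ in range(8)]
orbital[3][4], orbital[3][5], orbital[4][4] = 5.0, 1.0, 2.0

# Pretend the rover mapped the 3x3 area whose top-left corner is at (2, 3).
local = [row[3:6] for row in orbital[2:5]]
print(localize(orbital, local))
```

The hard part in practice is that the rover's ground-level view and the orbital view look very different (viewpoint, resolution, lighting), which is exactly where a learned matcher could outperform a hand-built score like this one.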

It's clear that AI is set to play a much larger role in planetary exploration. The next Mars rover may be very different from current ones, with more advanced autonomous navigation and other AI features. There are already concepts for a swarm of flying drones, released by a rover, to extend its exploratory reach on Mars. AI would control these drones so they could work together autonomously.

And it's not just Mars exploration that will benefit from AI. NASA's Dragonfly mission to Saturn's moon Titan will make extensive use of AI, not only for autonomous navigation as the rotorcraft flies around, but also for autonomous data curation.

“Imagine intelligent systems not only on the ground at Earth, but also in edge applications in our rovers, helicopters, drones, and other surface elements trained with the collective wisdom of our NASA engineers, scientists, and astronauts,” said Matt Wallace, manager of JPL’s Exploration Systems Office. “That is the game-changing technology we need to establish the infrastructure and systems required for a permanent human presence on the Moon and take the U.S. to Mars and beyond.”

Universe Today
