Chris Lewicki is the President and Chief Engineer for one of the most pioneering and audacious companies in the world today. Planetary Resources was founded in 2008 by two leading space advocates: Peter Diamandis, Chairman and CEO of the X-Prize Foundation, and Eric Anderson, a forerunner in the field of space tourism. From the earliest days of the company, in turning to Lewicki, Anderson and Diamandis have gained scientific and management expertise which reaches far beyond low Earth orbit.

Chris is a recipient of two NASA Exceptional Achievement Medals and has an asteroid named in his honour, 13609 Lewicki. Chris holds bachelor’s and master’s degrees in Aerospace Engineering from the University of Arizona.

In this exclusive interview with Nick Howes, Lewicki gives us a feel for what lies behind Planetary Resources’ most compelling step yet in their quest to bring space to the masses.

Nick Howes – So Chris, what first inspired you to get into astronomy and space science?

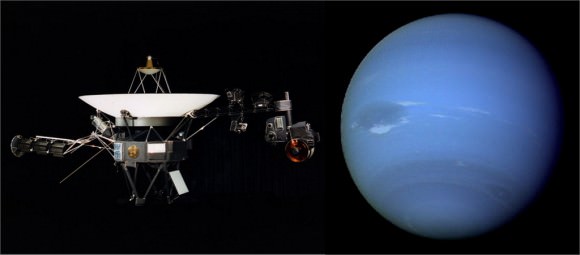

Chris Lewicki – So, I guess it wasn’t a person as most would say, but a mission that got me started on this road. Even before college, and you have to remember I grew up in dairy country in Northern Wisconsin, where we didn’t really have much in the way of space, I wanted to do something interesting, and found I was good at math. When I saw the Voyager 2 spacecraft flyby of Neptune and Triton, I thought “wow this is it,” and wanted to work at JPL pretty much from that moment onwards, thinking that this was a “really special place.”

NH – At college were you determined to work for someone like NASA, and was your time at Blastoff a good stepping stone into this?

CL – I think it really did start even before college, like I said; from the Voyager 2 encounter and all the subsequent missions which JPL were involved in, this was kind of the goal. Ahead of JPL though, was my first encounter with Peter (Diamandis) and Eric (Anderson) when we worked on starport.com where I was a web developer. Prior to that I’d had a spell at the Goddard Space Flight Center, but with Eric and Peter, we really did form a bond. Starport didn’t last too long though, as it was at the time of the dotcom boom and bubble, but it taught me some valuable lessons in those months.

Then I took up a position at JPL, but as you probably know, not everything they do is mission design and planning, and while it is an amazing place, I wanted to get my hands on some real mission stuff, so moved on after just under a year.

Then came Blastoff which kind of set a lot of the wheels in motion for ideas relating to the Google Lunar X-Prize. We had a lot of fun there designing rovers and exploratory missions to the Moon, lots of great people with great ideas.

I was then at a small satellites conference in Utah, when a representative of JPL came up to me after my talk, gave me his business card and effectively said I should come and do an interview for them. Peter and Eric didn’t really want me to go, but I told them “I really have to go off and learn how to build rockets.” Thus really started the real journey working with NASA on some of the most exciting missions in recent history.

NH – How thrilling was it being the flight director for two of the most successful missions in NASA’s history?

CL – Thrilling really doesn’t come close to covering it. There I was, 29 years old, thinking “should I really be doing this?” but then, realising “yes, I can do this,” sitting at the flight director’s desk for two of NASA’s most audacious missions, being Spirit and Opportunity. It was my role to get them safely down on the surface, and boy did we test those missions.

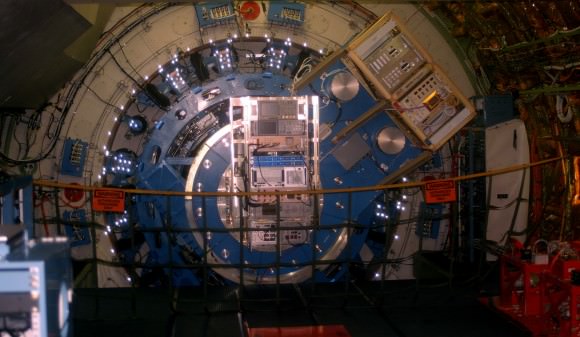

The simulators were so realistic; we’d be running so many different scenarios for years prior to the actual EDL phase, now known as the “7 minutes of terror”. It really doesn’t feel quite real though when it’s actually happening; you just know it is because the room is full of TV cameras, and you have that extra notion in the back of your mind saying it’s not a sim this time. The telemetry in the simulations, though, was so close to the real data, with just a few variations, that it kind of showed how much testing and planning went into those missions, and how it all paid off.

NH – With Phoenix you’d obviously experienced the sadness of the loss of Polar Lander beforehand; did that teach you any valuable lessons which you have now carried forward to your role at Planetary Resources?

CL – Phoenix started with a failure review, but that’s what I think is so important about engineering and indeed life in general. You have to fail to understand how to make things better. During that design review we figured out a dozen more reasons for things that could have gone wrong with Mars Polar Lander, and implemented the changes for Phoenix. You have to plan for failure so much with missions of this type, and it’s quite an exhilarating but in some ways stressful ride, and one where, after Phoenix, I felt like I needed to pass the mantle on for Curiosity.

NH – On the topic of Planetary Resources, when did you start to think about being part of a company of this magnitude?

CL – Well working with Peter and Eric again was mooted as long ago as 2008, the company ideas being formulated then when it was called Arkyd Astronautics, a name which stuck with us until 2012. Eric and Peter approached me about possibly coming back. As I said, I’d pretty much resigned myself to not working on Curiosity, and having to put myself through all of the phases associated with that landing, and there’s a quote which many people believe comes from Mark Twain, but is really from Jackson Brown, that basically says

“Twenty years from now you will be more disappointed by the things that you didn’t do than by the ones you did do. So throw off the bowlines. Sail away from the safe harbor. Catch the trade winds in your sails. Explore. Dream. Discover.” I decided to throw off the bowlines and set sail with Planetary Resources.

NH – How do you see the relationship of a company like Planetary Resources with the major space agencies? Do you see yourselves as complementing them or competing?

CL – Complementing, totally. NASA has over 50 years of incredible exploration, missions, research, development and insight, and a great future ahead of them too. With NASA recently transferring some of their low Earth orbit operations into the commercial sector, we feel that this is really a great time to be in this industry, with our goals for being at the forefront of the types of science and commercial operations that the business sector can excel in, leaving NASA to focus on the amazing deep space missions, like landing on Europa or going back to Titan, missions like that, which only the large government agencies can really pull off at this time.

NH – The Arkyd has to be one of the most staggering Kickstarter success stories ever, raising around $800,000 in a week… did you imagine that the reaction to putting a space telescope available for all into orbit would garner so much enthusiasm?

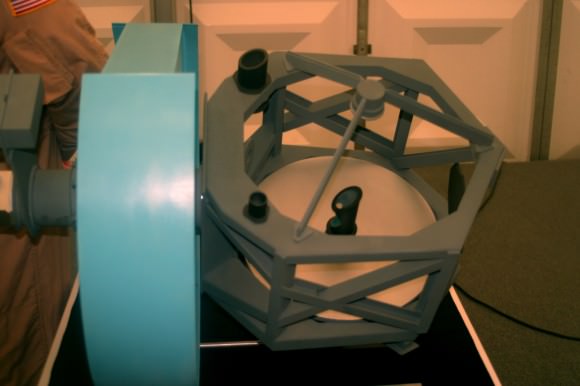

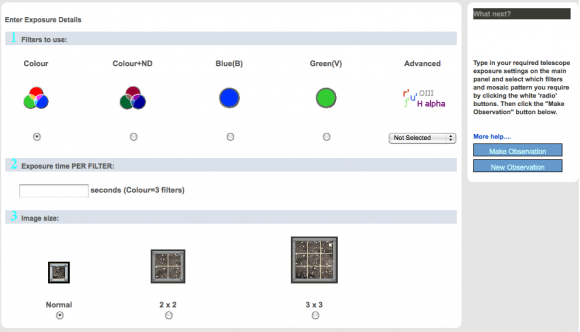

CL – Staggering again doesn’t really do it enough justice. This is the biggest space-based Kickstarter in their history; as it’s also in the photography category, it’s the biggest photographic Kickstarter ever too. We have many more surprises planned which I can’t go into now, but in setting the $1 million minimum bar to “test the water” with public interest in a space telescope, we’ve not really exceeded expectations, but absolutely reached what we felt was possible. From talking to people ahead of the launch, and just seeing their reaction (note from author: I was one of those people, and my reaction was jaw dropping) we knew we had something really special. The idea of the space selfie we felt was part of the cornerstone of what we wanted to achieve, opening up space to everyone, not just the real die-hard space enthusiasts.

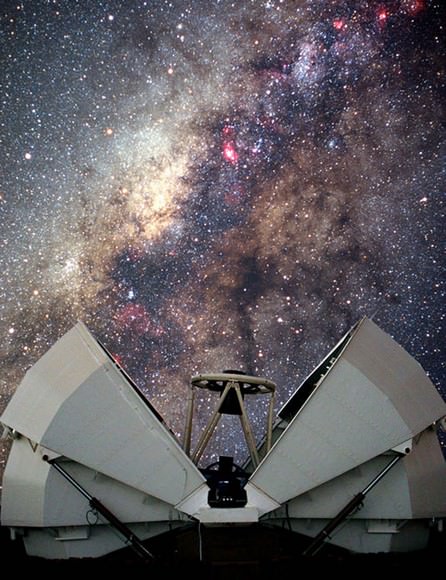

NH – With the huge initial success of the Arkyd project, do you see any scope for a flotilla of space telescopes for the public, much like say the LCOGT or iTelescope networks are on Earth?

CL – Possibly in the future. You yourself know from your work with the Las Cumbres, Faulkes and iTelescope networks that having a suite of telescopes around the planet has huge benefits when it comes to observations and science. At present we have the plan for one telescope for public use as you know.

That is the Arkyd 100, which will be utilising the same Arkyd technologies that we’ll be using to examine near-Earth asteroids. If you think that in the last 100 years, the Hales, Lowells etc. of this world were all private individuals sponsoring and building amazing instruments for space exploration, it’s really just a natural progression on from this. We’re partnering closely with the Planetary Society on this, as they have goals and interests in common with us, and also with National Geographic. We feel this really does open up space to a whole new group of people, and it’s apparent from the phenomenal interest we’ve had from Kickstarter, and the thousands of people who’ve pledged their support, that this vision was right.

NH – Planetary Resources has some huge goals in terms of asteroids in future, but you seem to have a very balanced and phased scientific plan to study and then proceed to the larger scale operations. Does this come from your science background?

CL – As I said, I grew up in dairy country in Wisconsin, where I had to really make my own opportunities to be a part of this industry; there was no space there. Having said that, I have been an advocate of space pretty much all my life, and yes, I guess my scientific background, and experience with working at JPL, has come to bear in Planetary Resources. We have a solid plan in terms of risk management with our “swarm” mentality, of sending up lots of spacecraft, and even if one or more fails, we’ll still be able to get valuable science data. I see it really in that lots of people have big ideas, and set up companies with them, but then after initial investment dries up, the ideas may still be big and there, but there is no way to pursue them.

We’ve all come from companies which have seen this kind of mindset in the past, and now, whilst we love employing students and college graduates who have big ideas, who take chances, we have a plan, a long-term and sustainable plan, and yes, we’re taking a steady approach to this, so that we can guarantee that our investors get a return on what they have supported.

NH – Can you give us a timeline for what Planetary Resources aim to achieve?

CL – Our first test launch will be as early as 2014, and then in 2015 we’ll start with the space telescopes using the Arkyd technology. By 2017 we hope to be identifying and on our way to classification of potentially interesting NEO targets for future mining. By the early 2020s the aim is to be doing extraction from asteroids, and starting sample return missions.

NH – You were, and it seems from all I have read still remain, passionate about student involvement, with SEDS etc. What could you say to younger people inspired by what you’re doing to encourage them to get into the space industry?

CL – Tough one, but I’d say that looking at the people you admire, always remember that they are not superhuman, they are like you and me, but to have goals, take chances and be determined is a great way to look forward. The SEDS movement played a big part in my early life, and I would encourage any student to get involved in that for sure.

NH – In conclusion, what would be your ultimate goal as a pioneer of the new frontier in space exploration?

CL – Our ultimate goal is to be the developer of the economic engine that makes space exploration commercially viable. Once we have established that, we can then look at more detailed exploration of space, with tourism, scientific missions, and extending our reach out even further. I’ve already been a part of placing three missions on the surface of Mars, so nothing really is beyond our reach.

Nick’s closing comments:

I first met Chris at the Spacefest V conference in Tucson, where he gave me a preview of the Arkyd space telescope. After meeting him, there is no doubt in my mind that he and the team at Planetary Resources will succeed in their mission. A quite brilliant individual, but humble with it, someone who you can spend hours talking to and come away feeling truly inspired. For this interview we talked for what seemed like hours, and Chris said I could have written a book with the answers he gave. I hope this article gives you some taste, however, of the person behind the missions which, at the new frontier of exploration, much like the prospectors in the Gold Rush, are charting new and unknown, yet hugely exciting territories. As the old saying goes… and possibly more aptly than ever… watch this space.

You can find out more about the ARKYD project at the Planetary Resources website.