Admittedly, Mars has drawn more space missions than any other planet in the Solar System, but why have nearly two thirds of all Mars missions failed in some way? Is the “Galactic Ghoul” or the “Mars Triangle” real? Or is it a case of technological trial-and-error? In any case, the Mars Curse has been a matter of debate for many years, but recent missions to the Red Planet have not only reached their destination, they are surpassing our wildest expectations. Perhaps our luck is changing…

In 1964, NASA’s Mariner 3 was launched from Cape Canaveral Air Force Station. In space, its solar panels failed to open and the batteries went flat. Now it’s orbiting the Sun, dead. In 1965, Soviet controllers lost contact with Zond 2 after it lost one of its solar panels. It floated lifelessly past Mars in August of that year, only 1,500 km away from the planet. In March and April 1969, the twin probes in the Soviet Mars 1969 program both suffered launch failures: 1969A exploded minutes after launch, and 1969B took a U-turn and crashed to Earth. More recently, NASA’s Mars Climate Orbiter crashed into the Red Planet in 1999 after an embarrassing measurement unit mix-up caused the satellite to enter the atmosphere too low. On Christmas 2003, the world waited for a signal from the UK Mars lander, Beagle 2, after it separated from ESA’s Mars Express. To this day, there’s been no word.

The past 48 years of Mars exploration make for sad reading. A failed mission here, a “lost” mission there, with some unknowns thrown in for good measure. It would seem that mankind’s efforts to send robots to Mars have been thwarted by bad luck and strange mysteries. Is there some kind of Red Planet Triangle (much like the Bermuda Triangle), perhaps with its corners pointing to Mars, Phobos and Deimos? Is the Galactic Ghoul really out there devouring billions of dollars’ worth of hardware?

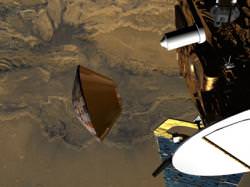

The “Galactic Ghoul” has been mentioned jokingly by NASA scientists to describe the misfortune of space missions, particularly Mars missions. Looking at the statistics of failed missions, you can’t help but think that there are some strange forces at play. During NASA’s Mars Pathfinder mission, there was a technical hitch with the deflated airbags after the lander touched down in 1997, prompting one of the rover scientists to suggest that perhaps the Galactic Ghoul was beginning to rear its ugly head:

“The great galactic ghoul had to get us somewhere, and apparently the ghoul has decided to pick on the rover.” – Donna Shirley, JPL’s Mars program manager and Sojourner’s designer, in an interview in 1997

Well, there are plenty of answers that explain the losses of these early forays to Mars, putting the Galactic Ghoul to one side for now.

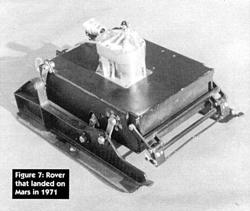

The story begins with the very first manmade objects to land on the Martian surface: Mars 2 and Mars 3, Soviet-built lander/orbiter missions launched in 1971. The lander from Mars 2 is famous for being the first ever robotic explorer on the surface of Mars, but it is also infamous for making the first manmade crater on the surface of Mars. The Mars 3 lander had more luck: it was able to make a soft landing and transmit a signal back to Earth… for 20 seconds. After that, the robot was silenced.

Both landers had the first generation of Mars rovers on board; tethered to the landing craft, they would have had a range of 15 meters from the landing site. Alas, neither was used. It is thought that the Mars 3 lander was blown over by one of the worst dust storms observed on Mars.

To travel from Earth to Mars over a long seven months, separate from its orbiter, enter the Martian atmosphere and make a soft landing was a huge technological success in itself. To then get blown over by a dust storm is the ultimate example of “bad luck” in my book! Fortunately, both the Mars 2 and 3 orbiters completed their missions, relaying huge amounts of data back to Earth.

This isn’t the only example where “bad luck” and “Mars mission” could fall into the same sentence. In 1993, NASA’s Mars Observer was only three days away from orbital insertion around Mars when it stopped transmitting. After a very long 337-day trip from Earth, it is thought that when the fuel tanks were pressurized in preparation for the approach, the orbiter’s propulsion system started to leak monomethyl hydrazine and helium gas. The leakage caused the craft to spin out of control, switching its electronics into “safe” mode. There was to be no further communication from Mars Observer.

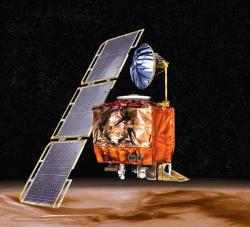

Human error also has a part to play in many of the problems with getting robots to the Red Planet. Probably the most glaring, and most hyped, error was made during the development of NASA’s Mars Climate Orbiter. In 1999, just before orbital insertion, a navigation error sent the satellite into an orbit 100 km lower than its intended 150 km altitude above the planet. This error was caused by one of the most expensive measurement incompatibilities in space exploration history. One of NASA’s subcontractors, Lockheed Martin, used Imperial units instead of the NASA-specified metric units. This incompatibility in the design units culminated in a huge miscalculation in orbital altitude. The poor orbiter plummeted through the Martian atmosphere and burned up.
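To get a feel for how large such a mix-up can be, here is a minimal Python sketch. It assumes the mismatched quantity was thruster impulse, reported in pound-force seconds but read as newton-seconds, which is how the error is widely described. The function name and the sample value of 10 are purely illustrative and are not taken from any mission data.

```python
# Conversion factor: one pound-force second expressed in newton-seconds.
LBF_S_TO_N_S = 4.4482216152605


def impulse_to_si(impulse_lbf_s: float) -> float:
    """Convert an impulse from pound-force seconds to newton-seconds."""
    return impulse_lbf_s * LBF_S_TO_N_S


# Hypothetical thruster impulse reported in Imperial units (illustrative value).
reported = 10.0  # lbf*s

misread = reported                 # wrongly treated as if it were already N*s
correct = impulse_to_si(reported)  # what the number really means in N*s

underestimate_factor = correct / misread
print(f"misread as {misread:.2f} N*s, actually {correct:.2f} N*s")
print(f"effect underestimated by a factor of {underestimate_factor:.2f}")
```

Under these assumptions, every small thruster firing would be underestimated by a factor of roughly 4.45, and errors like that add up over a months-long cruise.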

Human error is not restricted to NASA missions. The earlier Soviet Phobos 1 mission in 1988 was lost through a software error. A neglected subroutine, one that should never have been used during spaceflight, was accidentally activated. The subroutine was known about before the launch of Phobos 1, but engineers decided to leave it in place, as removing it would have required the whole computer to be upgraded. Due to the tight schedule, the spacecraft was launched as it was. Although the software had been deemed “safe”, the subroutine was activated and the probe was sent into a spin. With no lock on the Sun to power its solar panels, the satellite was lost.

To date, 26 of the 43 missions to Mars (that’s a whopping 60%) have either failed or only been partially successful in the years since the first Marsnik 1 attempt by the Soviet Union in 1960. In total, the USA/NASA has flown 20 missions, of which six were lost (70% success rate). The Soviet Union/Russian Federation flew 18, of which only two orbiters (Mars 2 and 3) were a success (11% success rate). The two ESA missions, Mars Express and Rosetta (fly-by), were both a complete success. The single Japanese mission, Nozomi, launched in 1998, suffered complications en route and never reached Mars, and the British lander, Beagle 2, famously went AWOL in 2003.
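The percentages quoted above follow directly from the mission counts. As a quick check, here is a minimal Python sketch that uses only the figures given in this article; the function name and the data layout are illustrative choices.

```python
def success_rate(flown: int, lost: int) -> float:
    """Return the percentage of missions that were not lost."""
    return 100.0 * (flown - lost) / flown


# (missions flown, missions lost), as quoted in the article.
agencies = {
    "USA/NASA": (20, 6),
    "Soviet Union/Russian Federation": (18, 16),
}

for name, (flown, lost) in agencies.items():
    print(f"{name}: {success_rate(flown, lost):.0f}% success rate")

# Overall share of missions that failed or were only partially successful.
overall_troubled = 100.0 * 26 / 43
print(f"Failed or partially successful: {overall_troubled:.0f}%")
```

Rounded to whole numbers, this gives the 70%, 11% and 60% figures quoted above.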

Despite the long list of failures, the vast majority of lost missions to Mars occurred during the early “pioneering” years of space exploration. Each mission failure was taken on board and used to improve the next, and now we are entering an era where mission success is becoming the “norm”. NASA currently has two operational satellites around Mars, Mars Odyssey and the Mars Reconnaissance Orbiter. The European Mars Express is also in orbit.

The Mars Exploration Rovers Spirit and Opportunity continue to explore the Martian landscape as their missions keep getting extended.

Recent mission losses, such as the British Beagle 2, are inevitable when we consider how complex and challenging it is to send robotic explorers into the unknown. There will always be a degree of human error, technology failure and a decent helping of bad fortune, but we seem to be learning from our mistakes and moving forward. There definitely seems to be an improving trend toward mission success over mission failure.

Perhaps, with technological advancement and a little bit of luck, we are overcoming the Mars Curse and keeping the Galactic Ghoul at bay as we gradually gain a strong foothold on a planet we hope to colonize in the not-so-distant future…