NASA and JPL are working hard to give their Mars rovers more autonomy. Both rovers currently operating on Mars, MSL Curiosity and Perseverance, are partly autonomous, with Perseverance being somewhat more advanced. In fact, developing more autonomous navigation was an explicit part of Perseverance's mission.

Both rovers use a system called AutoNav, but Perseverance's version is more capable and more mature. During its first year on Mars, Perseverance travelled a total of 17.7 km, and AutoNav was used to evaluate about 88% of that route.

One of the main obstacles to even greater autonomous navigation is position-finding. The longer Perseverance drives autonomously, the larger the error becomes in its understanding of where it actually is on the Martian surface. And if it doesn't know where it is, it can't accurately plan and navigate a route. It is, in effect, lost.

"Not until we are lost do we begin to understand ourselves." — Henry David Thoreau

Three systems combine to create Perseverance's autonomous operations: AutoNav, AEGIS, and OBP. AutoNav uses images and maps to plan routes. AEGIS, the Autonomous Exploration for Gathering Increased Science, uses onboard wide-angle imagery to select observation targets for the rover's SuperCam instrument. OBP, the OnBoard Planner, schedules planned operations to reduce the rover's energy consumption.

All of these systems, and the greater operational autonomy they give the rover, are aimed at maximizing its science return.

Perseverance's autonomy took another step forward with the development of Mars Global Localization (MGL). There's no GPS on Mars (at least, not yet), so pinpointing the rover's position on the surface has been a roadblock to greater autonomy and better science results. The system is described in a conference paper titled "Censible: A Robust and Practical Global Localization Framework for Planetary Surface Missions."

“This is kind of like giving the rover GPS. Now it can determine its own location on Mars,” said JPL’s Vandi Verma, chief engineer of robotics operations for the mission. “It means the rover will be able to drive for much longer distances autonomously, so we’ll explore more of the planet and get more science. And it could be used by almost any other rover traveling fast and far.”

Perseverance's longest autonomous drive is 699.9 meters over three days, and position uncertainty is what keeps it from going farther: the farther the rover drives autonomously, the larger that uncertainty becomes.
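Why drive distance is capped can be illustrated with a deliberately simple toy model. It is not JPL's navigation software: it assumes position uncertainty grows linearly with distance driven, and the drift rate, safety margin, and post-fix uncertainty below are hypothetical numbers chosen only for illustration. The hypothetical `fix_every_m` parameter stands in for an onboard global localization that resets the uncertainty.

```python
from typing import Optional

# Toy dead-reckoning model. Every constant here is a hypothetical illustration,
# not a real Perseverance parameter. Integer centimeters avoid float round-off.
DRIFT_CM_PER_M = 2        # assumed: uncertainty grows 2 cm per meter driven (2%)
SAFETY_MARGIN_CM = 500    # assumed: rover must stop once uncertainty exceeds 5 m
FIX_SIGMA_CM = 25         # assumed: uncertainty just after a global fix (~10 in)


def meters_until_lost(total_m: int, fix_every_m: Optional[int] = None) -> int:
    """Drive up to total_m in 1 m steps; return meters covered before stopping.

    Uncertainty grows linearly since the last fix. If fix_every_m is given, a
    hypothetical onboard global localization resets it to FIX_SIGMA_CM.
    """
    sigma_at_fix = 0   # start the drive perfectly localized
    since_fix = 0
    for covered in range(1, total_m + 1):
        since_fix += 1
        sigma = sigma_at_fix + DRIFT_CM_PER_M * since_fix
        if sigma > SAFETY_MARGIN_CM:
            return covered - 1   # "lost": stop and wait for humans on Earth
        if fix_every_m is not None and since_fix >= fix_every_m:
            sigma_at_fix, since_fix = FIX_SIGMA_CM, 0   # relocalized onboard
    return total_m
```

With these made-up numbers, `meters_until_lost(1000)` stops at 250 m on dead reckoning alone, while `meters_until_lost(1000, fix_every_m=200)` completes the full 1,000 m, because each onboard fix resets the uncertainty before it reaches the margin.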

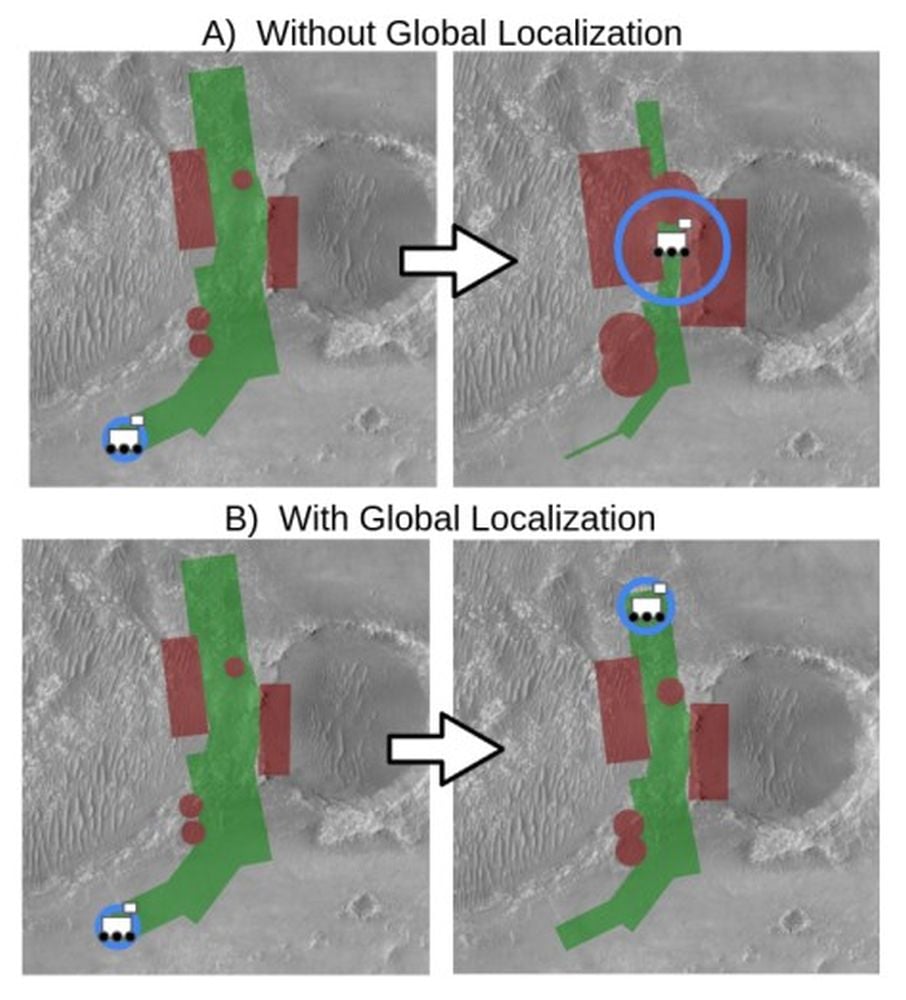

*This figure shows what happened when Perseverance attempted a long drive through a narrow corridor on Sol 385. The blue circles show how the rover's position uncertainty grows over time without MGL, and the red polygons and circles mark known hazards near its route. As the uncertainty grows, so does the area the rover must treat as potentially hazardous, until the rover is effectively lost and can't continue without human intervention. The bottom panels show MGL's effect: the error stays smaller, the hazard footprint grows far less, and the rover can travel farther without help. Image Credit: Nash et al. 2026. ICRA*

In the current navigation system, Perseverance drives until its accumulated position error becomes too large for it to travel safely. It then takes a 360-degree panoramic image, and a human operator has to intervene by manually matching the rover's position to a global map.

“Humans have to tell it, ‘You’re not lost, you’re safe. Keep going,’” Verma said. “We knew if we addressed this problem, the rover could travel much farther every day.”

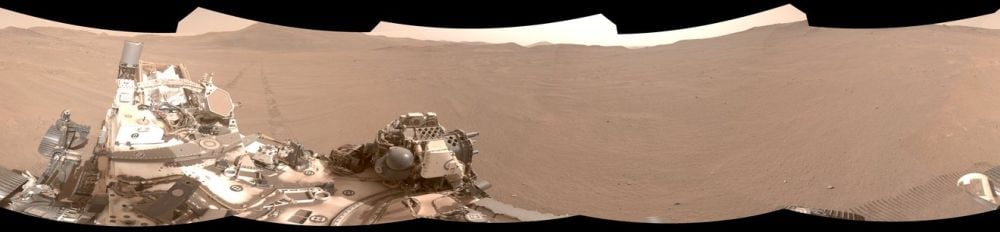

The new method removes that bottleneck. It still relies on a 360-degree panorama of the rover's current location, but it requires no human intervention. The panorama is a monochrome red image, which most closely matches the HiRISE images from the Mars Reconnaissance Orbiter.

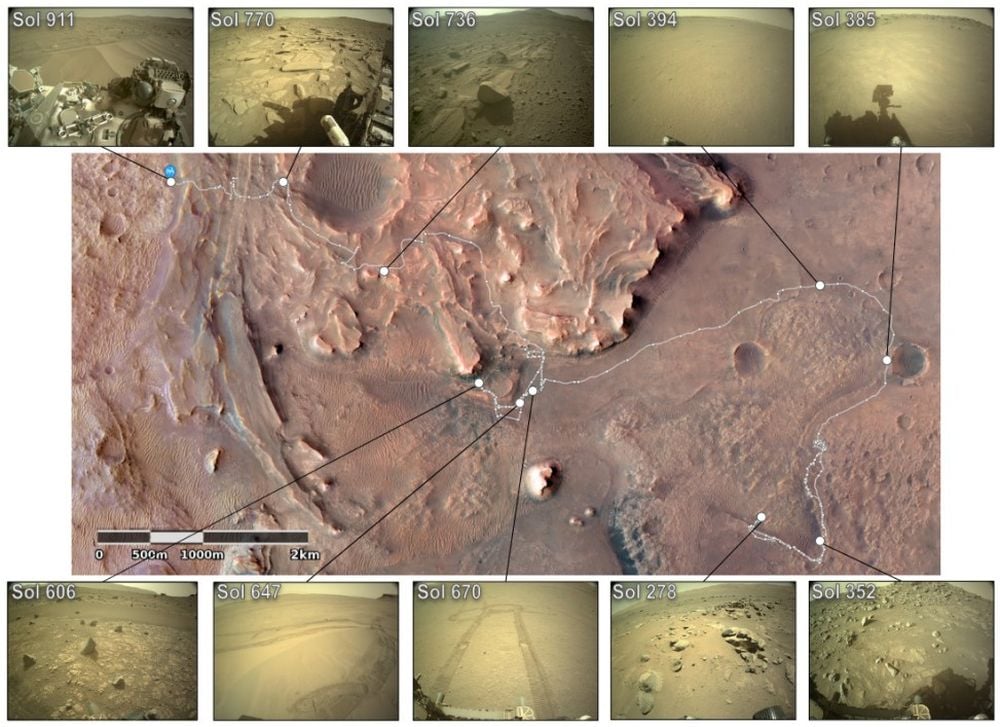

*Each white dot in this image represents one of the 264 panoramic images Perseverance captured in Jezero Crater. Together they span the rover's mission up to Sol 911 and cover multiple terrain types. Image Credit: Nash et al. 2026. ICRA*

This new capability exists partly thanks to Perseverance's sidekick, the Ingenuity helicopter. Ingenuity was a remarkable success: it completed 72 flights over three years before suffering rotor damage and ending its mission in January 2024. With its demise, a powerful microprocessor onboard Perseverance that had been dedicated to communicating with Ingenuity became available. Running MGL on that processor, Perseverance can now pinpoint its surface location to within 10 inches (about 25 cm), and it needs only about two minutes to do so.

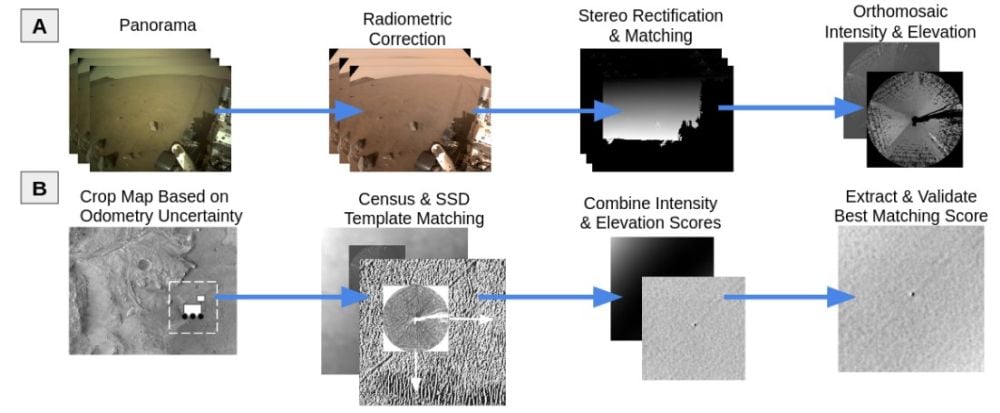

Using that tiny electronic brain, the system runs an algorithm that compares onboard terrain maps created by orbiters with panoramic images from Perseverance's cameras. This lets the rover find its location without human intervention. Perseverance used the system successfully during normal operations on two days: February 2 and February 16. MGL has the potential to greatly increase the distance Perseverance can cover without human intervention, and since the rover can also make scientific observations with some autonomy, that could pay big scientific dividends.

*These panels give a high-level view of the global localization framework. In the top row, rover images are transformed to match the orbital map. In the bottom row, the algorithm matches the rover images to the orbital map. Image Credit: Nash et al. 2026. ICRA*
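The core idea of matching a rover's view against an orbital map can be sketched with classic brute-force template matching using normalized cross-correlation. This is a swapped-in textbook technique, not the Censible algorithm from the paper, and it assumes the rover's panorama has already been projected into a top-down grid at the same scale and orientation as the orbital map. The grids, the hypothetical function names `ncc` and `locate`, and the translation-only search are illustrative assumptions.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # assumed: top-down brightness grid, same scale for both maps


def ncc(a: List[float], b: List[float]) -> float:
    """Normalized cross-correlation of two equal-length samples, in [-1, 1]."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0  # flat patches carry no information


def locate(orbital: Grid, local: Grid) -> Tuple[int, int, float]:
    """Slide the rover-derived local map over the orbital map (translation only).

    Returns the (row, col) offset with the highest correlation and its score.
    """
    h, w = len(local), len(local[0])
    patch = [v for row in local for v in row]
    best = (0, 0, -2.0)  # below any possible NCC score
    for r in range(len(orbital) - h + 1):
        for c in range(len(orbital[0]) - w + 1):
            window = [orbital[r + i][c + j] for i in range(h) for j in range(w)]
            score = ncc(patch, window)
            if score > best[2]:
                best = (r, c, score)
    return best
```

Cutting a 6 by 6 patch out of a seeded random 20 by 20 test grid at row 5, column 7 and passing it to `locate` recovers that offset with a score of essentially 1.0; multiplying the offset by the orbital map's pixel size would turn it into a position. Real rover and orbital imagery differ in lighting, resolution, and viewpoint, which is why the framework in the paper first transforms the rover images to resemble the orbital map.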

"It enables the rover to be commanded to drive for potentially unlimited drive distances without requiring localization from Earth," the authors write in the conference paper. According to the authors, the system could also be applied to other robotic missions: "Beyond Perseverance, absolute position estimation is key for future planetary robotic missions."

This animation shows how the system works. It lets Perseverance pinpoint its location using an onboard algorithm that matches terrain features in navigation camera shots (the circular image, called an orthomosaic) to those in orbital imagery (the background). Credit: NASA/JPL-Caltech.

“We’ve given the rover a new ability,” said Jeremy Nash, a JPL robotics engineer who led the team working on the project under Verma. “This has been an open problem in robotics research for decades, and it’s been super exciting to deploy this solution in space for the first time.”

Universe Today