
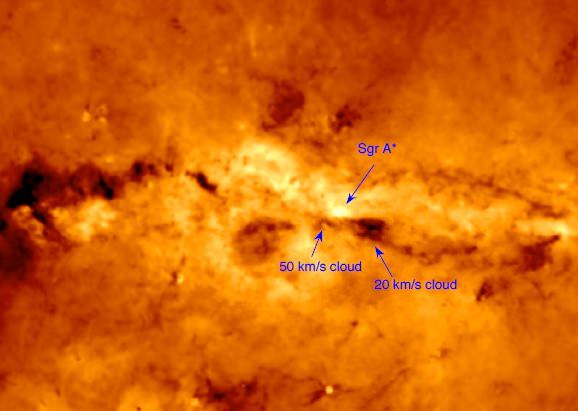

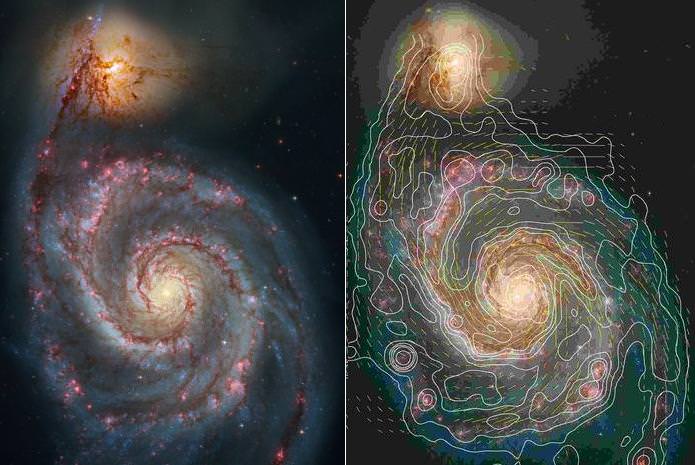

Molecular clouds are so called because they are dense enough to support the formation of molecules, most commonly molecular hydrogen (H2). That density also makes them ideal sites for new star formation – and if star formation is prevalent in a molecular cloud, we tend to give it the less formal title of stellar nursery.

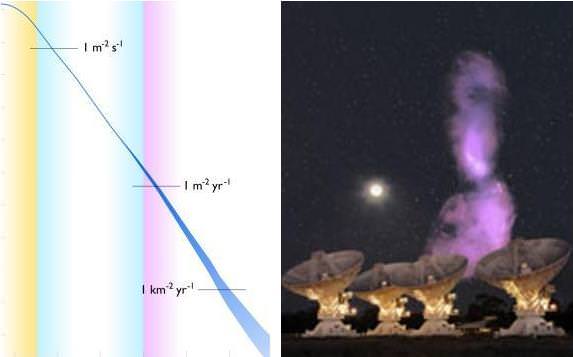

Traditionally, star formation has been difficult to study as it takes place within thick clouds of dust. However, observation of far-infrared and sub-millimetre radiation coming out of molecular clouds allows data to be collected about prestellar objects, even if they can’t be directly visualized. Such data are drawn from spectroscopic analysis – where spectral lines of carbon monoxide are particularly useful in determining the temperature, density and dynamics of prestellar objects.

Far-infrared and sub-millimetre radiation is readily absorbed by water vapor in Earth’s atmosphere, making astronomy at these wavelengths difficult to achieve from sea level – but relatively easy from low-humidity, high-altitude locations such as the Mauna Kea Observatories in Hawaii.

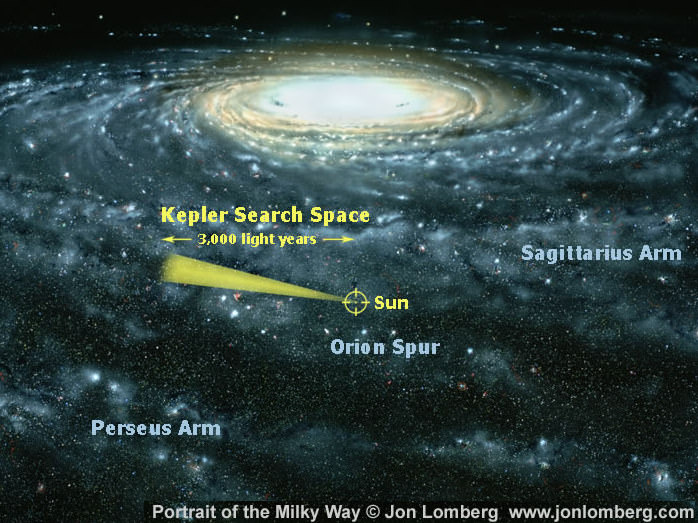

Simpson et al. undertook a sub-millimetre study of the molecular cloud L1688 in Ophiuchus, looking in particular for protostellar cores with blue asymmetric double (BAD) peaks – which signal that a core is undergoing the first stages of gravitational collapse to form a protostar. A BAD peak is identified through Doppler-based estimates of gas velocity gradients across an object. All this clever stuff is done with the James Clerk Maxwell Telescope on Mauna Kea, using ACSIS and HARP – the Auto-Correlation Spectral Imaging System and the Heterodyne Array Receiver Programme.
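To make the Doppler step a little more concrete, here is a minimal Python sketch of how an observed line frequency can be turned into a line-of-sight velocity, plus a toy check for a blue-asymmetric double peak. It is only an illustration, not the pipeline Simpson et al. used: the choice of the CO J=3-2 line, the function names and the peak values are all assumptions made for the example.

```python
# Speed of light in km/s (exact by definition).
C_KM_S = 299_792.458

# Assumed line for illustration: CO J=3-2, rest frequency in GHz.
CO_3_2_REST_GHZ = 345.7959899


def radio_velocity_km_s(observed_ghz, rest_ghz=CO_3_2_REST_GHZ):
    """Line-of-sight velocity, radio convention: v = c * (f_rest - f_obs) / f_rest.

    Positive means receding (redshifted); negative means approaching (blueshifted).
    """
    return C_KM_S * (rest_ghz - observed_ghz) / rest_ghz


def is_blue_asymmetric(peaks, systemic_km_s):
    """Toy check on a double-peaked line profile (hypothetical helper).

    `peaks` is a list of two (velocity_km_s, intensity) tuples. Returns True when
    the peaks straddle the systemic velocity and the blueshifted one is brighter.
    """
    (v_blue, i_blue), (v_red, i_red) = sorted(peaks)  # lower velocity first
    straddles = v_blue < systemic_km_s < v_red
    return straddles and i_blue > i_red


if __name__ == "__main__":
    # Hypothetical peak frequencies (GHz) and intensities (arbitrary units).
    blue_peak = (radio_velocity_km_s(CO_3_2_REST_GHZ + 0.0004), 3.0)
    red_peak = (radio_velocity_km_s(CO_3_2_REST_GHZ - 0.0004), 2.0)
    print("blue peak velocity (km/s):", blue_peak[0])
    print("red peak velocity (km/s):", red_peak[0])
    print("blue asymmetric:", is_blue_asymmetric([blue_peak, red_peak], 0.0))
```

At this frequency a shift of 1 MHz corresponds to a bit less than 1 km/s, which gives a feel for the spectral resolution needed to separate the two peaks of an infalling core.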

The physics of star formation is not completely understood. But, presumably through a combination of electrostatic forces and turbulence within a molecular cloud, molecules begin to aggregate into clumps, which perhaps merge with adjacent clumps until there is a collection of material substantial enough to generate self-gravity.

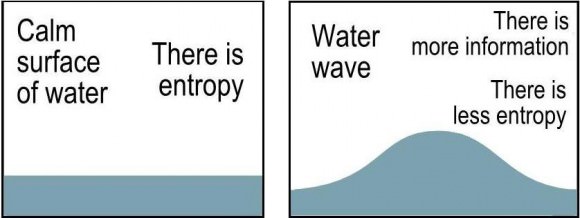

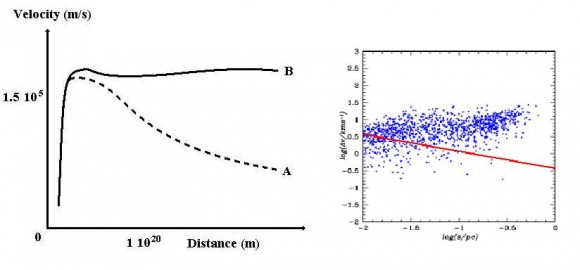

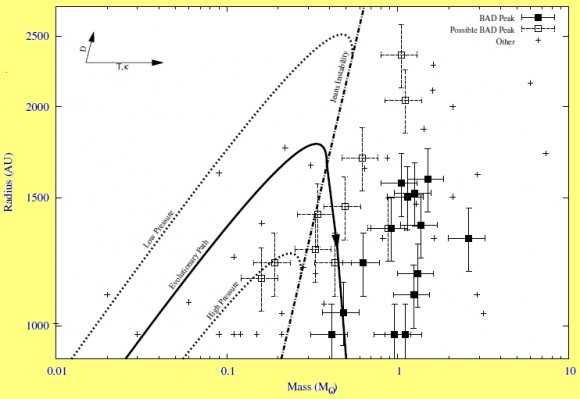

From this point, a hydrostatic equilibrium is established between gravity and the gas pressure of the prestellar object – although as more matter is accreted, self-gravity increases. Objects can be sustained within the Bonnor-Ebert mass range – where more massive objects in this range are smaller and denser (High Pressure in the diagram). But as mass continues to climb, the Jeans Instability Limit is reached where gas pressure can no longer withstand gravitational collapse and matter ‘infalls’ to create a dense, hot protostellar core.
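For a rough sense of the masses involved, the sketch below evaluates the textbook critical Bonnor-Ebert mass for an isothermal sphere confined by an external pressure, M ≈ 1.18 c_s⁴ / (G^(3/2) P^(1/2)). This is a back-of-envelope illustration rather than anything taken from Simpson et al.: the 10 K temperature, the external pressure of P/k = 10⁵ K cm⁻³ and the mean molecular weight of 2.33 are assumed values, and the function names are made up for the example.

```python
import math

G = 6.67430e-11      # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23   # Boltzmann constant, J/K
M_H = 1.6735575e-27  # mass of a hydrogen atom, kg
M_SUN = 1.98847e30   # solar mass, kg


def sound_speed(temperature_k, mean_molecular_weight=2.33):
    """Isothermal sound speed in m/s.

    2.33 is an assumed mean molecular weight for molecular gas with helium.
    """
    return math.sqrt(K_B * temperature_k / (mean_molecular_weight * M_H))


def bonnor_ebert_mass(temperature_k, pressure_over_k_cm3, mean_molecular_weight=2.33):
    """Critical Bonnor-Ebert mass in solar masses.

    Uses M = 1.18 * c_s**4 / (G**1.5 * sqrt(P_ext)), with the external
    pressure supplied as P/k in units of K cm^-3.
    """
    p_ext = pressure_over_k_cm3 * 1e6 * K_B  # K cm^-3 -> pascals
    c_s = sound_speed(temperature_k, mean_molecular_weight)
    return 1.18 * c_s**4 / (G**1.5 * math.sqrt(p_ext)) / M_SUN


if __name__ == "__main__":
    # Assumed illustrative conditions: a 10 K core under P/k = 1e5 K cm^-3.
    print("critical mass (solar masses):", bonnor_ebert_mass(10.0, 1e5))
    # Quadrupling the confining pressure halves the mass that can be supported.
    print("at 4x the pressure:", bonnor_ebert_mass(10.0, 4e5))
```

Under these assumptions the critical mass comes out at around one solar mass, and it drops as the confining pressure rises. A core that accretes beyond this limit can no longer be held up by gas pressure, which is the point at which collapse sets in.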

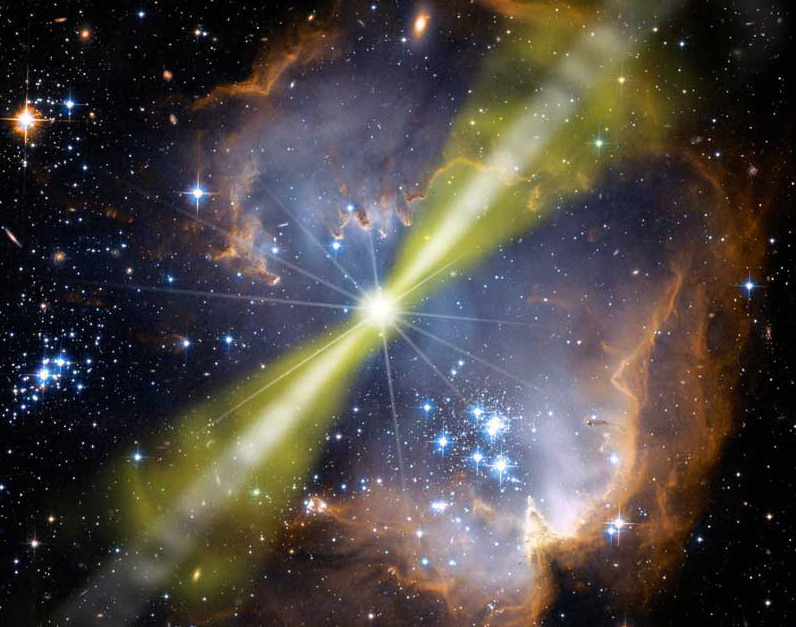

When the core’s temperature reaches about 2,000 kelvin, H2 and other molecules dissociate to form a hot plasma. The core is not yet hot enough to drive fusion but it does radiate its heat – establishing a new hydrostatic equilibrium between outward thermal radiation and inward gravitational pull. At this point the object is officially a protostar.

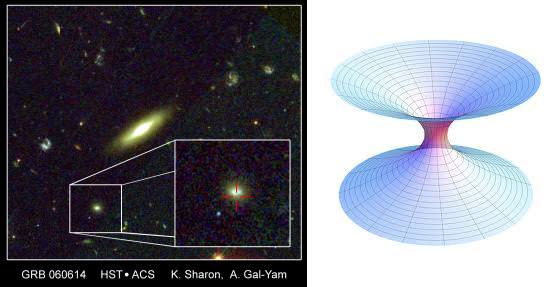

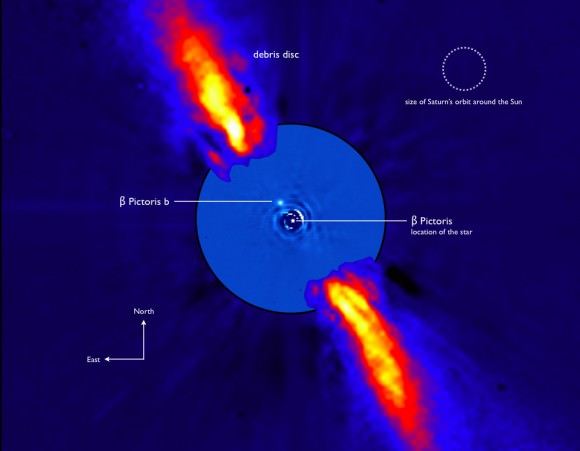

Being now a substantial center of mass, the protostar is likely to draw a circumstellar accretion disk around it. As it accretes more material and the core’s density increases further, deuterium fusion commences first – followed by hydrogen fusion, at which point a main sequence star is born.

Further reading: Simpson et al., The initial conditions of isolated star formation – X. A suggested evolutionary diagram for prestellar cores.