[/caption]

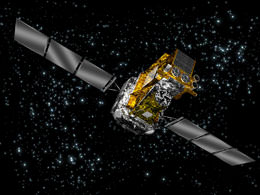

Ten years ago, on July 23, 1999, NASA’s Chandra X-ray Observatory was deployed into orbit by the space shuttle Columbia. Far exceeding its intended 5-year life span, Chandra has demonstrated an unrivaled ability to create high-resolution X-ray images, and has enabled astronomers to investigate phenomena as diverse as comets, black holes, dark matter and dark energy.

“Chandra’s discoveries are truly astonishing and have made dramatic changes to our understanding of the universe and its constituents,” said Martin Weisskopf, Chandra project scientist at NASA’s Marshall Space Flight Center in Huntsville, Ala.

The science generated by Chandra, both on its own and in conjunction with other telescopes in space and on the ground, has had a widespread, transformative impact on 21st-century astrophysics. Chandra has provided the strongest evidence yet that dark matter must exist. It has independently confirmed the existence of dark energy and made spectacular images of titanic explosions produced by matter swirling toward supermassive black holes.

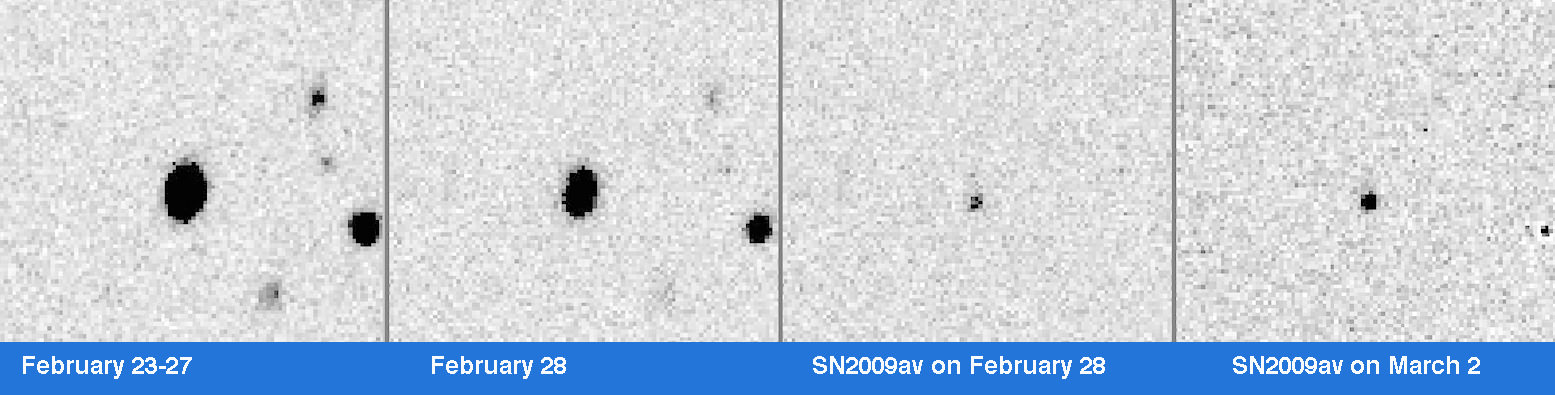

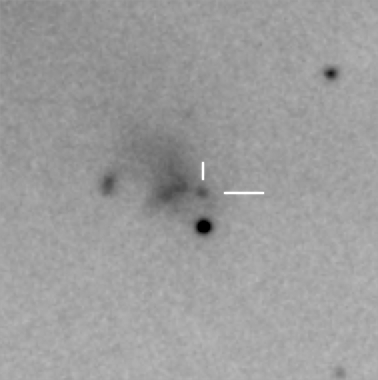

To commemorate the 10th anniversary of Chandra, three new versions of classic Chandra images will be released during the next three months. These images, the first of which was released today, provide new data and a more complete view of objects that Chandra observed in earlier stages of its mission. The image being released today is of the spectacular supernova remnant E0102-72.

“The Great Observatories program — of which Chandra is a major part — shows how astronomers need as many tools as possible to tackle the big questions out there,” said Ed Weiler, associate administrator of NASA’s Science Mission Directorate at NASA Headquarters in Washington. NASA’s other “Great Observatories” are the Hubble Space Telescope, Compton Gamma-Ray Observatory and Spitzer Space Telescope.

The next image will be released in August to mark the anniversary of the day Chandra opened up for the first time and gathered light on its detectors. The third image will be released during the “Chandra’s First Decade of Discovery” symposium in Boston, which begins Sept. 22.

“I am extremely proud of the tremendous team of people who worked so hard to make Chandra a success,” said Harvey Tananbaum, director of the Chandra X-ray Center at the Smithsonian Astrophysical Observatory in Cambridge, Mass. “It has taken partners at NASA, industry and academia to make Chandra the crown jewel of high-energy astrophysics.”

Tananbaum and Nobel Prize winner Riccardo Giacconi originally proposed Chandra to NASA in 1976. Unlike the Hubble Space Telescope, Chandra is in a highly elliptical orbit that takes it almost one-third of the way to the moon, and it was not designed to be serviced after deployment.

The Chandra X-ray Observatory was named after the great Indian-born American astrophysicist Subrahmanyan Chandrasekhar, who served on the faculty at the University of Chicago for almost 60 years and won the 1983 Nobel Prize in Physics for his work explaining the structure and evolution of stars.