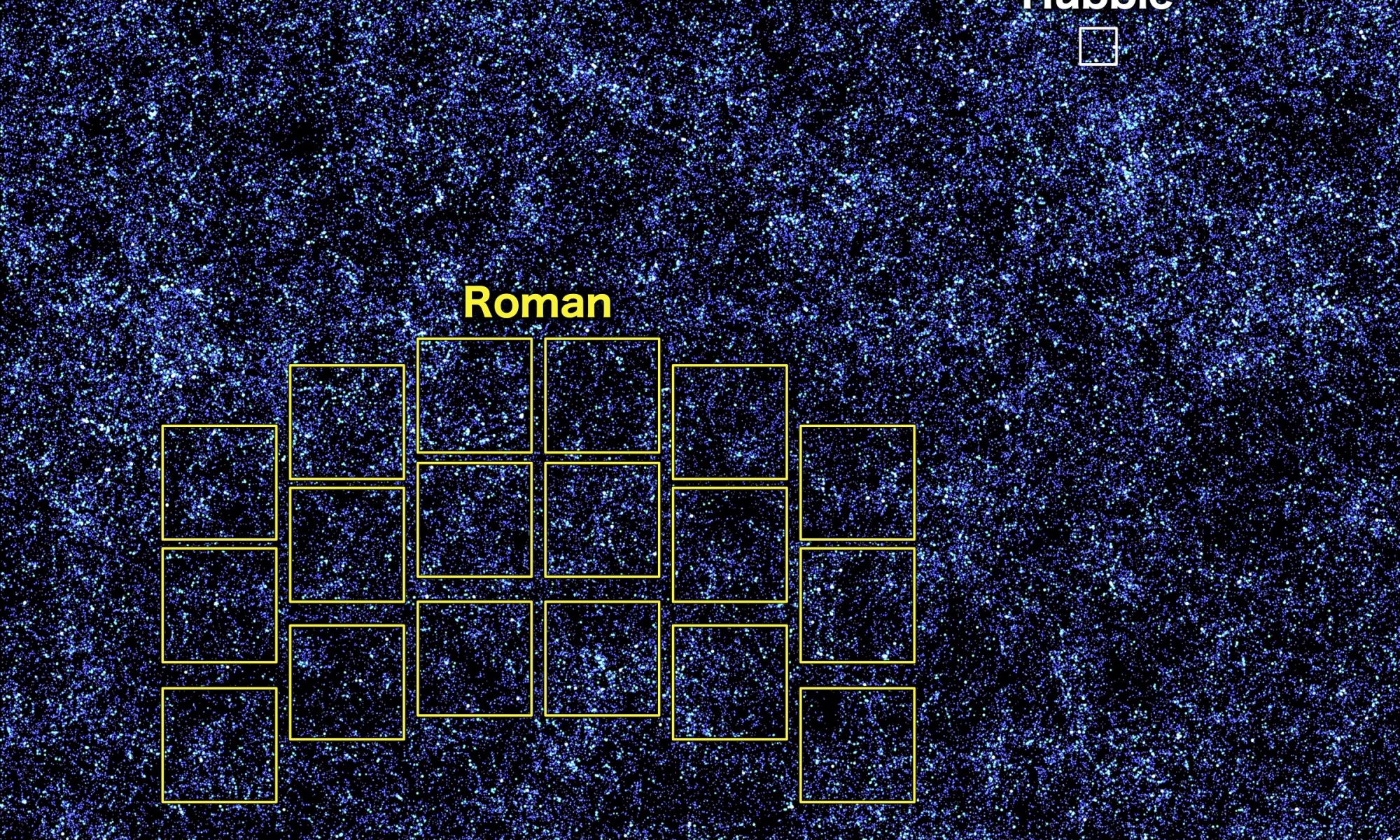

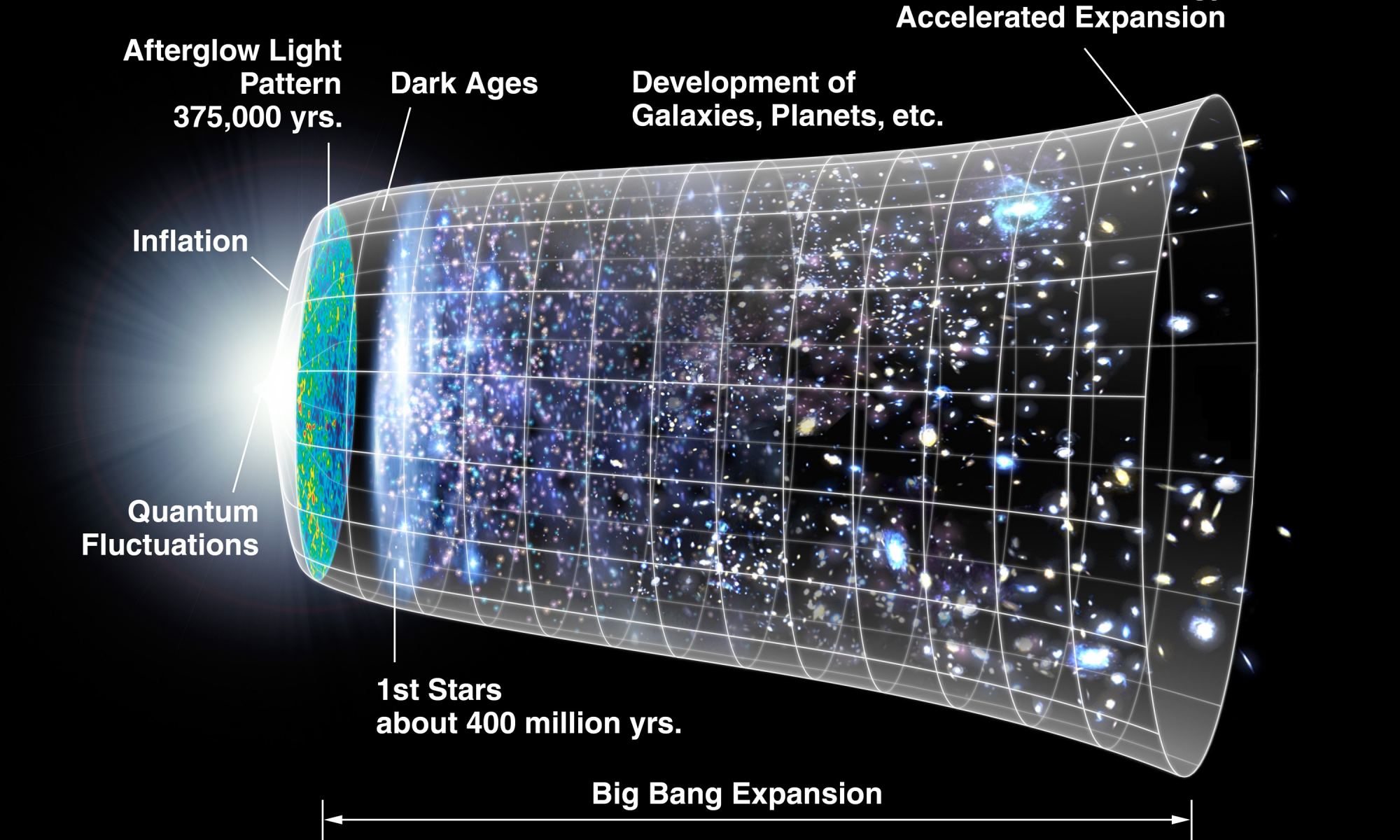

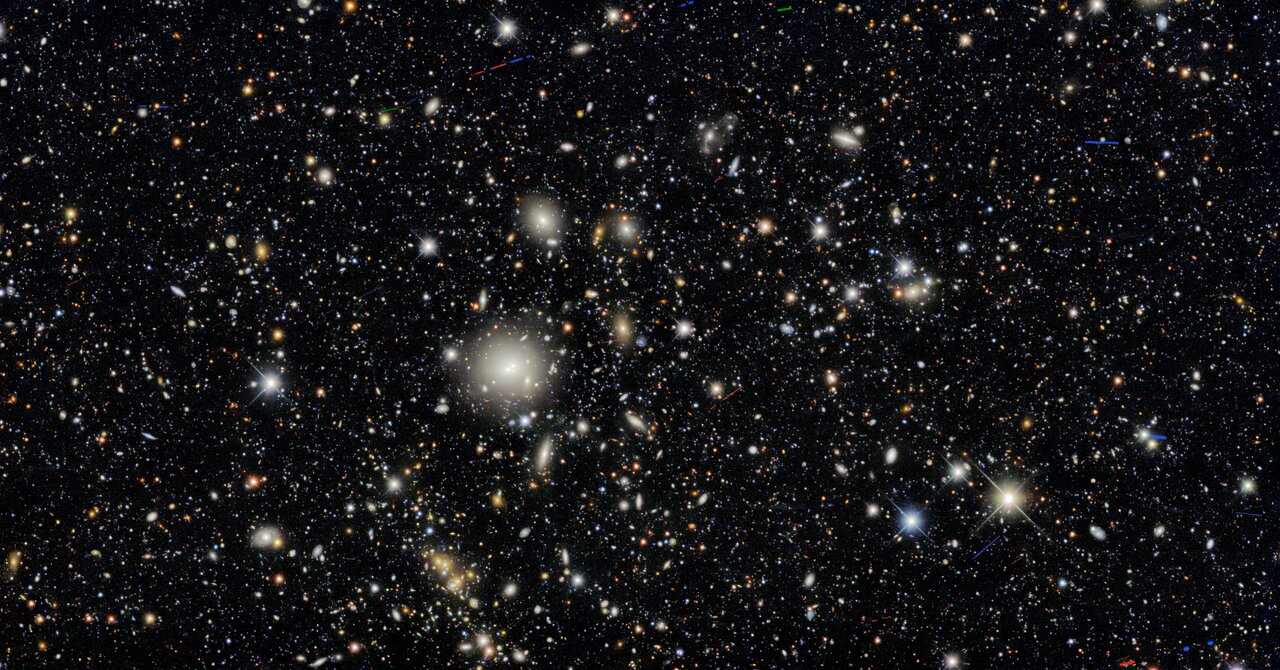

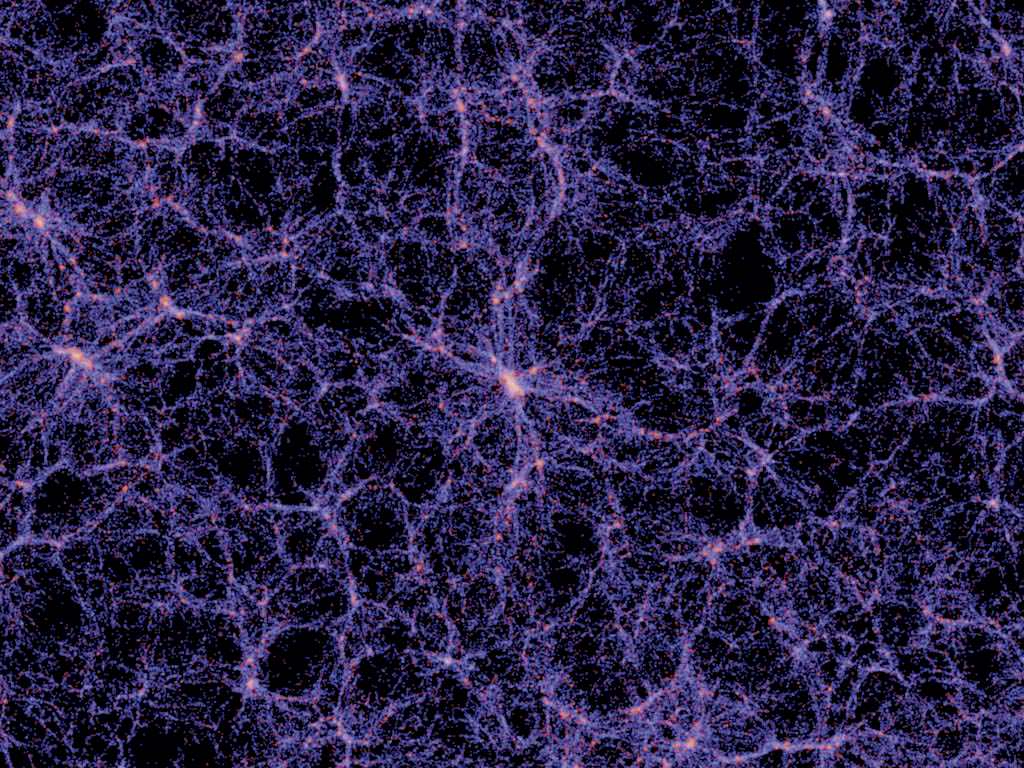

When the James Webb Space Telescope started collecting data, it gave us an unprecedented view of the distant cosmos. Faint, redshifted galaxies that Hubble saw as mere smudges of light were revealed as objects with structure and form. And astronomers were faced with a bit of a problem. Those earliest galaxies seemed too developed and too large to have formed within the accepted timeline of the universe. This triggered a flurry of articles boldly claiming that JWST had disproven the big bang. Now a new paper in the Monthly Notices of the Royal Astronomical Society argues that the problem isn’t that the galaxies are too developed, but rather that the universe is twice as old as we thought: a whopping 26.7 billion years old, to be exact. It’s a bold claim, but does the data really support it?

Continue reading “The Universe Could Be Twice As Old if Light is Tired and Physical Constants Change”