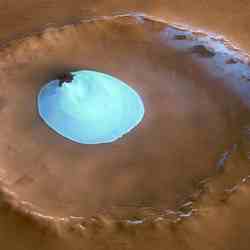

Mars. Image credit: NASA

Are microbes making the methane that’s been found on Mars, or does the hydrocarbon gas come from geological processes? It’s the question that everybody wants to answer, but nobody can. What will it take to convince the jury?

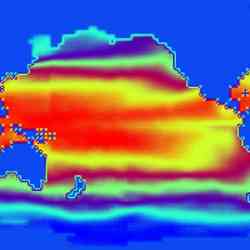

Many experts told Astrobiology Magazine that the best way to judge whether methane has a biological origin is to look at the ratio of carbon-12 (C-12) to carbon-13 (C-13) in the molecules. Living organisms preferentially take up the lighter C-12 isotope as they assemble methane, and that chemical signature remains until the molecule is destroyed.
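Isotope chemists usually report such a ratio in "delta" notation: the per-mil (parts per thousand) deviation of a sample's C-13/C-12 ratio from a reference standard. The Python sketch below is illustrative only. It assumes the commonly quoted C-13/C-12 ratio of the VPDB (Vienna Pee Dee Belemnite) standard, and the names `R_VPDB` and `delta13c` are hypothetical choices for this example.

```python
# Illustrative sketch: express a measured 13C/12C ratio in delta notation.
# Assumption: R_VPDB is the commonly quoted 13C/12C ratio of the VPDB standard.
R_VPDB = 0.0112372


def delta13c(r_sample: float, r_standard: float = R_VPDB) -> float:
    """Return delta-13C in per mil relative to the given standard (hypothetical helper)."""
    return (r_sample / r_standard - 1.0) * 1000.0


# A sample whose 13C/12C ratio is 5 percent below the standard
# comes out at roughly -50 per mil.
print(delta13c(R_VPDB * 0.95))
```

Because organisms favor C-12, carbon they process ends up with a lower C-13/C-12 ratio and therefore a more negative delta value. As the article goes on to explain, that shift alone does not settle whether a given sample is biological.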

“There may be a way of distinguishing the origin of methane, whether biogenic or not, by using stable isotope measurements,” says Barbara Sherwood Lollar, an isotope chemist at the University of Toronto.

But isotope signals are subtle, and they are best measured by accurate spectrometers placed on the martian surface rather than aboard an orbiting spacecraft.

And there are complications. For one thing, an average martian methane level of 10 parts per billion (ppb) may be too faint for accurate isotope measurement, even for a spectrometer placed on Mars. Also, the C-12 to C-13 ratio of methane alone is not always proof of life. For example, methane from the "Lost City" hydrothermal vent field in the Atlantic Ocean did not show a clear isotope signature, says James Kasting, professor of earth and mineral science at Penn State University.

“The methane is not that strongly fractionated, but they still think it might be biological,” says Kasting. “At Lost City, you can’t figure out if it’s biological or not by the isotopes. How are we going to figure that out on Mars?”

By expanding the search, responds Sherwood Lollar. Instead of measuring only carbon, she suggests also measuring hydrogen isotopes, because biological systems also prefer ordinary hydrogen (H) to its heavier isotope, deuterium (2H).

A second approach would look at the longer, heavier hydrocarbons — ethane, propane and butane — that are related to methane, and that sometimes appear with biogenic or abiogenic methane. Sherwood Lollar detected these hydrocarbons while investigating abiogenic methane trapped in pores in ancient rocks in the Canadian Shield, a large deposit of Precambrian igneous rock. “When the water gets trapped over very, very long time periods,” she says, an abiogenic reaction between water and rock makes methane, ethane, propane and butane.

If longer-chain hydrocarbons are ever detected in the martian atmosphere, how could we tell abiogenic ones from similar hydrocarbons that are the breakdown products of kerogen, a remnant of decomposing living matter? The answer, Sherwood Lollar repeats, could be found in the isotopes. Abiogenic hydrocarbon chains would contain a higher proportion of heavier isotopes than hydrocarbon chains derived from the breakdown of kerogen.

“Future missions to Mars plan to look for the presence of higher hydrocarbons as well as methane,” Sherwood Lollar says. “If this isotopic pattern can be identified in martian methane and ethane for instance, then this type of information could help resolve abiogenic versus biogenic origin.”

Isotopes figure prominently in several upcoming space missions that could slake the growing thirst for evidence on the methane mystery:

* The Phoenix lander, scheduled for launch in August 2007, will go to an ice-rich region near the martian north pole, and "dig up dirt and analyze the dirt, along with the ice," says William Boynton of the University of Arizona, who will direct the mission. The lander's mass spectrometer will measure isotopes in any methane trapped in the soil, if the concentration is sufficient. "We won't be able to measure the isotope ratio [in the atmosphere], because it won't be a high enough concentration," Boynton says.

* Mars Science Laboratory, scheduled for launch sometime between 2009 and 2011, is a 3,000-kilogram, six-wheel rover packed with scientific instruments. The tunable laser spectrometer and mass spectrometer-gas chromatograph may both be able to ferret out isotope ratios of carbon and other elements.

* Beagle 3, a successor to Britain’s lost-in-space Beagle 2, may carry an improved mass spectrometer capable of measuring carbon isotope ratios, but the project has yet to be approved. The craft would not launch until at least 2009.

From these launch dates, it's clear the jury on this whodunit must remain sequestered for years, until hard data on the source of methane on Mars can be aired in the scientific courtroom. At this point, it's fair to say that many expert witnesses take the possibility of a biogenic source rather seriously. For example, Vladimir Krasnopolsky, who led one of the teams that found methane on the planet, says, "Bacteria, I think, are plausible sources of methane on Mars, the most likely source." But he expects the microbes to be found in oases, "because the martian conditions are very hostile to life. I think these bacteria may exist in some locations where conditions are warm and wet."

That observation points to a possible win-win situation for those who want to find life on Mars, says Timothy Kral of the University of Arkansas, who grows methanogens for a living. If, as calculations suggest, asteroids and comets are not likely to be delivering methane to Mars, then either methane-making organisms must be living in the subsurface, or there is a place where it's warm enough for abiogenic generation.

“Even though it is not an indication of life directly, it’s an indication that there is warming,” says Kral. In those conditions, “there is heat, energy for organisms to grow.”

A lot has changed in the past year. Kral, who has spent a dozen years growing methanogens in a simulated martian environment, says, “Prior to last year, when people asked if I thought there was life on Mars, I would giggle. I would not be in this business if I did not think it was possible, but there was no real evidence for any life. Then, all of a sudden, last year, they found methane in the atmosphere, and we suddenly have a piece of real scientific evidence saying that it’s possible” that Mars is the second living planet.

Original Source: NASA Astrobiology