Scientists at Oxford University have developed a method to generate the shortest-ever single-photon pulse by removing the interference of quantum entanglement. So how big are these tiny record-breakers? They are 20 microns long (or 0.00002 metres), with a duration of 65 femtoseconds (65 millionths of a billionth of a second). This experiment smashes the previous record for the shortest single-photon pulse; the Oxford photon is 50 times shorter. While this sounds pretty cool, what is all the fuss about? How can these tiny electromagnetic wave-particles be of any use? In two words: quantum computing. And in an additional three words: quantum satellite communications…
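For the curious, the 20-micron length and the 65-femtosecond figure are two views of the same number: a pulse's length is roughly the distance light covers while the pulse lasts. Here is a minimal sketch of that arithmetic, assuming the pulse travels through a vacuum at the speed of light (the function and variable names are purely illustrative and not taken from the Oxford work):

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def pulse_length_m(duration_s: float) -> float:
    """Spatial length of a light pulse of the given duration.

    Assumption: the pulse propagates in vacuum, so it moves at c.
    """
    return SPEED_OF_LIGHT_M_PER_S * duration_s


duration_s = 65e-15  # 65 femtoseconds, the figure quoted in the article
length_um = pulse_length_m(duration_s) * 1e6  # convert metres to microns

print(f"{length_um:.1f} microns")  # prints: 19.5 microns
```

That comes out at about 19.5 microns, which rounds to the 20 microns quoted above.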

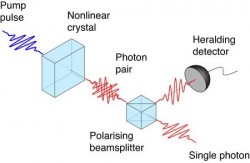

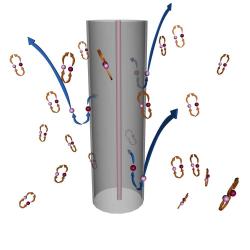

Quantum entanglement is a tough situation to put into words. In a nutshell: if a photon is absorbed by certain types of material, two photons may be re-emitted. These two photons each have a lower energy than the original photon, but they are emitted from the same source and are therefore entangled. This entangled pair is inextricably linked, regardless of the distance separating them. Should the quantum state of one be changed, the other will experience that change. In theory, no matter how far apart these photons are, the quantum change of one will be communicated to the other instantly. Einstein called this quantum phenomenon “spooky action at a distance” and didn’t believe it possible, but experiment has proven otherwise.

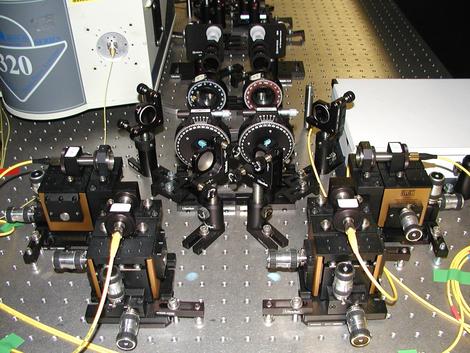

So, in a recent publication, the Oxford group are trying to remove the entangled state of photons. This experiment isn’t about using this “spooky action”; it is about getting rid of it. The aim is to remove the interference caused when one of the photon pair is detected. Once one of the twins is detected, the quantum state of the other is altered, contaminating the signal. If this effect can be removed, very short-duration “pure” photons can be generated, heralding a new phase of quantum computing. If scientists have well-defined, identical single photons at their disposal, highly accurate information can be carried with no interference from the quirky nature of quantum physics.

“Our technique minimises the effects of this entanglement, enabling us to prepare single photons that are extremely consistent and, to our knowledge, have the shortest duration of any photon ever generated. Not only is this a fascinating insight into fundamental physics but the precise timing and consistent attributes of these photons also makes them perfect for building photonic quantum logic gates and conducting experiments requiring large numbers of single photons.” – Peter Mosley, Co-Investigator, Oxford University.

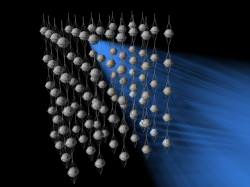

The Oxford University blog reporting this news highlights how useful these regimented photons will be to quantum computing, but quantum communications in space could also be a major beneficiary. Imagine sending pulses of quantum-identical photons through space, to satellites at first and later through interplanetary space. Space scientists would have an extremely powerful resource: data could be sent through the vacuum, encrypted in a small number of photons, indecipherable to everything other than its destination…

Source: University of Oxford