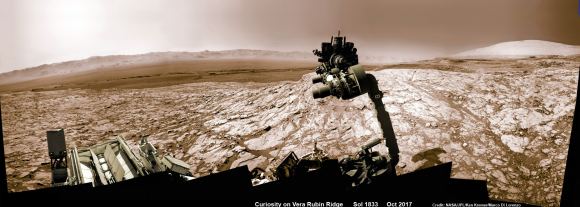

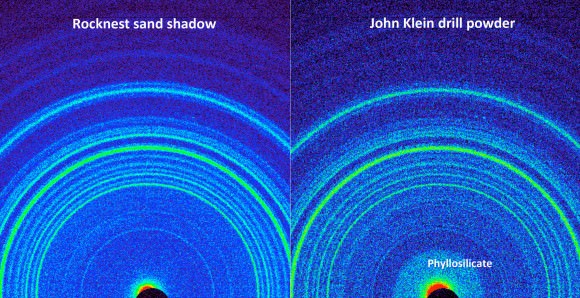

Since it landed on Mars in 2012, the Curiosity rover has used its drill to gather samples from a total of 15 sites. These samples are then delivered to Curiosity's two onboard laboratory instruments, Sample Analysis at Mars (SAM) and the Chemistry and Mineralogy (CheMin) X-ray diffraction instrument. There they are analyzed to tell us more about the Red Planet's history and evolution.

Unfortunately, in December of 2016, a key part of the drill stopped working when a faulty motor prevented the bit from extending and retracting between its two stabilizers. After months of work, the Curiosity team managed to get the bit to extend and developed a new drilling method that does not require the stabilizers. The new method was recently tested, and the initial results indicate that it works.

The new method involves freehand drilling, in which the drill bit remains extended and the entire arm is used to push the drill forward. While this is happening, the rover's force sensor takes measurements. The sensor was originally included to stop the arm if it received a high-force jolt. These measurements help keep the drill bit from drifting sideways and getting stuck in the rock, effectively giving the rover a sense of touch.

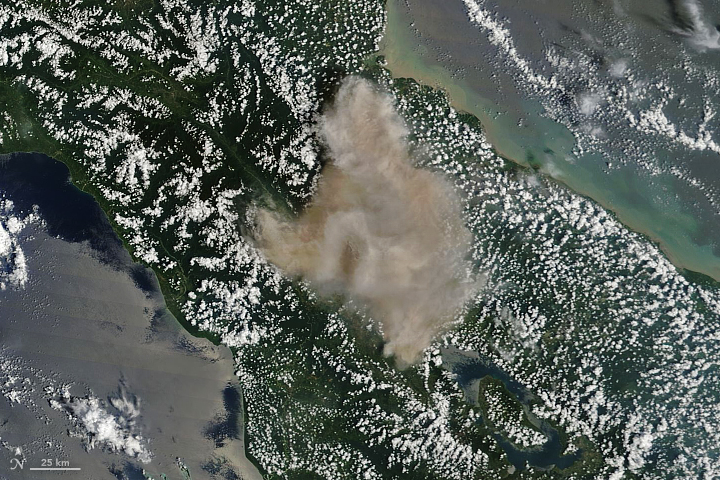

The test drill took place at a site called Lake Orcadie, located on the upper part of Vera Rubin Ridge, where Curiosity is currently exploring. The resulting hole, about 1 cm (half an inch) deep, was not deep enough to produce a scientific sample, but it indicated that the new method worked. Whereas the previous method worked much like a drill press, the new one is essentially freehand.

As Steven Lee, the deputy project manager of the Mars Science Laboratory at NASA’s Jet Propulsion Laboratory, explained:

“We’re now drilling on Mars more like the way you do at home. Humans are pretty good at re-centering the drill, almost without thinking about it. Programming Curiosity to do this by itself was challenging — especially when it wasn’t designed to do that.”

This new method was the result of months of hard work by JPL engineers, who practiced the technique on their testbed, a near-exact replica of Curiosity. As Doug Klein of JPL, one of Curiosity's sampling engineers, explained, "This is a really good sign for the new drilling method. Next, we have to drill a full-depth hole and demonstrate our new techniques for delivering the sample to Curiosity's two onboard labs."

Of course, the new method has some drawbacks. For one, leaving the drill in its extended position means that it can no longer reach the Collection and Handling for In-Situ Martian Rock Analysis (CHIMRA) device, which sieves and portions rock powder before delivering it to the rover's onboard labs. To address this, the engineers at JPL had to invent a way to deliver the powder without CHIMRA.

The JPL engineers tested this technique on Earth as well. It consists of the drill shaking grains of rock powder out of its bit and directly into the instruments' inlets. Although the tests on Earth were successful, atmospheric conditions and gravity are very different on the Red Planet, so it remains to be seen whether the technique will work there.

This drill test was the first of many that are planned. And while this first test didn't produce a full sample, Curiosity's science team is confident that it is a positive step toward resuming regular drilling. If the method proves effective, the team hopes to collect multiple samples from Vera Rubin Ridge, especially from its upper part. This area contains both gray and red rocks, the latter of which are rich in minerals that form in the presence of water.

Samples drilled from these rocks are expected to shed light on the origin of the ridge and its interaction with water. In the days ahead, Curiosity's engineers will evaluate the results and likely attempt another drill test nearby. If enough sample is collected, they will test portioning it out, using the rover's Mastcam to determine how much powder can be shaken from the drill bit.

Further Reading: NASA