In a recent post I wrote about a study that argued dark energy isn’t needed to explain the redshifts of distant supernovae. I also mentioned we shouldn’t rule out dark energy quite yet, because there are several independent measures of cosmic expansion that don’t require supernovae. Sure enough, a new study has measured cosmic expansion without all that mucking about with supernovae. The study confirms dark energy, but it also raises a few questions.

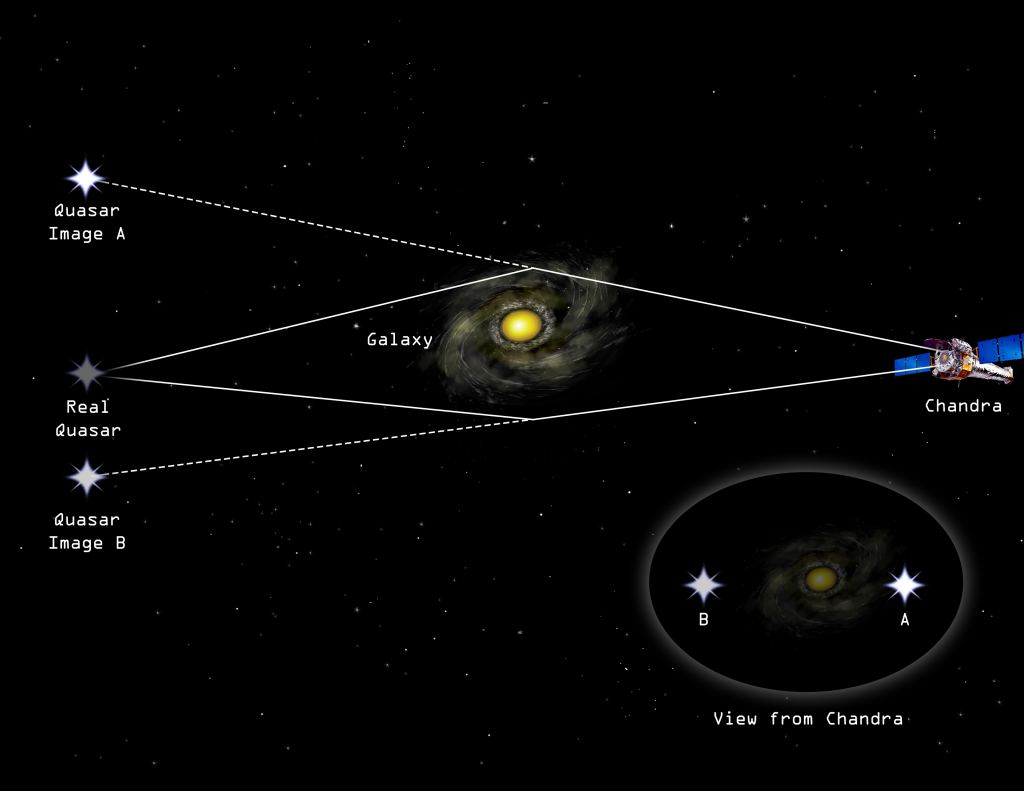

Rather than measuring the brightness of supernovae, this new study looks at an effect known as gravitational lensing. Since gravity is a curvature of space and time, a beam of light is deflected as it passes near a large mass. This effect was first observed by Arthur Eddington in 1919 and was one of the first confirmations of general relativity.

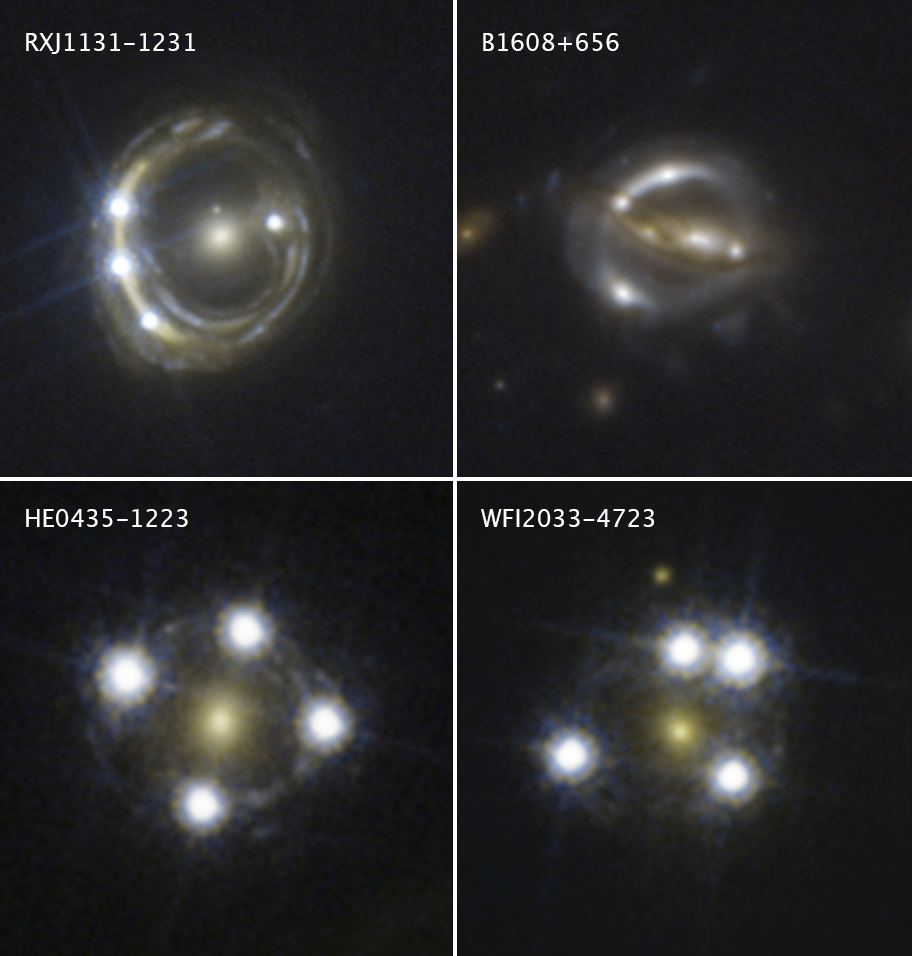

Sometimes this effect happens on a cosmic scale. If a distant quasar is far behind a galaxy, the light of the quasar is bent around the foreground galaxy, creating multiple images of the quasar. It is this gravitational lensing of distant quasars that was the focus of this new study.

So how does this measure cosmic expansion? Each lensed image of a quasar near a galaxy is produced by light that traveled a different path around the galaxy. Some paths are longer and some are shorter, so the light from the quasar takes a different amount of time to reach us along each path. Quasars don’t just produce a steady stream of light, but rather flicker slightly over time. By comparing when the same flicker shows up in each lensed quasar image, the team measured the time difference between the paths, and thus the difference in their lengths.
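To get a feel for the scale involved, here is a minimal Python sketch that turns a time delay between two lensed images into an extra path length. The 30-day delay is a made-up illustrative number, not a value from the study, and the function name is hypothetical. The sketch also treats the whole delay as extra travel distance, which ignores the additional delay caused by the lens galaxy's gravity itself.

```python
# Speed of light in km/s (exact by definition of the metre).
C_KM_PER_S = 299_792.458
SECONDS_PER_DAY = 86_400


def path_difference_km(delay_days):
    """Extra distance light travels if it arrives `delay_days` later.

    Simplification: the delay is treated as purely geometric, so the
    gravitational contribution to the delay is ignored.
    """
    return C_KM_PER_S * delay_days * SECONDS_PER_DAY


# Hypothetical delay between two images of the same quasar flicker.
delay_days = 30.0
print(f"Extra path length: {path_difference_km(delay_days):.3e} km")
```

Even a delay of weeks is a tiny difference compared with the billions of light years the light has traveled, which is why careful long-term monitoring of the flicker matters.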

Knowing the distance of each image path, the team could then calculate the size of the galaxy. That is different from its apparent size. Since the universe is expanding, the image of the galaxy is stretched on its way to us, so the galaxy appears larger than it actually is. By comparing the apparent size of the galaxy with its actual size as calculated by the lensed quasar, you know how much the cosmos has expanded. The team did this with lots of lensed quasars and was able to calculate the rate of cosmic expansion.

Cosmic expansion is typically expressed by the Hubble constant. This latest research got a value of 74 (km/s)/Mpc for the Hubble constant, which is just a bit higher than supernovae measurements. Given the uncertainty range, the supernova and lensing measures agree.
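If the units look odd, it helps to read the Hubble constant as a speed per distance: for every megaparsec of separation, a galaxy recedes that many kilometers per second faster. A minimal sketch of that relation, assuming the simple linear Hubble law (a good approximation only for relatively nearby galaxies) and a hypothetical distance of 100 Mpc:

```python
def recession_velocity_km_s(hubble_constant, distance_mpc):
    """Linear Hubble law: velocity = H0 * distance.

    hubble_constant is in (km/s)/Mpc and distance_mpc in Mpc,
    so the result is in km/s.
    """
    return hubble_constant * distance_mpc


# 74 (km/s)/Mpc is the lensing value quoted above; 100 Mpc is an
# arbitrary example distance, not a number from the study.
print(recession_velocity_km_s(74.0, 100.0), "km/s")
```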

But these measurements don’t agree with other measures, such as those from the cosmic microwave background, that give a value around 67 (km/s)/Mpc. This is a huge problem. We now have multiple measures of the Hubble constant using completely independent methods, and they don’t agree. We are moving beyond the so-called Hubble tension into outright contradiction.
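How bad is the disagreement? A common rough measure is the difference between two values divided by their combined uncertainty, which is where the "sigma" in the reference title comes from. The sketch below assumes independent, Gaussian errors. The 2.4% uncertainty on the lensing value is taken from the reference title; the 0.5 (km/s)/Mpc uncertainty on the microwave background value is an assumption for illustration, since this post doesn't quote one. With rounded inputs like these, the result should not be expected to reproduce the paper's 5.3 sigma, which rests on its own, more careful analysis.

```python
import math


def tension_sigma(value_a, sigma_a, value_b, sigma_b):
    """Difference between two measurements in units of their combined error.

    Assumes the two errors are independent and Gaussian, so they add
    in quadrature.
    """
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)


lensing_h0 = 74.0                    # (km/s)/Mpc, as quoted in this post
lensing_sigma = 0.024 * lensing_h0   # 2.4%, from the reference title
cmb_h0 = 67.0                        # (km/s)/Mpc, as quoted in this post
cmb_sigma = 0.5                      # ASSUMED for illustration only

tension = tension_sigma(lensing_h0, lensing_sigma, cmb_h0, cmb_sigma)
print(f"Tension: {tension:.1f} sigma")
```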

So tweaking supernovae results doesn’t get rid of dark energy. It still looks like dark energy is very real. But it is now clear that there is something we don’t understand about it. It’s a mystery more data might solve eventually, but at the moment more data is giving us more questions than answers.

Reference: Wong, Kenneth C., et al. “H0LiCOW XIII. A 2.4% measurement of H0 from lensed quasars: 5.3σ tension between early and late-Universe probes.”

“We now have multiple measures of the Hubble constant using completely independent methods, and they don’t agree. We are moving beyond the so-called Hubble tension into outright contradiction.”

I don’t think that is how it works, since we also have measurements that straddle the extremes without tension [ https://sci.esa.int/web/planck/-/60504-measurements-of-the-hubble-constant ]. (There are more of these than shown in the diagram, such as some of the red giant branch tip measurements.) There are several explanations for something like that, including remaining systematic errors which are unaccounted for.

The history of other measurements may be illuminating on the problems, for example the apparent bias and hugely underestimated uncertainty in early light speed measurements [ https://arxiv.org/pdf/physics/0508199.pdf ].

“Franklin terms these time-dependent shifts and trends ‘bandwagon effects,’ and discusses a particularly dramatic case with |η+−|, the parameter that measures CP violation. As seen in Fig. 1, measurements of |η+−| before 1973 are systematically different from those after 1973.

Henrion and Fischhoff graphed experimental reports on the speed of light, as a function of the year of the experiment. Their graph (Fig. 2) shows that experimental results tend to cluster around a certain value for many years, and then suddenly jump to cluster around a new value, often many error bars from the previously accepted value. Two such jumps occur for the speed of light. Again, the bias is clearly present in the graph, but the exact mechanism by which it occurred is harder to discern.”

Do you think it is possible that the speed of light is slowed by being subjected to varying spacetime curvature?

Of course, methods and data coverage improve, but we are also targeting harder problems so the (estimated) uncertainties are about the same.

I now notice that the text speaks directly to the tension between the current extreme measurements:

“A result can disagree with the average of all previous experiments by five standard deviations and still be right! ”

So maybe when we hit a 10 sigma tension we should start to worry, or be happy, that new physics may be the best explanation!?